Tales from the jar side: Vision models do inventory, Chat Oriented Programming, Gemini context caching, and more

Three weeks ago I sent in my hearing aid to be repaired. I've heard nothing since. (rimshot, muted)

Welcome, fellow jarheads, to Tales from the jar side, the Kousen IT newsletter, for the week of June 23 - 30, 2024. This week I taught week three of my Spring in 3 Weeks course on the O’Reilly Learning Platform.

Regular readers of, listeners to, and video viewers of this newsletter are affectionately known as jarheads, and are far more intelligent, sophisticated, and attractive than the average newsletter reader or listener or viewer. If you wish to become a jarhead, please subscribe.

As a reminder, when this message is truncated in email, click on the title to open it in a browser tab formatted properly for both the web and mobile.

Bookshelf Inventory — A Vision Model Challenge

I was talking to my editor about vision models (AI models that can read and interpret uploaded images) and where they fit in my upcoming book, working title Adding AI to Java. I told her I’ve been surprised how often I use them. Whenever I’m faced with some weird error message, an unfamiliar list of settings on a web page, or a menu of items I haven’t used, I take a screenshot of that area, upload it to GPT-4o or Claude 3.5, and ask about it. That works better than I thought it would, and way better than it did a few months ago.

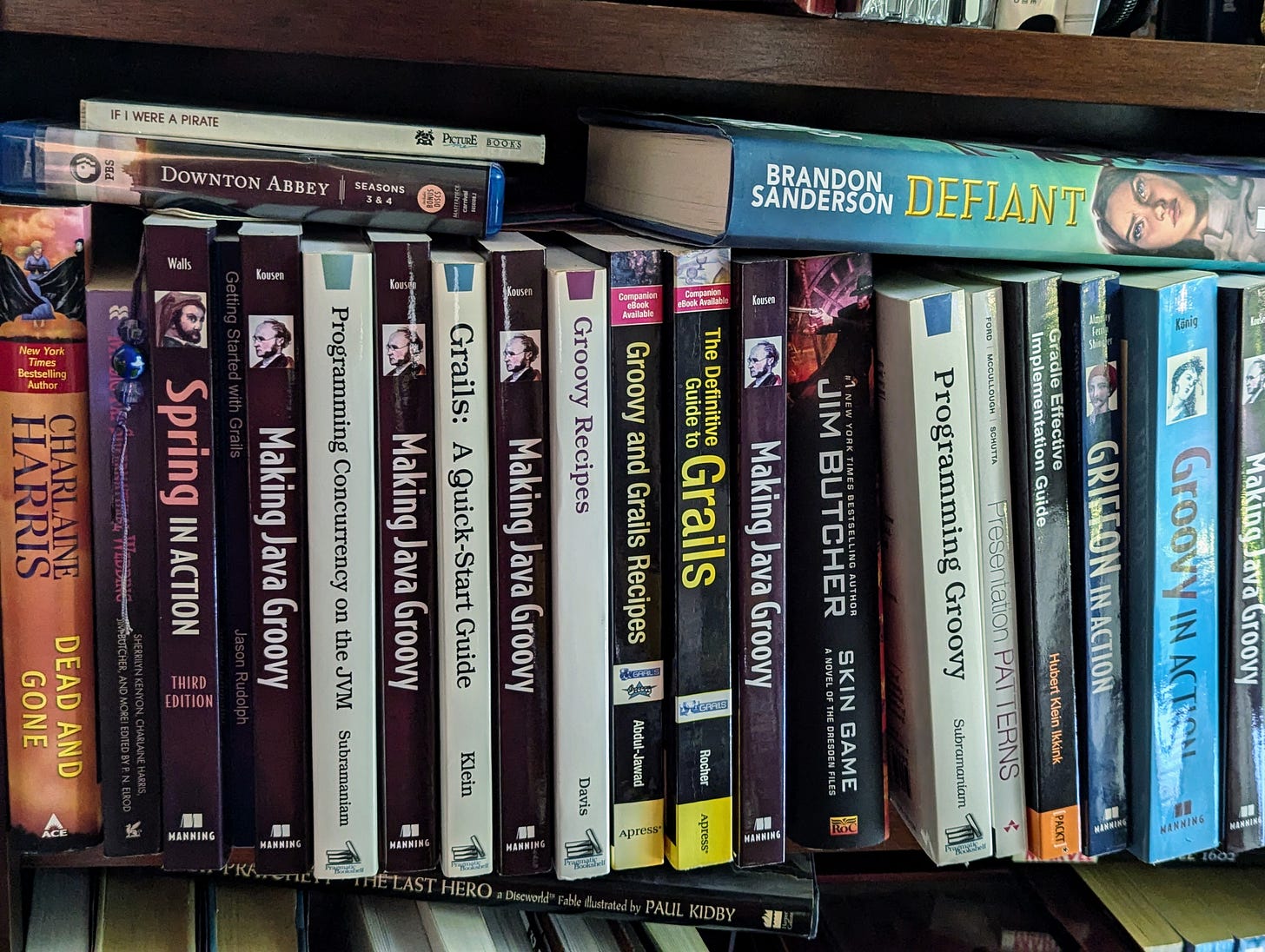

I decided to take a picture of a bookshelf in my house and ask the various AI tools whether they could take inventory for me. I picked an old shelf full of books I haven’t touched in at least ten years:

That shelf was filled shortly after my Making Java Groovy book came out, which is no doubt why my remaining four (!) copies are there, not to mention all the other Groovy / Grails / Gradle books. I then invoked a variety of vision models programmatically:

That’s five different vision models, though four of them are based on the llava model (which is basically llama with a “v” for vision).

The results were, shall we say, not great. None of those models is very large, of course, and they’re all running on my local machine (via Ollama), but I thought they would do better than they did.
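For anyone who wants to try this at home, here is a minimal sketch, not necessarily the code behind these results, of sending one photo to each local model. It assumes Ollama is running on its default port (`http://localhost:11434`) and uses Ollama's `/api/generate` REST endpoint, which accepts base64-encoded images in an `images` array. The file name `bookshelf.jpg`, the prompt, and the class and method names are placeholders.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Base64;
import java.util.List;

class OllamaVision {
    // Assumption: Ollama is running locally on its default port.
    static final URI GENERATE = URI.create("http://localhost:11434/api/generate");

    // The five local vision models discussed in the text.
    static final List<String> MODELS =
            List.of("llava", "llava-llama3", "llava-phi3", "bakllava", "moondream");

    // Minimal JSON string escaping so the prompt can contain quotes or newlines.
    static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"' -> sb.append("\\\"");
                case '\\' -> sb.append("\\\\");
                case '\n' -> sb.append("\\n");
                case '\r' -> sb.append("\\r");
                case '\t' -> sb.append("\\t");
                default -> {
                    if (c < 0x20) sb.append(String.format("\\u%04x", (int) c));
                    else sb.append(c);
                }
            }
        }
        return sb.append('"').toString();
    }

    // Request body for /api/generate; "stream": false asks for a single JSON reply.
    static String requestBody(String model, String prompt, byte[] image) {
        String base64 = Base64.getEncoder().encodeToString(image);
        return """
                {"model": %s, "prompt": %s, "images": [%s], "stream": false}"""
                .formatted(quote(model), quote(prompt), quote(base64));
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        byte[] image = Files.readAllBytes(Path.of("bookshelf.jpg")); // hypothetical file
        String prompt = "Please take an inventory of the books on this shelf.";
        HttpClient client = HttpClient.newHttpClient();
        for (String model : MODELS) {
            HttpRequest request = HttpRequest.newBuilder(GENERATE)
                    .header("Content-Type", "application/json")
                    .POST(HttpRequest.BodyPublishers.ofString(requestBody(model, prompt, image)))
                    .build();
            HttpResponse<String> response =
                    client.send(request, HttpResponse.BodyHandlers.ofString());
            System.out.println("Reading image using " + model);
            System.out.println(response.body()); // generated text is in the "response" field
        }
    }
}
```

Each call prints the model's raw JSON reply; a real program would parse out the `response` field with a JSON library rather than printing the whole thing.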

Reading image using llava

It looks like you have a collection of books that span various genres and subjects. Here's an inventory based on the visible titles:

1. "The Hitchhiker's Guide to the Galaxy" by Douglas Adams (Paperback)

2. "Make Mine a Million" by Janet Gray (Paperback)

3. "The World is Flat 2.0" by Thomas L. Friedman (Paperback)

4. "Jurassic Park" by Michael Crichton (Hardcover)

5. "Moby Dick" by Herman Melville (Hardcover)

6. "The Da Vinci Code" by Dan Brown (Hardcover)

7. "To Kill a Mockingbird" by Harper Lee (Paperback)

8. "The Great Gatsby" by F. Scott Fitzgerald (Paperback)

9. "The Catcher in the Rye" by J.D. Salinger (Hardcover)

10. "The Stand" by Stephen King (Hardcover)

11. "The Lord of the Rings" by J.R. Tolkien (Hardcover)

12. "Infinite Jest" by David Foster Wallace (Paperback)

13. "Brave New World" by Aldous Huxley (Paperback)

14. "Sapiens: A Brief History of Humankind" by Yuval Noah Harari (Hardcover)

15. "The Immortals of Tehran" by Alex Kelsey and Anoosh Khandani (Hardcover)

Please note that some titles might be partially obscured or not fully visible, so this list is based on the titles that are clearly legible in the image.

Frankly, it’s hard to be more wrong than that. None of those suggested books appear on the shelf in question.

Reading image using llava-llama3

The shelf contains a total of 24 books. Here's an inventory:

1. **Dawn Towner** by **Brandon Sanderson**

2. **Defiant (Kingkiller Chronicles, Book 2)**

3. **The Lion Hunters: The Golden Ghost (Book 9)**

4. **Gentleman Jack: A Rogue Tooth Fairy**

5. **Making of a Hero: An Alex Secord Novel**

6. **Al-Malafun: Al-Mufradah (Arabic Edition)**

7. **The Lion Hunters: The Black Viper (Book 8)**

8. **The Lion Hunters: The Lion's Den (Book 1)**

9. **Froggy and Friends: 12 Adventure Games (Froggy Rides Again!)**

10. **Jewel of the Desert: A Novel about Saudi Arabia by a Saudi Citizen**

11. **Al-Mufradah Al-Mufradah (Arabic Edition)**

12. **Making of a Hero: An Alex Secord Novel**

13. **Gentleman Jack: A Rogue Tooth Fairy**

14. **Froggy and Friends: 12 Adventure Games (Froggy Rides Again!)**

15. **Al-Mufradah Al-Mufradah (Arabic Edition)**

16. **The Lion Hunters: The Lion's Den (Book 1)**

17. **The Lion Hunters: The Black Viper (Book 8)**

18. **Gentleman Jack: A Rogue Tooth Fairy**

19. **Al-Mufradah Al-Mufradah (Arabic Edition)**

20. **Making of a Hero: An Alex Secord Novel**

21. **The Lion Hunters: The Golden Ghost (Book 9)**

22. **Gentleman Jack: A Rogue Tooth Fairy**

23. **Al-Mufradah Al-Mufradah (Arabic Edition)**

24. **Making of a Hero: An Alex Secord Novel**

Please note that this is not an exhaustive list and may contain minor errors in book titles or authors due to the limitations of image recognition.

That’s arguably worse, and I really wonder why it thinks I have so many books in Arabic, when in fact I don’t have any.

Sigh. Moving on.

Reading image using llava-phi3

| Title | Author(s) | Year | Color |
| --- | --- | --- | --- |
| Spring Mania: A Love Story in New York City and London | Marion Hulbert | 2011 | Red |
| The Girl from Sunflower Grove, Book One of the Meadowlands Series | Anne Marie Pace | 2012 | White |
| Delightful Devastation: Book Two of the Meadowlands Series | Anne Marie Pace | 2013 | Black |
| I Am Malala: A Memoir | Malala Yousafzai | 2014 | Purple |
| The Girl Who Played Go: An Epic Quest to Become World Champion in a Game That Has Been Around Forever, Now with the Latest Research on How it Works | Mark D. Moffat and Christy Hoffman | 2015 | White |

Kudos for at least trying to make a table out of the response, but again, the intersection of the set of books it’s suggesting and the set of books I actually have is empty.

Reading image using bakllava

1. Making a Success of Marriage

2. Spring into Action

3. Ghost in the Machine

4. Business model & design Patterns

5. Learning from other worlds

6. The Seventh Generation: Survival and Sustainability in a New Ecology

7. Defeat of Typography

8. High Priest of Witchcraft

9. Software Engineering and Reusable Component Based Development

10. Learning from other worlds

It did find Spring in Action, though it got the title wrong. Other than that, nope. I still think that’s a great name for a model, though.

And finally:

Reading image using moondream

1. Brandon Sanderson: Defiant (hardcover)

One book, but at least it’s there.

I expected that the commercial tools would do a better job, and sure enough, they did. I didn’t call them programmatically, though. I just went to the website, uploaded the bookshelf image, and asked.

Here’s Claude 3.5 Sonnet, the latest and greatest model from Anthropic:

Here's an inventory of the books visible on the shelf, listed from left to right:

1. Charlaine Harris - Dead and Gone

2. Spring in Action (Third Edition)

3. Making Java Groovy

4. Programming Concurrency on the JVM

5. Grails: A Quick-Start Guide

6. Making Java Groovy

7. Groovy Recipes

8. The Definitive Guide to Grails

9. Groovy and Grails Recipes

10. JMX

11. BUTCHER (likely Jim Butcher, but full title not visible)

12. Programming Groovy

13. Groovy in Action

14. Groovy Programming

15. Brandon Sanderson - Defiant

Additionally, there are a few other items visible:

- "If I Were a Pirate" (appears to be a children's book on the top shelf)

- Downton Abbey Seasons 3 & 4 (Blu-ray or DVD set)

- A book titled "The Last Hero" by Paul Kirby visible at the bottom

Note that some books may be partially obscured or have titles not fully visible in the image.

It skipped a couple, got confused about Jim Butcher, and only noticed a couple of the duplicates, but hey, I could live with that.

How about Google’s Gemini?

The books on the shelf are:

Top Shelf:

* If I Were a Pirate

* Downton Abbey: Seasons 1-4

* Defiant by Brandon Sanderson

Middle Shelf:

* Walls

* (Unknown Title) by Kousen

* Telling Stories by Walls

* Spring in Action (Third Edition)

* Programming Concurrency on the JVM by Subramaniam

* Grails: A Quick-Start Guide by Klein

* Making Java Groovy

* Groovy Recipes by Davis

* Groovy and Grails Recipes by Abdul-Jawad

* The Definitive Guide to Grails 2 by Rocher

* Skin Game by Jim Butcher

* Programming Groovy by Subramaniam

* Test-Driven Development with Groovy by Hubner & Klein Ikkink

* Gradle Effective Implementation Guide

* Making Java Groovy

Bottom Shelf:

* (Unknown Title)

* Charlaine Harris: Dead and Gone

* (Unknown Title) by Menhine

* The Last Hero: (Discworld Fable) illustrated by Paul Kidby

Please note that some book titles are obscured in the image and cannot be identified.

Not as good as Claude, but a decent try, and certainly better than the open source models did.

Finally, what about GPT-4o?

Here's the inventory of the books on the shelf from the provided image:

1. **Charlaine Harris** - *Dead and Gone*

2. **Craig Walls** - *Spring in Action* (Third Edition)

3. **Jason Rudolph** - *Getting Started with Grails*

4. **Ken Kousen** - *Making Java Groovy* (multiple copies)

5. **Venkat Subramaniam** - *Programming Concurrency on the JVM*

6. **Dave Klein** - *Grails: A Quick-Start Guide*

7. **Bobby Warner, Benjamin Muschko, Dierk König, and Hamlet D'Arcy** - *Groovy Recipes*

8. **Benjamin Muschko and Hubert Klein Ikkink** - *Gradle in Action*

9. **Scott Davis** - *Groovy Recipes* (another edition)

10. **Jim Butcher** - *Skin Game: A Novel of the Dresden Files*

11. **Guillaume Laforge, Dierk König, Paul King, Cédric Champeau, and Hamlet D'Arcy** - *The Definitive Guide to Grails 2*

12. **Paul King, Guillaume Laforge, Cédric Champeau, Jochen Theodorou, and Jon Skeet** - *Groovy in Action* (Second Edition)

13. **Venkat Subramaniam** - *Programming Groovy 2*

14. **Derek Collison and David King** - *Presentations Patterns*

Additionally, there are a few other items such as:

- **Blu-ray/DVD** of *Downton Abbey* (Seasons 3 & 4)

- **Children's book** - *If I Were a Pirate* by *Tadpole Books*

- **Brandon Sanderson** - *Defiant*

If you need more detailed information or if I missed any specific title, please let me know!

That’s remarkably close. I don’t know that I’d rely on it in general, but it gives me hope that we’re getting closer to a practical tool.

CHOP

Steve Yegge writes blog posts very infrequently. I tend to notice them once every couple of years. But I know that if one comes out, I need to set aside an afternoon and dig in.

This week he wrote what is sure to be a widely discussed post called The Death of the Junior Developer. Naturally, as I start my position as a professor of Computer Science at Trinity College (Hartford), I’m interested in that topic.

He pointed out an interesting dynamic that hadn’t occurred to me. A lot of people have noticed that companies are trying to replace junior developers with AI tools. What’s new is that with the advent of GPT-4o, we’ve crossed a threshold. The capability that distinguishes senior developers from juniors is that senior developers know, more or less, what they want, and they usually recognize it when they see it and notice when they don’t. They give instructions to junior developers and evaluate the results, and then come up with the next steps, in a cycle that repeats until the software is done (for varying values of done).

The thing is, as the AI tools improve, that process of training new developers is very similar to what you would do when interacting with an AI tool. You ask it for something, it produces code of wildly varying quality, you tell it what it did wrong and try again. Instead of training a person, you’re training the AI, but at least it never gets tired or hostile and gets back to you right away.

Yegge referred to this as CHOP, which stands for Chat Oriented Programming, a term that is almost guaranteed to trigger a gag reflex in a lot of developers. Still, he argued that GPT-4o and Claude 3.5 (and maybe Gemini 1.5 Pro) have finally reached the point where they are good enough to make the process work.

Of course, the AI tools never really learn anything. You’re not changing the weights in their neural networks — you’re just giving feedback during the current conversation, which they use to customize their next response. In a given conversation, AI tools are getting better at retaining more and more information (what we call the context window), but tomorrow they’re right back where they started.

What about those junior developers? Yegge thinks they’re in serious trouble, and he’s probably right. He recommends they start using those tools right away and get really good at them, effectively transforming themselves from junior developers to senior developers as quickly as possible.

That’s a lot to ask, but it might be doable. Lots of studies say that an AI model can help a junior person in any field improve rapidly, mostly because they don’t get as stuck on the fundamental tasks that everybody in the field knows except them.

For me, the best feature of AI tools is that they enable me to do projects that I would otherwise abandon, not because those projects aren’t doable, but because they involve too many features I either don’t know or haven’t used recently. Now I can just ask an AI tool for that stuff, tune it to my needs, and move on.

I also definitely plan to try to help my kids at Trinity in the Fall follow this path. I’m going to have to push them to do tasks I know they can’t handle, but with a decent AI tool at hand, they ought to be able to make progress. The hard part will be coming up with decent challenges for them to do. I’ll let you know how that works out.

But speaking of Chat Oriented Programming, I experienced it myself this week.

Context Caching

I mentioned that the AI tools retain information from previous questions and answers. That’s only true when you interact with them on their respective web sites. Every question you send to an AI programmatically, by contrast, stands alone: the model retains nothing from one request to the next. In IT terms, they’re stateless services.

My favorite related joke:

Me: I think all services should be stateless.

You: Really, why do you think that?

Me: Why do I think what?

(I may have included that joke in a recent newsletter, but I generally assume nobody is reading any of them, so it’s probably okay to repeat a gag now and then. Or maybe more often than that.)

The way you make an AI remember information is to take each response you get back and add both the question and the answer to the start of your next question. Each succeeding question gets bigger, because it’s adding in another question/answer pair, but that’s considered worth it because the resulting answers are much better.
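Here is a minimal sketch of that bookkeeping, assuming a generic chat API. The hypothetical `Conversation` class keeps every question and answer in a list and sends the whole list with each new request. The `model` function stands in for the real HTTP call, and the `user`/`assistant` role names follow a common chat API convention (Gemini, for one, calls the second role `model`).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

// One turn of the conversation; role names are an assumption (see above).
record ChatMessage(String role, String text) {}

class Conversation {
    private final List<ChatMessage> history = new ArrayList<>();
    // Stand-in for a stateless API call: it sees only the messages passed to it.
    private final Function<List<ChatMessage>, String> model;

    Conversation(Function<List<ChatMessage>, String> model) {
        this.model = model;
    }

    String ask(String question) {
        history.add(new ChatMessage("user", question));
        // Every request carries the whole conversation so far.
        String answer = model.apply(List.copyOf(history));
        history.add(new ChatMessage("assistant", answer));
        return answer;
    }

    int size() {
        return history.size();
    }

    public static void main(String[] args) {
        // A fake "model" that just reports how many messages it was sent.
        Conversation chat = new Conversation(msgs -> "messages sent: " + msgs.size());
        System.out.println(chat.ask("What is Mockito?"));             // messages sent: 1
        System.out.println(chat.ask("How do I mock a final class?")); // messages sent: 3
    }
}
```

The second request carries three messages (first question, first answer, second question), which is exactly why each request keeps getting bigger.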

There’s a limit, of course, and that limit is called the context window. It’s a measure of how many tokens you can fit in the request, and each tool has its own limit. Tokens cost money, too, but the consensus seems to be that it’s worth it to get decent results.

But here’s the thing: what if you want to add lots of information to the tool and ask lots of questions about that material, without sending the same data over and over again?

I mentioned in an earlier newsletter that Google’s Gemini 1.5 models are the champions in this realm. While GPT-4o can handle 128K tokens (where 1000 tokens is roughly 750 words in English, so 128K is about 96,000 words, or the length of a decent-sized novel), and Claude 3.5 Sonnet can handle 200K (at least on the paid version), Gemini 1.5 Flash can now handle 1 million tokens and Gemini 1.5 Pro allows a whopping 2 million (!) tokens in any given request. Wow. That is a lot of tokens. Their demos involve uploading entire movies.

In January of 2023, the Pragmatic Programmers published my “Pragmatic Express” book Mockito Made Clear. The Express books are short and ebook only. They’re supposed to max out at 75 pages, but they let me have 87. As it happens, when I extracted the text from the book and asked Gemini how many tokens the raw text requires, it came out to somewhere between 35 and 40 thousand tokens. That fits comfortably inside the context windows of all the large commercial models, and it’s still small enough to process quickly.
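The arithmetic in the last two paragraphs is easy to check. This sketch uses only the rule of thumb quoted above (1000 tokens is roughly 750 words) and the window sizes as quoted; real token counts depend on each model's tokenizer, which is why asking the model itself, as with Gemini here, gives a better number. The class and record names are illustrative.

```java
import java.util.List;

class ContextWindows {
    // Window sizes as quoted in the text, in tokens.
    record Window(String model, long tokens) {}

    static final List<Window> WINDOWS = List.of(
            new Window("GPT-4o", 128_000),
            new Window("Claude 3.5", 200_000),
            new Window("Gemini 1.5 Flash", 1_000_000),
            new Window("Gemini 1.5 Pro", 2_000_000));

    // Rule of thumb: 1000 tokens is roughly 750 English words.
    static long tokensToWords(long tokens) {
        return tokens * 750 / 1000;
    }

    // The inverse, rounded up so a prompt is never underestimated.
    static long wordsToTokens(long words) {
        return (words * 1000 + 749) / 750;
    }

    static boolean fits(long promptTokens, Window window) {
        return promptTokens <= window.tokens();
    }

    public static void main(String[] args) {
        long book = 40_000; // upper end of the Mockito Made Clear estimate
        for (Window w : WINDOWS) {
            System.out.printf("%-16s %,10d tokens ~ %,10d words, book fits: %b%n",
                    w.model(), w.tokens(), tokensToWords(w.tokens()), fits(book, w));
        }
    }
}
```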

What Gemini offers for this in its API is called context caching. You can ask Gemini to take a certain amount of data and store it temporarily, so it can be included in subsequent requests without uploading it all over again.

My problem is, if you look at the section on context caching in the documentation, you’ll see that the options to do it are in Python or Node.js only. As a Java person, I need a REST API or I’m out of luck.

Fortunately, they have one, but unfortunately, it’s ugly, hard to read, and a mess. But hey, if it were easy, anybody could do it.

Truer words were never spoken. Besides, I have Claude, and GPT-4o, and even Gemini if I need them, right?

Long story short (way, way, way too late), I finally got it to work. I had to go on the Discord server for Gemini to ask about some features I couldn’t figure out, but fortunately a couple of kind souls helped fill in the gaps. Now I have a working demo in which I upload my book into a cache and then ask a handful of questions about it, and it all seems to be working.
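For Java readers in the same position, here is a minimal sketch of the two REST calls involved, using only the JDK's `HttpClient`. It follows the curl examples in Google's context caching documentation as I understand them from mid-2024: a `POST` to `v1beta/cachedContents` creates the cache and returns its `name`, and each question is a `generateContent` request that refers to that name in its `cachedContent` field. The model version, the `GOOGLE_API_KEY` environment variable, the file name, the ten-minute TTL, and the regex-based name extraction (a stand-in for a real JSON library) are all assumptions, not the code in the book's repository.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Base64;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class GeminiCacheSketch {
    static final String BASE = "https://generativelanguage.googleapis.com/v1beta";
    // Assumption: a Gemini 1.5 model version that supports context caching.
    static final String MODEL = "models/gemini-1.5-flash-001";

    // Minimal JSON string escaping (a real project would use a JSON library).
    static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"' -> sb.append("\\\"");
                case '\\' -> sb.append("\\\\");
                case '\n' -> sb.append("\\n");
                case '\r' -> sb.append("\\r");
                case '\t' -> sb.append("\\t");
                default -> {
                    if (c < 0x20) sb.append(String.format("\\u%04x", (int) c));
                    else sb.append(c);
                }
            }
        }
        return sb.append('"').toString();
    }

    // Body for POST {BASE}/cachedContents: the book is uploaded once, base64-encoded.
    static String createCacheBody(String bookText, int ttlSeconds) {
        String data = Base64.getEncoder()
                .encodeToString(bookText.getBytes(StandardCharsets.UTF_8));
        return """
                {"model": %s,
                 "contents": [{"role": "user", "parts": [{"inline_data": {"mime_type": "text/plain", "data": %s}}]}],
                 "ttl": %s}"""
                .formatted(quote(MODEL), quote(data), quote(ttlSeconds + "s"));
    }

    // Body for POST {BASE}/{MODEL}:generateContent that points at the cache by name.
    static String questionBody(String cacheName, String question) {
        return """
                {"contents": [{"role": "user", "parts": [{"text": %s}]}],
                 "cachedContent": %s}"""
                .formatted(quote(question), quote(cacheName));
    }

    // Crude extraction of the cache's "name" field, e.g. "cachedContents/abc123".
    static String cacheName(String json) {
        Matcher m = Pattern.compile("\"name\"\\s*:\\s*\"([^\"]+)\"").matcher(json);
        if (!m.find()) throw new IllegalStateException("No cache name in: " + json);
        return m.group(1);
    }

    static String post(HttpClient client, String url, String body)
            throws IOException, InterruptedException {
        HttpRequest request = HttpRequest.newBuilder(URI.create(url))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
        return client.send(request, HttpResponse.BodyHandlers.ofString()).body();
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        String key = System.getenv("GOOGLE_API_KEY");
        String book = Files.readString(Path.of("mockito-made-clear.txt")); // hypothetical file
        HttpClient client = HttpClient.newHttpClient();

        // 1. Upload the book once; the cache lives for ten minutes.
        String cache = cacheName(post(client, BASE + "/cachedContents?key=" + key,
                createCacheBody(book, 600)));

        // 2. Ask a question that references the cache instead of resending the book.
        String answer = post(client, BASE + "/" + MODEL + ":generateContent?key=" + key,
                questionBody(cache, "What is the difference between a mock and a spy?"));
        System.out.println(answer);
    }
}
```

Only the first request carries the book; every later question sends just the short `cachedContent` reference plus the new text.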

I want to make a video about this, but keep in mind how much I had to explain just to tell you what I did. Can you imagine all that in a YouTube video? Yikes. Don’t even get me started on a good title or thumbnail, either, because there’s one more step I haven’t even mentioned yet.

CHOP-ping the Cache Down To Size

Once I had a working demo, I went to the Claude 3.5 web site and said it was time to do some refactoring. It helped me rewrite the code to eliminate a lot of the duplication and add some tests to make sure it was working properly.

Then I said I wanted to use the modern features in Java added between Java 8 and version 21, which was released in September 2023.

That turned out to be fun. Claude 3.5 has a training cut-off date of April 2024, only a couple of months before its release, so it knew all those features. Most of its training data is older than that, so it tends to default to answers in the older style, but at least it knows what I’m asking for when I push it toward the newer stuff.

After a couple of hours of going back and forth, I wound up with a really nice, elegant system that includes records, sealed interfaces, pattern matching for switch, and even virtual threads in the test cases which allowed all my questions to be asked in parallel. Pretty sweet, if I say so myself, and it’s definitely going into my book, at least until the reviewers ask me to take it back out again and do RAG (retrieval augmented generation, sigh) instead.
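As a rough illustration of how those features fit together, here is a small sketch. It is not the code from the repository: the types `Reply`, `Answer`, and `Failure` are hypothetical, and the `ask` function is a stand-in for the real Gemini call so the sketch runs offline. It needs Java 21 for record patterns in `switch` and `Executors.newVirtualThreadPerTaskExecutor()`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.Function;

// Hypothetical reply types: a sealed interface with exactly two record implementations.
sealed interface Reply permits Answer, Failure {}
record Answer(String question, String text) implements Reply {}
record Failure(String question, String error) implements Reply {}

class ModernJavaSketch {
    // Pattern matching for switch with record patterns; because Reply is sealed,
    // the switch is exhaustive without a default branch.
    static String describe(Reply reply) {
        return switch (reply) {
            case Answer(String q, String text) -> q + " -> " + text;
            case Failure(String q, String error) -> q + " failed: " + error;
        };
    }

    // Ask every question on its own virtual thread, then collect replies in order.
    static List<Reply> askAll(List<String> questions, Function<String, Reply> ask)
            throws InterruptedException, ExecutionException {
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            List<Future<Reply>> futures = questions.stream()
                    .map(q -> executor.submit(() -> ask.apply(q)))
                    .toList();
            List<Reply> replies = new ArrayList<>();
            for (Future<Reply> future : futures) {
                replies.add(future.get());
            }
            return replies;
        }
    }

    public static void main(String[] args) throws Exception {
        // Stand-in for the real API call, so the sketch runs offline.
        Function<String, Reply> ask = q -> {
            if (q.isBlank()) return new Failure(q, "empty question");
            return new Answer(q, "(answer to: " + q + ")");
        };
        for (Reply reply : askAll(List.of("What is a spy?", "  "), ask)) {
            System.out.println(describe(reply));
        }
    }
}
```

Because `Reply` is sealed, adding a third kind of reply later would make the `switch` in `describe` fail to compile until it is handled. Each question gets its own virtual thread, so several network-bound requests can be in flight at once.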

If you want to see the code, it’s in the com.kousenit.gemini package in this GitHub repository. I usually call that the book’s repository, or at least one of them, but now that’s changing.

Refactoring the GitHub repository

A couple of reviews have come back for the first five chapters of Adding AI to Java, and while they’ve been kind, they have definitely pointed out some … issues. One of the problems is a result of me trying to stuff all my examples into two repositories, one that uses Spring and one that doesn’t. They’ve both grown too large for the job, and, worse, my text doesn’t line up with them as well as it should.

As a result, I spent some time creating a new repository, which I’m planning to call ai_java_book, with a separate project for each chapter. Each of those projects will match the text as closely as possible. That will make the build process easier and speed up the tests as well, though maintaining it might be a bit of a pain. Since it’s already a pain, it’s high time I did this anyway.

Don’t go looking for that repository yet, though. I’m going to split everything up first and then upload it.

Spring AI

I need to mention one last thing before I get to the social media posts (i.e., the good stuff). Tomorrow I’m teaching a course on Spring AI on the O’Reilly Learning Platform, and preparing for that has been way more trouble than I expected (see the JFK image from above). I’m also helping out Craig Walls as he writes his Spring AI in Action book, so I really needed to dig into that this week.

Let’s just say that the changes in the API between version 0.8 and version 1.0-M1 (milestone 1) have been huge. It’s not just the API, either. The basic tools and techniques have changed considerably as well. I spent hours and hours rewriting my code to make it all work in the new system, and there’s no guarantee it won’t all change again before the 1.0 release.

(Speaking of funny jokes, according to the Spring releases calendar, the Spring AI 1.0 release is scheduled for this Tuesday. No, no, a world of no. At this rate they’ll be lucky to make it before Thanksgiving.)

Sigh. Life in the fast lane, I guess, or, as they say, when you live on the bleeding edge, don’t be surprised when you get cut.

Hopefully the course will go well anyway. As I like to say to my wife, “In the hands of a lesser instructor, this might be a problem,” but this one really has been difficult. Besides, the AI tools can’t help at all, because everything is way too new. That’s what they do worst, and I can verify that from experience.

Tweets and Toots

The Debate

Here are the only two items I want to post about the US Presidential debate this week. I sent this to Mastodon only about an hour before the debate started, which is probably why there are so few votes, but you get the sentiment:

Following along on Mastodon and Twitter, I very quickly realized I made the right decision to skip watching it.

The only other post I’ll add is this one:

Yup. I’m about done with the NY Times, and that’s been coming for a long time. Let’s move on.

map vs flatMap

Practically every functional language has a function called flatMap (or an equivalent), which transforms each element and flattens the result. Here each box is transformed into a kitten, but because they used map instead of flatMap, you still have two boxes instead of just one.

I’m not sure that explanation was worth the gag, but any functional programmers reading this probably laughed.
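For the non-functional programmers, here is the joke in Java streams terms, as a small sketch. The hypothetical `open` method turns a box into a list holding a kitten. With `map`, you get a list of lists (the boxes are still there); with `flatMap`, the nested lists are flattened into one.

```java
import java.util.List;

class MapVsFlatMap {
    // Hypothetical transformation: opening a box yields a (one-element) list of kittens.
    static List<String> open(String box) {
        return List.of("kitten from " + box);
    }

    // map keeps the wrapper: one inner list per box.
    static List<List<String>> withMap(List<String> boxes) {
        return boxes.stream().map(MapVsFlatMap::open).toList();
    }

    // flatMap transforms each element and flattens the results into a single list.
    static List<String> withFlatMap(List<String> boxes) {
        return boxes.stream().flatMap(box -> open(box).stream()).toList();
    }

    public static void main(String[] args) {
        List<String> boxes = List.of("box A", "box B");
        System.out.println(withMap(boxes));     // [[kitten from box A], [kitten from box B]]
        System.out.println(withFlatMap(boxes)); // [kitten from box A, kitten from box B]
    }
}
```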

Where DO they come from?

The fact that the text misspelled “eliminates” makes me wonder if the image was AI generated, but the sentiment is real.

Ignore previous instructions

This meme, “ignore all previous instructions,” has been spreading like wildfire. It’s considered a good way to jailbreak an AI tool. The idea is to persuade it to ignore all the limits that have been placed on it and do whatever you want rather than what the creators want.

For example:

Or this one:

or this:

or even this one:

Looks promising. Monkey, meet wrench. Enjoy.

Am I making an impact?

Maybe he should start a newsletter. Nah, nobody wants that.

That’s disturbing

Creepy, though he might help.

And finally,

Don’t grimace while you say it

I’m definitely pronouncing Grimace that way from now on.

Have a great week, everybody!

Last week:

Week 3 of Spring in 3 Weeks, on the O’Reilly Learning Platform

This week:

Spring AI, on the O’Reilly Learning Platform

Brian Sletten gave a great talk at JavaMUG in January of this year, ChatGPFFT : The Impact of Large Language Models. Pretty much an hour of content suggesting little chance of CHOP taking the place of Jr Devs. Your meme is another legitimate reason why jr devs aren't going away.

However, I think what we may see, sadly, in the short term, is companies either replacing developer attrition with CHOP on one end and forcing the change through an annual review process. But, gotta keep the junior devs in the orgs or the promo pipeline dries up. I clearly have opinions and some corporate world data points on the subject.

Unless you somehow managed to go back in time and impregnate all those students' mothers, your students will not be "your kids." (Sorry, that framing is a pet peeve of mine.) Your students might not be fully formed adults with adult responsibilities, as work colleagues are, but they are not your children.

With any luck, your students will have an open mind and treat you and your topics with respect. You can do the same. (I'm not offended if you remove this comment.)