Tales from the jar side: A Groovy Podcast, When NOT to use Mockito, Peanut butter testing, Genetic algorithms, and On the way to L2

I made a peek-a-boo reference last week and forgot to make this joke: Wound up in the hospital after a peek-a-boo accident. They put me in the ICU. Ha! (I remind you that this newsletter is free)

Welcome, fellow jarheads, to Tales from the jar side, the Kousen IT newsletter, for the week of December 19 - 26, 2021. This week I had no classes, but I’ve been busy with a couple of writing projects, and I was happy to do another Groovy Podcast.

I should also mention that this issue marks the three-year anniversary (yay!) of Tales from the jar side. The first issue was dated December 30, 2018, and appeared on the TinyLetter site. At the end of January, 2020, I switched to Substack and have been here ever since. All the earlier newsletters have been imported, however, so if you need to see any of the older issues, you can find them all in the archive. For free, of course. :)

(Wait, if the first newsletter was dated Dec 30, how can the current edition be the three-year anniversary? The last two years I waited until the last week of the year for the anniversary. Then I realized my newsletter that week (next week this time) would really be the first one of a new series, not the last one of the previous year. So I’m arguing this edition is the end of Year 3.

It’s a lot like when they had that debate about whether the new millennium started Jan 1, 2000 or Jan 1, 2001. My recommendation at the time was to have a party both days.)

When I first told my wife I was considering writing a newsletter, I said I worried that I wouldn’t have enough to say on a weekly basis. I think she’s still laughing at me. In fact, as long-term jarheads are painfully aware, my biggest problem with the newsletter is that I keep running up against the email length limit. Anyway, enjoy the last issue of year three.

If, despite everything, you wish to become a jarhead, subscribe using this button:

Groovy Podcast, with Sergio and Guillaume

This week I managed to record a new Groovy Podcast, this time with Sergio del Amo and Guillaume Laforge. Here’s the YouTube version:

Sergio is the developer advocate at OCI for what they call 2GM — the Groovy, Grails, and Micronaut projects. Guillaume (don’t call me Georgi) Laforge is the former head of the Groovy programming language, who currently works at Google on cloud-related projects.

We talked about the new Micronaut AOT module (7ms start up times!), the latest Grails 5.1 release, the whole Log4J2 CVE fiasco, and spent some time reminiscing about Stéphane Maldini, Grails contributor and co-founder of Project Reactor, who we lost far too soon. Both Sergio and I were aware of Stéphane, but Guillaume knew him personally, so he was able to share some memories with us.

When Doing Nothing Is Good

Recently I’ve been struck by how often the right thing to do is a negative. I’m mostly referring to software, but I’m sure there’s a more profound observation in there somewhere.

One example that I’ve dealt with frequently is in the Gradle build tool. If you look at the performance statistics against Maven, shown on this page, you’ll see there are two ways Gradle improves its speed:

Execute tasks as fast as possible. (Duh.)

Avoid redoing tasks that are up to date.

That latter idea is called task avoidance, and Gradle lives on it. One way the team implements it is by what they call incremental builds. The idea is that any task has inputs and outputs. Gradle checks to see if the inputs and outputs have changed. If not, the console prints “up to date” and Gradle skips the task.

Another way Gradle improves performance is by avoiding configuring tasks that the user doesn’t need. They have a whole section in the User Manual on how to arrange that in the build file. Gradle also has an extensive section on using a build cache, which tries to reuse the results of previously run tasks.

This week I encountered a new area of avoidance, unrelated to Gradle, when working with the Mockito framework for generating mocks and stubs. As I mentioned in a previous newsletter, I have a sample app that accesses a restful web service and processes the results. The goal is to show how many astronauts are aboard each spacecraft currently in space.

To implement this, I have an AstroGateway, which accesses the web service and converts the JSON results into Java records. That’s called from an AstroService, which post-processes the records to turn them into a map of spacecraft name to number of astronauts aboard it. The output of the service at the moment is:

7 astronauts aboard ISS

3 astronauts aboard Shenzhou 13

I wanted to test the AstroService, which is where Mockito comes in. I used Mockito to create a mock gateway which returns a specified group of astronauts when it’s called from the service. That way I can test the service in isolation. Any errors that result are definitely in the service, so they should be easy to find.

At the moment, my fake gateway results in:

2 astronauts aboard Babylon 5

4 astronauts aboard Cerritos

1 astronaut aboard Nostromo

(Babylon 5 is also the name of my new Mac, which I’m using to write this newsletter. The Cerritos is the ship on Star Trek: Lower Decks. Ellen Ripley is aboard the Nostromo, and rumor has it she needs to avoid setting down on LV-426 or she’s going to have a bad time. Other samples I have include the Jupiter 2, Voyager, and the Rocinante.)
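For readers who want to see the shape of that test setup, here is a minimal sketch. It uses a hand-written stub rather than Mockito so it runs with nothing but the JDK. The `Gateway` interface, the record fields, the `getAstroData()` method name, and the crew names are all assumptions made for illustration; the real classes in the repository may look different.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

// Assumed record shapes; the real ones in the repository may differ.
record Assignment(String craft, String name) {}
record AstroResponse(int number, String message, List<Assignment> people) {}

// Hypothetical abstraction that the service depends on.
interface Gateway {
    AstroResponse getResponse();
}

// The service under test: counts astronauts per spacecraft.
class AstroService {
    private final Gateway gateway;

    AstroService(Gateway gateway) {
        this.gateway = gateway;
    }

    // Hypothetical method name; returns craft name -> number aboard, sorted by craft.
    Map<String, Long> getAstroData() {
        return gateway.getResponse().people().stream()
                .collect(Collectors.groupingBy(
                        Assignment::craft, TreeMap::new, Collectors.counting()));
    }
}

class AstroServiceDemo {
    // A hand-written stub: no network and no parser, just canned (made-up) data.
    static Gateway fakeGateway() {
        return () -> new AstroResponse(7, "success", List.of(
                new Assignment("Babylon 5", "John Sheridan"),
                new Assignment("Babylon 5", "Susan Ivanova"),
                new Assignment("Cerritos", "Beckett Mariner"),
                new Assignment("Cerritos", "Brad Boimler"),
                new Assignment("Cerritos", "Sam Rutherford"),
                new Assignment("Cerritos", "D'Vana Tendi"),
                new Assignment("Nostromo", "Ellen Ripley")));
    }

    public static void main(String[] args) {
        AstroService service = new AstroService(fakeGateway());
        service.getAstroData().forEach((craft, count) ->
                System.out.printf("%d astronaut%s aboard %s%n",
                        count, count == 1 ? "" : "s", craft));
    }
}
```

Because the stub always returns the same seven people, any wrong count has to come from the service. With Mockito, the stub would be replaced by a mock configured to return the same response.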

The question is how to test the gateway. I have a test that makes sure the returned output is reasonable, i.e., the number of astronauts is greater than or equal to zero, and the list of name/craft combinations has a size that matches the total number of astronauts:

This is an integration test — calling the getResponse() method on the gateway accesses the internet to get the data and uses a parser to convert the data into records. From a testing point of view, the gateway itself has two dependencies: the networking library and the JSON parser. If the network goes down, or the parser fails, my test will fail even if the logic I wrote is correct. What can I do about that?

Here’s the current implementation of the AstroGateway class, in case you’re interested, from this GitHub repository. It’s simpler than it looks, but this is Java, so everything looks complicated.

To make a true unit test for the gateway, I would need to supply mock objects for both the networking classes and the parser. As it turns out, that’s not easy. The networking library is fairly involved, so I wouldn’t be able to mock a single class or method — the set of mocks will get complicated quickly. I think the parser wouldn’t be a problem, but it might, and the mocks will be tied directly to the parsing library I’m using. Also, I have no easy way to get the mocks into the gateway. I could add one (after all, every problem in computer science is solved by adding a layer of indirection), but is all this work worth doing?

As it turns out, according to the Mockito team, the answer is no. They have a pretty good discussion of similar issues on this wiki page in their GitHub repository. They make a few of the arguments I just listed, then conclude with:

Don't mock a type you don't own!

This is not a hard line, but crossing this line may have repercussions! (it most likely will)

The bottom line is, my integration test is good enough. When the people who wrote the framework are telling you not to use the framework, I’m going to listen.

The reasons I like this argument are:

It gives me less work to do, which is always a good thing.

It agrees with my own opinion, which makes me worry about confirmation bias but seems okay this time.

I’m really, really good at not doing stuff.

A Rabbi once told me that the commandments in the Bible are divided into two categories: positive and negative. The negative ones are great, because whenever you do something positive, someone could argue they did it better, but nobody can say that about negatives. I can perform a negative commandment as well as the greatest sage in history. For example, I just spent an hour catching up on my Twitter feed and managed not to murder anybody. I put that in the win column.

New No Fluff Talks

Each year around this time, those of us on the No Fluff, Just Stuff conference tour propose new talks for the coming season. That’s always a challenge. Sometimes it’s enough to revise an existing talk, but the organizers really want as many new ones as possible. I usually try to add at least two or three completely new ones, and revise several others.

(Then there is the inimitable Venkat Subramaniam. Legend has it he archives all his old talks and makes up all new ones every year, and I believe it. As usual, the real message is never compare yourself with Venkat Subramaniam on any scale whatsoever, except maybe height.)

I have several significantly revised talks, but here are my truly new ones for 2022:

Advanced Kotlin: Coroutines and More

Spring Data and JPA

Testcontainers for Easy Integration Testing

Property-based Testing: Concepts and Examples

Genetic Algorithms for Evolutionary Computing

The first two are based on talks and courses I’ve given before. I’m a bit concerned about the coroutines talk, because that stuff gets hard fast, but it’s important and I need to know the subject better. Nothing forces you to learn something like giving a talk on it.

The Testcontainers project is really cool. The project makes it easy to add databases (and more) to existing projects by automatically downloading and installing Docker containers. I’ve only used it a bit, and I sat in a training course on the O’Reilly Learning Platform by my friend Ben Muschko. Testcontainers is definitely a project on the rise, so it’s worth investing some time.

The property-based testing talk uses a Java library called jqwik. Property-based testing is usually abbreviated PBT. The tester determines certain properties of the system, and the library generates data for tests, including edge cases and corner cases, and runs everything for you.

Honestly, the biggest problem I have with PBT is that I still read it as Peanut Butter Testing, and I’m not sure what to do with that yet. I guess I have a few months to come up with some decent related jokes.
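To make the idea concrete, here is a hand-rolled sketch of a property check. It is not jqwik and does not use its API; it is a plain loop over randomly generated inputs, which is only the crudest version of what a real PBT library does (no shrinking, no smart edge-case generation). The `countByCraft` method and the property itself are hypothetical examples.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.Random;
import java.util.function.Function;
import java.util.stream.Collectors;

class CraftCountProperty {
    // Hypothetical code under test: how many times does each craft name occur?
    static Map<String, Long> countByCraft(List<String> crafts) {
        return crafts.stream()
                .collect(Collectors.groupingBy(Function.identity(), Collectors.counting()));
    }

    // The property: the per-craft counts always add up to the size of the input.
    static boolean countsSumToSize(List<String> crafts) {
        long total = countByCraft(crafts).values().stream()
                .mapToLong(Long::longValue)
                .sum();
        return total == crafts.size();
    }

    // Generate a random list of 0 to 19 craft names; the empty list is a useful edge case.
    static List<String> randomCrafts(Random random) {
        String[] names = {"ISS", "Shenzhou 13", "Babylon 5", "Cerritos", "Nostromo"};
        int size = random.nextInt(20);
        List<String> crafts = new ArrayList<>();
        for (int i = 0; i < size; i++) {
            crafts.add(names[random.nextInt(names.length)]);
        }
        return crafts;
    }

    // Check the property against many generated inputs; return the first failing input, if any.
    static Optional<List<String>> findCounterexample(int tries, long seed) {
        Random random = new Random(seed);
        for (int i = 0; i < tries; i++) {
            List<String> crafts = randomCrafts(random);
            if (!countsSumToSize(crafts)) {
                return Optional.of(crafts);
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        System.out.println(findCounterexample(1000, 42L)
                .map(failing -> "Property failed for " + failing)
                .orElse("Property held for all generated inputs"));
    }
}
```

The point is the shift in mindset: instead of picking a few inputs by hand, you state something that must be true for every input and let the generator go looking for trouble.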

The talk on genetic algorithms goes back to my time at United Technologies Research Center during the 90s. I spent a few years in a group that focused on Artificial Intelligence applications to engineering problems. I worked on neural networks (NN) a bit (a hot topic these days), but I didn’t really enjoy it.

The problems with NNs, at least for me, were:

Most of the time, they just confirm what you already know.

When they don’t, it’s often because of weird edge cases that mess up your data.

When they do find something new and interesting, they’re such a black box you have no idea why they gave the new results.

These days, they’re massively oversold, so clients expect miracles.

Like anything in statistics, it’s not about the processing, it’s about insight, and coming up with good insights is hard. For example, AlphaZero enormously impacted the chess world, but it’s hard to understand why it does what it does. It’s not exactly going to tell you.

Genetic algorithms are a completely different idea. With those, you encode possible solutions to a problem as a population of a series of 1s and 0s called chromosomes. Then you evaluate them, let them “breed” (usually by crossover) by taking segments of two parents to make a child, introduce an occasional mutation of a 1 to a 0 or a 0 to a 1, and let the population evolve over time, dropping the weakest members and reproducing the best ones until the optimal solution emerges.

Here’s an image from this site:

Genetic algorithms are a nonlinear optimization technique. The reason I haven’t talked about them much is that my knowledge of nonlinear statistics isn’t that great, and this stuff tends to get pretty heavily mathematical pretty quickly. I think if I can put together a few simple demos, however, this will be a fun talk.
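As a taste of what a simple demo might look like, here is a minimal sketch of the loop described above, applied to a toy problem often called OneMax: evolve a bit string toward all 1s. Everything here (the problem, the population size, the mutation rate, and the choice to keep the better half of each generation unchanged) is an assumption made for illustration, not a tuned or canonical implementation.

```java
import java.util.Arrays;
import java.util.Random;

class OneMaxGa {
    static final int BITS = 20;                 // chromosome length
    static final int POPULATION = 30;           // individuals per generation
    static final double MUTATION_RATE = 0.01;   // chance of flipping each bit

    // Fitness: the number of 1s in the chromosome.
    static int fitness(boolean[] chromosome) {
        int ones = 0;
        for (boolean bit : chromosome) {
            if (bit) ones++;
        }
        return ones;
    }

    static boolean[] randomChromosome(Random random) {
        boolean[] chromosome = new boolean[BITS];
        for (int i = 0; i < BITS; i++) {
            chromosome[i] = random.nextBoolean();
        }
        return chromosome;
    }

    // Single-point crossover: the head comes from one parent, the tail from the other.
    static boolean[] crossover(boolean[] a, boolean[] b, Random random) {
        int point = 1 + random.nextInt(BITS - 1);
        boolean[] child = new boolean[BITS];
        for (int i = 0; i < BITS; i++) {
            child[i] = i < point ? a[i] : b[i];
        }
        return child;
    }

    // Occasionally flip a 1 to a 0 or a 0 to a 1.
    static void mutate(boolean[] chromosome, Random random) {
        for (int i = 0; i < BITS; i++) {
            if (random.nextDouble() < MUTATION_RATE) {
                chromosome[i] = !chromosome[i];
            }
        }
    }

    // Sort the population so the fittest individuals come first.
    static void sortByFitness(boolean[][] population) {
        Arrays.sort(population, (a, b) -> Integer.compare(fitness(b), fitness(a)));
    }

    // Evolve for the given number of generations and return the best fitness found.
    static int evolve(int generations, long seed) {
        Random random = new Random(seed);
        boolean[][] population = new boolean[POPULATION][];
        for (int i = 0; i < POPULATION; i++) {
            population[i] = randomChromosome(random);
        }
        for (int g = 0; g < generations; g++) {
            sortByFitness(population);
            int survivors = POPULATION / 2;
            // Drop the weakest half and replace it with mutated children of the survivors.
            for (int i = survivors; i < POPULATION; i++) {
                boolean[] mom = population[random.nextInt(survivors)];
                boolean[] dad = population[random.nextInt(survivors)];
                boolean[] child = crossover(mom, dad, random);
                mutate(child, random);
                population[i] = child;
            }
        }
        sortByFitness(population);
        return fitness(population[0]);
    }

    public static void main(String[] args) {
        System.out.println("Best fitness at start: " + evolve(0, 42L));
        System.out.println("Best fitness after 100 generations: " + evolve(100, 42L));
    }
}
```

Since the survivors are copied over untouched, the best fitness can never go down from one generation to the next. Real problems need a cleverer encoding and fitness function, which is where the heavy math tends to creep in.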

Eye In The Sky

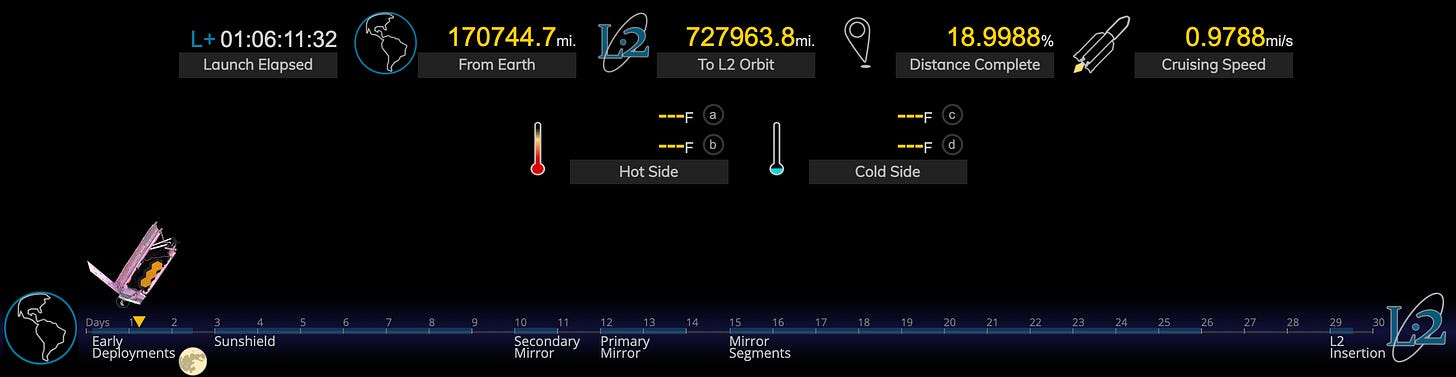

Like everybody, I’ve been captivated by the launch of the JWST, the James Webb Space Telescope, and its journey to the L2 point and subsequent unfolding. The main site has a page called Where Is Webb, which shows the current position.

Here’s a sample, as of early Sunday afternoon:

I like how the figure shows how far it is to the L2 point. L2 is one of the Lagrangian points:

Lagrangian points are locations where the gravitational pull between two objects balances.
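For a rough sense of scale, here is a back-of-the-envelope estimate of how far L2 is from Earth. It assumes the standard approximation for a small body (Earth, mass $M_E$) orbiting a much larger one (the Sun, mass $M_S$) at distance $R$, and uses rounded textbook values rather than anything from the Where Is Webb page.

$$
r_{L2} \approx R \left( \frac{M_E}{3 M_S} \right)^{1/3}
$$

With $R \approx 1.496 \times 10^{8}$ km and $M_E / M_S \approx 3.0 \times 10^{-6}$:

$$
r_{L2} \approx 1.496 \times 10^{8}\ \text{km} \times \left( 1.0 \times 10^{-6} \right)^{1/3} = 1.496 \times 10^{8}\ \text{km} \times 10^{-2} \approx 1.5 \times 10^{6}\ \text{km}
$$

That works out to roughly 1.5 million km from Earth, on the side facing away from the Sun.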

This is all very stressful, given the complexity of the procedures and the fact that if something goes wrong, you’ve basically got a paperweight. This page from the Planetary Society details all the steps involved in getting ready over the next six months.

Good Tweets

(If you can’t see the image, it’s a PS2 and a PS3 duct-taped together to make a PS5. :)

This is the first of a long tweet storm by Glen Weldon, which he retweets every year. It’s irreverent and very funny.

Weldon lives for this time of year. Here’s another of his:

This is for my developer friends:

If you’re curious, here’s a page discussing SQL injection attacks.

This is a wild optical illusion:

Finally, enjoy this commentary on NFTs:

Enjoy your holiday break.

As a reminder, you can see all my upcoming training courses on the O’Reilly Learning Platform here and all the upcoming NFJS Virtual Workshops here.

Last week:

No classes.

Recorded a Groovy Podcast.

This week:

No classes.

Lots of writing, both for a client and for my new Mockito project.

So, there is a third way of testing your AstroService which would not require you to mock anything at class level yet make your tests independent of network or availability of the web service your code talks to - mock out the http responses of the web service. There are many libraries that can do it but my favourite one is WireMock. Hopefully it’s cause it’s good and not because I personally know the creator… 🙂