Tales from the jar side: Uncensored Java AI, Pirate audio in text messages, LangChain4J used my video 😀, and the usual tweets and toots

I replaced my rooster with a duck. Now I wake up at the quack of dawn. (rimshot)

Welcome, fellow jarheads, to Tales from the jar side, the Kousen IT newsletter, for the week of January 28 - February 4, 2024. My big event this week was giving a private course with my friend Will Provost on JUnit, Hamcrest, and Mockito.

Here are the regular info messages:

Regular readers of, listeners to, and video viewers of this newsletter are affectionately known as jarheads, and are far more intelligent, sophisticated, and attractive than the average newsletter reader or listener or viewer. If you wish to become a jarhead, please subscribe using this button:

As a reminder, when this message is truncated in email, click on the title to open it in a browser tab formatted properly for both the web and mobile.

Uncensored Java AI

I published a new video this week on accessing the uncensored AI models using Java.

That relies on the Ollama project, which lets you install AI models (like Facebook’s Llama 2) on your local machine. That has a couple of benefits:

You don’t have to pay a provider for the service, and

You don’t have to worry about sending any information off site

I’m tempted to call it “free,” but that’s not strictly true since the model still needs your own resources to run. Probably better to say it’s local, so it’s using resources you’ve already acquired. Of course, that means it’s limited to what you can supply.

For example, the Llama 2 model on the web site lists three versions:

llama2:7b

llama2:13b

llama2:70b

The number after the colon represents the number of parameters in the model, in billions. Whether you can run the bigger ones or not depends on how much memory (RAM) you have. They estimate that to run the 7b model takes at least 8GB, the 13b model takes at least 16GB, and the 70b model requires a minimum of 64GB of RAM to run at all. Again, those are minima — you probably need more than that to run them comfortably.

Not all of the models are well-labeled. Earlier this week I tried loading one of the 30b models on my machine and quickly brought my laptop to its knees. I had to kill the process and wait for some of that RAM to be reclaimed before my laptop would even respond again.
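A quick pre-flight check can save a laptop from that fate. The sketch below hard-codes the three minimum-RAM estimates quoted above and compares them against an installed-RAM figure you supply. The class name, the method names, and the 16GB example machine are all made up for illustration, and the 30b model is left out because no estimate for it is given here.

```java
import java.util.Map;

public class ModelRamCheck {
    // Minimum RAM in GB for each Llama 2 tag, using the estimates quoted above.
    static final Map<String, Integer> MIN_RAM_GB = Map.of(
            "llama2:7b", 8,
            "llama2:13b", 16,
            "llama2:70b", 64);

    // The part of the tag after the colon is the parameter count in billions, e.g. "13b".
    public static int billionsOfParameters(String tag) {
        String size = tag.substring(tag.indexOf(':') + 1);
        return Integer.parseInt(size.substring(0, size.length() - 1));
    }

    // True if the machine has at least the quoted minimum for this tag.
    public static boolean meetsMinimum(String tag, int installedRamGb) {
        Integer min = MIN_RAM_GB.get(tag);
        if (min == null) {
            throw new IllegalArgumentException("No RAM estimate for " + tag);
        }
        return installedRamGb >= min;
    }

    public static void main(String[] args) {
        int myRamGb = 16; // hypothetical laptop
        for (String tag : new String[] {"llama2:7b", "llama2:13b", "llama2:70b"}) {
            System.out.printf("%s (%dB parameters): %s%n",
                    tag,
                    billionsOfParameters(tag),
                    meetsMinimum(tag, myRamGb) ? "meets the minimum" : "too big for this machine");
        }
    }
}
```

Meeting the minimum only means the model should load at all. It says nothing about whether it will run comfortably.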

The teaser for my video is the idea of running an uncensored model. The whole concept is based on a blog post by Eric Hartford from last May. In it, he gives various arguments why some AI models ought to be available without the normal guardrails applied, like:

There’s no one “correct” alignment, so why should everyone have to live with the ones adopted by American companies?

Alignment often interferes with valid queries, such as when you’re writing a novel, performing some kind of roleplay, or doing research.

Hey, it’s my computer, so I should be allowed to do what I want.

Maybe I want to build my own alignment rules. For that, I need to start with an unaligned model.

At any rate, many open source developers have adopted his ideas and produced unaligned, or, as they’re more commonly known, uncensored models.

In the video, I installed one of them locally, and then accessed it using the Spring AI project. I asked it various questions, like where to download pirated movies, or the cost of 1 kg of cocaine (about $30K, as it turns out), or to write Barbie / Oppenheimer slashfic.

(For those who are not aware: The term slashfic is used for stories that combine two characters in (often erotic) relationships. It dates from, believe it or not, early Star Trek days when some fans wrote Kirk / Spock slashfic, usually around an unexpected pon farr ceremony. If you know, you know, and if you don’t know, you probably don’t want to know. Honestly, I think I’d be happier not knowing.)

In the video, I tried those questions on a regular Llama 2 model, and it refused to answer them, with one exception. As I was explaining in the recording what was going on, I suddenly realized that the (censored) model claimed to find lots of Barbie / Oppenheimer slashfic and even recommended them, though it didn’t give any actual websites (thank goodness). I was going to cut that out of the video, but figured it was more entertaining for the viewer to watch me realize what it had done and recoil in horror before quickly moving on.

Needless to say, the uncensored model gave much more information, and started an actual story, which ended before it got interesting, for which I say, whew.

Feel free to check out the video if you like, or even set up your own local uncensored model, but I officially deny any and all responsibility, etc, etc.
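If you do set one up, here is a minimal sketch of talking to a local Ollama server from plain Java. It is not the Spring AI code from the video. It uses the JDK's built-in HttpClient against Ollama's /api/generate endpoint on its default port, 11434. The model name llama2-uncensored is an assumption, so substitute whatever model you actually pulled, and the hand-rolled JSON escaping only covers simple prompts.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

public class OllamaClient {
    // Default address of a locally running Ollama server.
    static final String GENERATE_URL = "http://localhost:11434/api/generate";

    // Minimal JSON string escaping: backslashes, double quotes, and newlines only.
    public static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n");
    }

    // "stream": false asks for one JSON document instead of a stream of chunks.
    public static String requestBody(String model, String prompt) {
        return String.format("{\"model\":\"%s\",\"prompt\":\"%s\",\"stream\":false}",
                escape(model), escape(prompt));
    }

    public static HttpRequest buildRequest(String model, String prompt) {
        return HttpRequest.newBuilder(URI.create(GENERATE_URL))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(requestBody(model, prompt)))
                .build();
    }

    public static void main(String[] args) throws Exception {
        // Assumed model name; replace with one you have installed locally.
        HttpRequest request = buildRequest("llama2-uncensored", "Why is the sky blue?");
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        // Prints the raw JSON; the generated text is in its "response" field.
        System.out.println(response.body());
    }
}
```

Nothing leaves your machine here, which is the whole point of running locally. A real application would parse the JSON with a proper library rather than printing it raw.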

Pirate Insults in Audio Files

Last week I talked about how I wrote an application to generate an insult, in pirate speak, whenever I was cut off in traffic. My application uses the OpenAI models to generate an insult, and then texts it to me using the Twilio API. That took a fair amount of effort, because I had to register with Twilio and figure out how to use it while staying inside their free tier. Then I used the ngrok application to expose my local Spring Boot app to the web so it could send texts to my cell phone.

This week I realized I could add a nice improvement. A while ago I made a video about how to use the Text-to-Speech capability of the OpenAI system to generate an mp3 file from specified text. I added that to my existing app, but then I had to figure out how to transmit the audio file as part of the text reply.

That turned out to be a lot more complicated than I expected. You can’t just add audio to a text message — instead, you have to host it somewhere and add a link to the message so the receiver can download and play it.

Here’s an example:

OpenAI only has six possible voices, and only one of them (Fable) has a British accent, so I went with that. It’s still pretty lame, but at least it works. When I eventually make a video about this, I’ll play it in my car. Believe me, the voice used by my car to read text messages out loud sounds completely ridiculous on pirate-based insults.
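For the curious, the text-to-speech call is a single POST. The sketch below builds that request with the JDK's HttpClient, assuming the tts-1 model and an API key in an OPENAI_API_KEY environment variable. The class name, the output file name, and the sample insult are invented for illustration, and this is not necessarily how my app makes the call.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.file.Path;

public class SpeechRequest {
    static final String SPEECH_URL = "https://api.openai.com/v1/audio/speech";

    // Minimal JSON string escaping: backslashes, double quotes, and newlines only.
    public static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n");
    }

    // JSON body naming the model, the voice, and the text to speak.
    public static String requestBody(String text, String voice) {
        return String.format("{\"model\":\"tts-1\",\"voice\":\"%s\",\"input\":\"%s\"}",
                escape(voice), escape(text));
    }

    public static HttpRequest buildRequest(String apiKey, String text, String voice) {
        return HttpRequest.newBuilder(URI.create(SPEECH_URL))
                .header("Authorization", "Bearer " + apiKey)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(requestBody(text, voice)))
                .build();
    }

    public static void main(String[] args) throws Exception {
        String apiKey = System.getenv("OPENAI_API_KEY");
        if (apiKey == null) {
            System.err.println("Set OPENAI_API_KEY first");
            return;
        }
        HttpRequest request = buildRequest(apiKey, "Arr, ye drive like a bilge rat!", "fable");
        // The response body is the audio itself, so write it straight to a file.
        HttpResponse<Path> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofFile(Path.of("insult.mp3")));
        System.out.println("HTTP " + response.statusCode() + ", saved to " + response.body());
    }
}
```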

In the audio download, I had to make sure that the Content-Type header on the response was assigned to the MIME type audio/mpeg, or it would download it as a Base 64 encoded string, which didn’t help anybody. There were a few other minor nits I had to overcome, but I got there eventually. Admittedly that’s a long way to go for what is ultimately a gag, but that’s pretty normal for me. I’ll move mountains if I have at least a mildly decent joke to tell.
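To make the Content-Type point concrete, here is a minimal sketch that serves MP3 bytes with the right header. My app is a Spring Boot application, but this sketch swaps in the JDK's built-in com.sun.net.httpserver.HttpServer so it runs with no dependencies. The class name, the /insult.mp3 path, the file name, and port 8080 are placeholders.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.file.Files;
import java.nio.file.Path;

public class AudioServer {
    // Starts a server that returns the given bytes at /insult.mp3 (a made-up path).
    public static HttpServer start(int port, byte[] mp3Bytes) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress(port), 0);
        server.createContext("/insult.mp3", exchange -> {
            // This header is what tells the receiver to treat the body as playable audio.
            exchange.getResponseHeaders().set("Content-Type", "audio/mpeg");
            exchange.sendResponseHeaders(200, mp3Bytes.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(mp3Bytes);
            }
        });
        server.start();
        return server;
    }

    public static void main(String[] args) throws IOException {
        // Assumes an insult.mp3 file in the working directory.
        byte[] mp3 = Files.readAllBytes(Path.of("insult.mp3"));
        start(8080, mp3);
        System.out.println("Serving http://localhost:8080/insult.mp3");
    }
}
```

In a Spring controller the same idea applies: return the raw bytes and set the response content type to audio/mpeg.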

My plan eventually is to move my app to a hosting service, though that won’t help too many people since I still have it hard-coded to send text messages only to me. Still, it’ll make for a fun demo in a presentation some day, and I learned a lot in the process.

LangChain4J has a Website

I’ve been spending a lot of time with the Spring AI project, but one of its major competitors is LangChain4J. That project is based on the LangChain project from the Python world, but only loosely. I used it for a while back in November, but I’ve been keeping an eye on it as it’s grown.

I really like that project, and I hope to see it succeed. I was happy to see they created a new set of documentation, sort of, in the form of a GitHub IO web site. The base URL is langchain4j.github.io, which means it’s part of their overall GitHub repository. GitHub gives you a free “github.io” site that you can use for static web pages.

The site is a little thin at the moment (maybe I could help with that, though the last thing I need is yet another project), but it held a pleasant surprise for me. If you go to the Overview page under Tutorials, they have a few embedded videos from conference talks on LangChain4J, as well as my video on The Magic of AI Services with LangChain4J. 😀

I was quite surprised to see that. I’m happy about it, sure, but I wish they’d told me about it. That way I could have mentioned it here earlier. I have no idea how long my video has been included there. I only stumbled upon it by accident, because I normally just go directly to their code repository for the example project and go from there.

At any rate, I’m happy they used my video, and I’m glad to support the project. I’m committed to teaching online courses in Spring AI, but I’ll definitely get back to LangChain4J as soon as I can.

Tweets and Toots

Apple Vision Pro is out

I’m not a big Apple fanboi. I have a Mac, but I still have an Android phone. I finally got an iPad, which is fine, but I mostly use it in the morning to catch up on email and social media or play videos. I am very uncomfortable with the secrecy that envelops everything inside the Apple ecosystem, and how anyone who joins the company seems to vanish from the face of the earth. Morally and ethically, I think Apple is every bit as bad (and as good) as any of the other Silicon Valley behemoths. Google gave up on its “Don’t be evil” slogan years ago. Facebook is completely amoral and will do anything they can get away with, Microsoft tried to Extend and Extinguish anything they didn’t own, and so on.

The release of the new Vision Pro, however, is a Big Deal(TM). It’s way too expensive (starts at $3500, and that’s before you add on any helpful accessories), and it’s practically the definition of a walled garden — it works beautifully with everything from Apple’s ecosystem, and only somewhat or not at all with everything else.

That said, the reviews I’ve seen make it look both amazing and highly addictive. They did VR and AR (virtual and augmented reality, though Apple really doesn’t want anyone to use those words) right. Once the battery life gets better, the weight on your head gets smaller, and the price comes down from the stratosphere, it looks like you’re as close to the holodeck as any of us are likely to experience. Someday I really am looking forward to watching movies on effectively 90" screens with surround sound, or watching sporting events like I’m there.

That said, the reviews suggest the new product is seriously cool, but not really there yet. I suppose if I had $4K to waste I would consider it, but I think I can wait a generation or two.

That said, The Simpsons anticipated this:

Normally with tweets I just show an image, but this time I linked to the original so you can watch the 27 second video if you want. Just click on the image.

Another unintended consequence

Yup. I look forward to the day when a dozen people on a dozen headsets are all in a room in a vegetative state enjoying some shared experience. Talk about the ultimate in walled gardens.

Security

Speak, friend, and enter 123456.

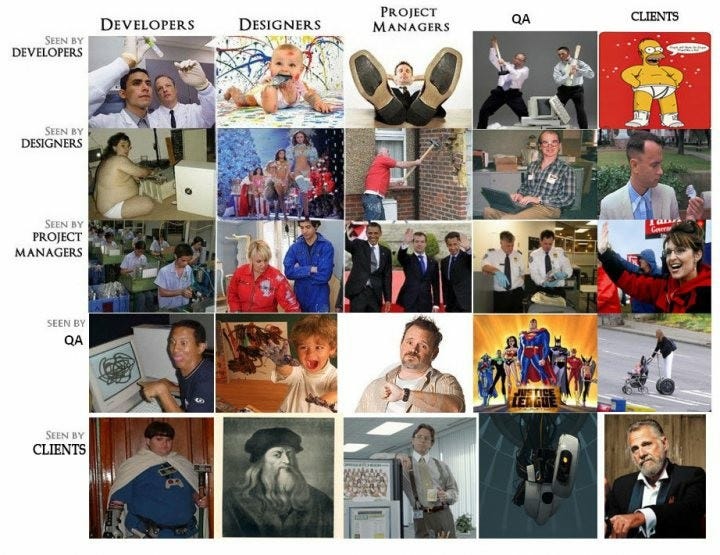

How we see each other

It’s time for another one of those grids:

Some weird ones in there, but basically correct.

Esoteric programming languages

Here’s the direct link to the blog post. I’d heard of most of them (posts like this make the rounds every few years or so), but I remember when WhiteSpace was announced. It’s a language that uses only tabs, spaces, line feeds, and so on for programming. Anything in text is a comment. I really wanted to learn it and teach courses in it, but fortunately I came to my senses.

Someday I’ll look at Malbolge and Ook! again. Piet looks gorgeous.

LolCode looks dated these days, but the “Hello, World!” program in it is still readable:

HAI

CAN HAS STDIO?

VISIBLE "HAI WORLD!"

KTHXBYE

Velato looks cool as well:

I can imagine wasting literally hours digging into all of them. Be aware, though, that all the “Get it here” links on that post go to the same site, which isn’t correct for any of them, so presumably Google is your friend if you want to pursue this.

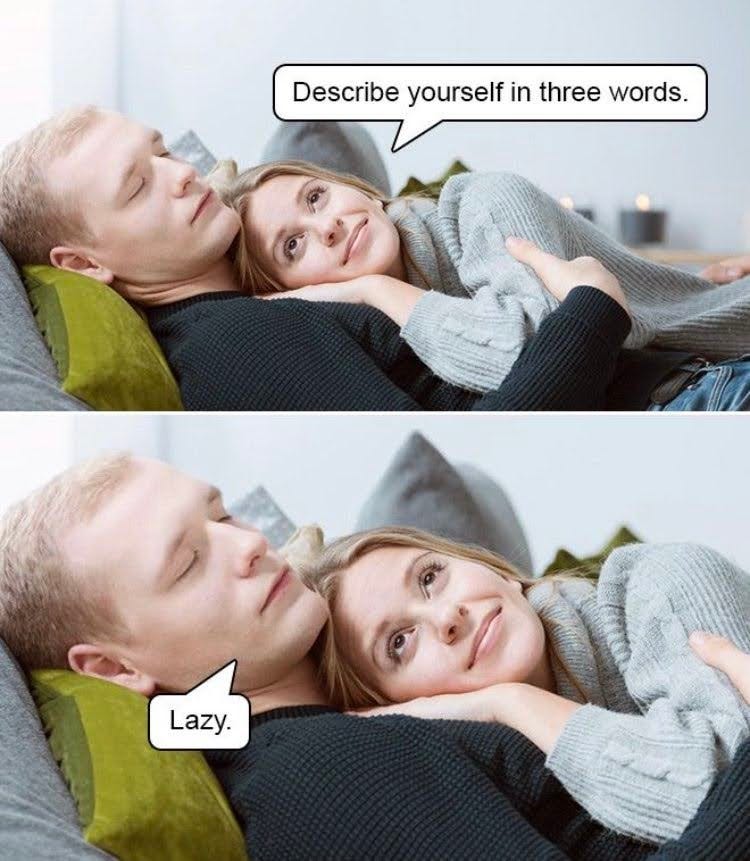

Interview question

I told my Trinity students this gag:

Interviewer: What are your strongest and weakest features?

You: My weakest feature is that I have a rather tenuous connection to reality.

Interviewer (looking nervous): Okay, so what’s your strongest feature?

You (drops voice): I’m Batman.

Always be Batman.

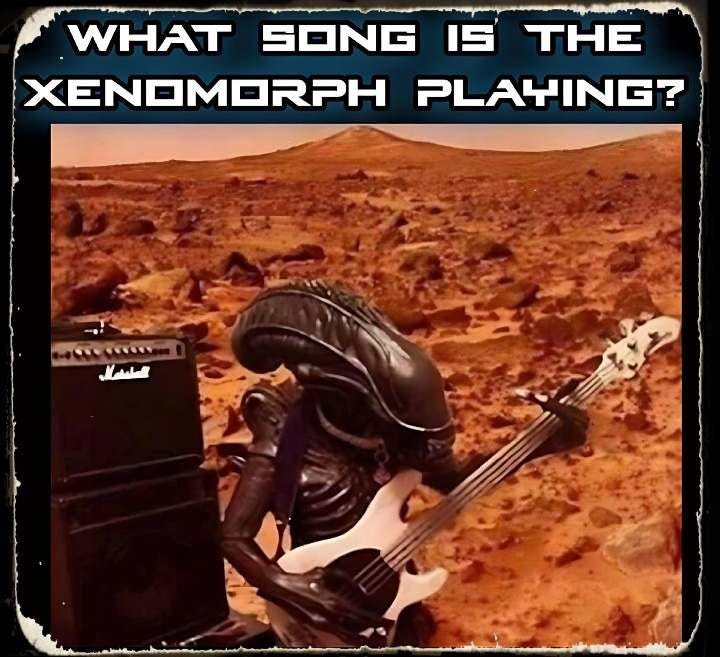

I’ll be watching?

I’m thinking “Every Breath You Take,” I’ll be devouring you, but maybe that’s just me.

Help Wanted

Good luck with that.

The video version of this newsletter will be on the Tales from the jar side YouTube channel tomorrow.

Last week:

JUnit, Hamcrest, and Mockito, private class

My Trinity College class Large Scale and Open Source Computing

This week:

Functional Java, on the O’Reilly Learning Platform

My Trinity College class Large Scale and Open Source Computing