Tales from the jar side: Tool support in LangChain4j, My Kotlin book in a Java bundle, Lunar landers, and the usual silly tweets and toots

Don't give up on your dreams! Go back to bed. (rimshot)

Welcome, fellow jarheads, to Tales from the jar side, the Kousen IT newsletter, for the week of February 18 - 25, 2024. This week I only had my regular Trinity College course on Large Scale and Open Source Development, where I was happy to host the inimitable Paul King as a guest speaker.

Here are the regular info messages:

Regular readers of, listeners to, and video viewers of this newsletter are affectionately known as jarheads, and are far more intelligent, sophisticated, and attractive than the average newsletter reader or listener or viewer. If you wish to become a jarhead, please subscribe using this button:

As a reminder, when this message is truncated in email, click on the title to open it in a browser tab formatted properly for both the web and mobile.

Tools in LangChain4j

I talked about this last week, and I planned to make a video about it, but I’m really glad I waited because I didn’t understand what I was doing. Fortunately, now I know better, and I was able to make a decent video about what I learned.

I was going to call that video “I didn’t get LangChain4j tools … until I did,” but in the end I went with “The Definitive Guide to Tool Support in LangChain4j”:

Last week I talked about using a Calculator class as a tool to do some calculations:

The overall job was to extract information about a Person record from a paragraph:

    Captain Picard was born in La Barre, France on Earth
    on the 13th of juillet, 281 years in the future.
    His given name, Jean-Luc, is of French origin. He and his brother
    Robert were raised in the family vineyard Chateau Picard.

The problem was that the AI tool then used my add and subtract methods randomly, sometimes calling them and sometimes not, and often using them with numbers pulled randomly from the text. It was a mess, and I was going to make a video describing how erratic it was.

Instead, I decided to dig into it further. After looking at the code, I found that the tool support in LangChain4j actually uses the Function calling section of the OpenAI docs, and that’s something I’d been wondering about for a while. My friend Craig Walls, who spends most of his time on the Spring AI project when dealing with these issues, has been telling me for weeks that he wanted Spring AI to adopt function calling, and I couldn’t understand why. It all looked so tedious, and if you look at the docs, the AI doesn’t even invoke the function — it just tells the caller to do it, with the proper arguments. What fun is that?

Once I realized what LangChain4j was doing, however, everything became much clearer. LangChain4j calls the function for you automatically. That’s the beauty of it. You don’t have to monitor the type of response, extract arguments, and call the function yourself, because the framework does it instead.

So why were my functions having so much trouble? I finally realized I wasn’t giving the AI enough information. Instead of adding a simple add method and a subtract method, I replaced it with a method specifically designed to compute the number of years from now:

    public class DateTimeTool {
        @Tool("Get the year for a given number of years from now")
        public int yearsFromNow(int years) {
            logger.info("Called yearsFromNow with years=" + years);
            return LocalDate.now().plusYears(years).getYear();
        }
    }

Now my method is called yearsFromNow, and its job as listed in the @Tool annotation is to “Get the year for a given number of years from now”.

Suddenly everything worked, exactly as it should:

    DateTimeTool - Called yearsFromNow with years=281
    Tool execution result: 2305
    Person[firstName=Jean-Luc, lastName=Picard, birthDate=2305-07-13]

And there you have it. I just had to be more specific.
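To make the "framework calls it for you" idea concrete, here is a minimal sketch of that kind of dispatch in plain Java. It is not LangChain4j's implementation: the `SketchTool` annotation, the `SketchDateTimeTool` and `ToolDispatchSketch` classes, and the fixed clock set to February 25, 2024 are all assumptions made up for illustration. The real framework also has to describe the tools to the model and parse the arguments out of the model's reply, which this sketch skips entirely.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.time.Clock;
import java.time.Instant;
import java.time.LocalDate;
import java.time.ZoneOffset;

// Hypothetical stand-in for a tool annotation; this is NOT LangChain4j's @Tool.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface SketchTool {
    String value();
}

// Shaped like the DateTimeTool above, but with an injectable Clock (an assumption)
// so the result does not depend on the day the code is run.
class SketchDateTimeTool {
    private final Clock clock;

    SketchDateTimeTool(Clock clock) {
        this.clock = clock;
    }

    @SketchTool("Get the year for a given number of years from now")
    public int yearsFromNow(int years) {
        return LocalDate.now(clock).plusYears(years).getYear();
    }
}

class ToolDispatchSketch {
    // Find the annotated method whose name matches the request and invoke it
    // with the supplied arguments, which is the step the caller no longer writes.
    static Object dispatch(Object tool, String requestedName, Object... args) {
        for (Method method : tool.getClass().getDeclaredMethods()) {
            if (method.isAnnotationPresent(SketchTool.class)
                    && method.getName().equals(requestedName)) {
                try {
                    return method.invoke(tool, args);
                } catch (ReflectiveOperationException e) {
                    throw new IllegalStateException("Tool call failed: " + requestedName, e);
                }
            }
        }
        throw new IllegalArgumentException("No tool named " + requestedName);
    }

    public static void main(String[] args) {
        // Pretend "now" is the week of this newsletter.
        Clock fixed = Clock.fixed(Instant.parse("2024-02-25T12:00:00Z"), ZoneOffset.UTC);
        // Pretend the model asked for yearsFromNow with years=281.
        Object result = dispatch(new SketchDateTimeTool(fixed), "yearsFromNow", 281);
        System.out.println("Tool execution result: " + result); // prints 2305
    }
}
```

The point of the sketch is only the division of labor: the model picks a method name and arguments, and something on the Java side looks up the matching method and runs it.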

Suddenly I “got” tools in LangChain4j. The idea is to give it specific methods to handle what AI models do poorly, like doing math or accessing remote web services, and be specific enough about the details that the model knows to call the tools at the right time.

I added a more elaborate example that accessed a restful web service containing currency exchange rates (the site is Open Exchange Rates). Again, that’s something the AI models can’t normally do. My class downloaded the exchange rates and added a method to convert prices from one currency to another:

    @Tool("Convert a monetary amount from one currency to another")
    public double convert(double amount, String from, String to) {
        logger.info("Converting {} from {} to {}", amount, from, to);
        return amount * rates.get(to) / rates.get(from);
    }

In order to test this, I opened a private window in my browser so I wouldn’t log in, then I accessed Amazon.com in several different countries around the world and searched for a Macbook Air. That resulted in this test:

    At the Amazon.com website in various countries, a Macbook Air costs
    718.25 GBP, 83,900 INR, 749.99 USD, and 177,980 JPY.
    Which is the best deal?

Not surprisingly, the best deal was in USD, but more importantly, the AI model called the convert method four times (including once unnecessarily, to convert USD into USD):

    Converting 718.25 from GBP to USD
    Tool execution result: 910.668867740324
    Converting 83900.0 from INR to USD
    Tool execution result: 1012.2831328172177
    Converting 749.99 from USD to USD
    Tool execution result: 749.99
    Converting 177980.0 from JPY to USD
    Tool execution result: 1182.7091351231416

    After converting the prices to USD, the Macbook Air costs:
    - £718.25 in GBP is approximately $910.67 USD
    - ₹83,900 in INR is approximately $1,012.28 USD
    - $749.99 USD remains the same
    - ¥177,980 in JPY is approximately $1,182.71 USD
    Therefore, the best deal for the Macbook Air is in USD at $749.99.

Sweet. The only performance penalty was in the initial download of the current exchange rates, and that was minimal. The rest is look-ups in a map and a couple of multiplications. The result is an AI where I can ask questions that use the tool automatically.
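For anyone curious about the arithmetic inside convert, here is a small standalone sketch. It assumes the rates map holds units of each currency per one unit of a base currency (USD here), which is what the formula in the convert method implies. The class name `CurrencyConversionSketch` and the rates themselves are made up for illustration and are not real market data.

```java
import java.util.Map;

class CurrencyConversionSketch {
    // Illustrative rates: units of each currency per 1 USD. NOT real market data.
    static final Map<String, Double> RATES = Map.of(
            "USD", 1.0,
            "GBP", 0.75,
            "INR", 80.0,
            "JPY", 150.0);

    // Same formula as the convert method above: divide by the source rate to get
    // to the base currency, then multiply by the target rate.
    static double convert(double amount, String from, String to) {
        return amount * RATES.get(to) / RATES.get(from);
    }

    public static void main(String[] args) {
        System.out.println(convert(75.0, "GBP", "USD"));    // prints 100.0
        System.out.println(convert(8000.0, "INR", "JPY"));  // prints 15000.0
        System.out.println(convert(749.99, "USD", "USD"));  // prints 749.99
    }
}
```

The last call shows why the model's extra USD-to-USD conversion was harmless: both rates are the same, so they cancel and the amount comes back unchanged.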

(I suppose the next step would be for the model to go over the web to look up the actual prices for me, and I assume that’s possible, but it probably involves a service I would have to pay for. Yeah, no. I just wanted to demonstrate that this was all doable, and it is.)

On a personal note, in this video I did all the recording inside Tella, which I really like but is rather limited. After exporting all the segments, I imported them into Screenflow, which is the editor I’ve been using the last year or so. That allowed me to replace the green screen background with a virtual image (everywhere except for the one brief spot I missed) and add text labels all over the place, as I am wont to do.

(Anyone who has watched my newsletter videos each week knows how much commentary I add in those labels.)

Screenflow also has an easy tool that lets me normalize the audio throughout, so that helps too. The result is … pretty good. I still need to work on it, and I may just go back to the regular green screen until Tella includes that automatically, but we’ll see.

If you’re interested in Tella, here is my affiliate link for you to check it out.

Adventures in Lunar engineering

Like so many people in IT, I was a career changer. My original degrees were in mechanical engineering and mathematics, and my graduate degrees (the first time) were in aerospace engineering. I worked as a research scientist for about 12 years before I went back to school for computer science.

Lest you think that makes me a rocket scientist, let me stop that right away. No, I was an airplane scientist. Specifically, I worked on computational and mathematical models for the aerodynamics inside of jet engines, which meant lots of math and lots and lots of Fortran.

(Shudder. The nightmares went away eventually, but it took a while.)

As a child of the late 60s and early 70s, of course I wanted to work for NASA and/or be an astronaut. Truth to tell, I actually wanted to go to Starfleet Academy, but that future persisted in not getting here, so I settled for the rest.

That means of course I’ve been following all the spacecraft that have been sent to the Moon over the past few months, culminating with the attempt this week by the company Intuitive Machines to land a craft on the Moon. Which they did, sort of. It landed, and like the Japanese craft a few weeks ago, tipped over onto its side. See this guest article at the New York Times for details.

Look, I get it. They’re spending about one tenth of one percent of the Apollo budget, so you have to expect problems. Also, low gravity is an issue. Centripetal acceleration is velocity squared divided by radius, and everybody knows the Moon’s gravitational acceleration is about 1/6th that of Earth. In other words, the inertial mass implies if it starts to tip, it’s going to be hard to stop, while the lower gravitational acceleration means it’s much harder to keep it upright once it starts going.

Still, you would think these companies might consider an alternative design:

Okay, sure, long way to go for that gag (literally), but hopefully it was worth it.

I think we’ve got five more attempts at lunar landers this year, so hopefully the others will have better luck.

Kotlin Cookbook

Apparently, a couple people noticed my Kotlin Cookbook recently. First, it got listed among the “Most Recommend Books” for Kotlin:

(I think I brought this up last week, but it’s still true.)

Then, this week I got a text notifying me it has been added to a Humble Bundle from O’Reilly Media:

O’Reilly knows I wrote an actual Java book, right? It’s called Modern Java Recipes, and I wrote it for them. Why then are they including my Kotlin book in a bundle of Java books?

Oh well. I should just be happy and not worry about it, and I will.

Tweets and Toots

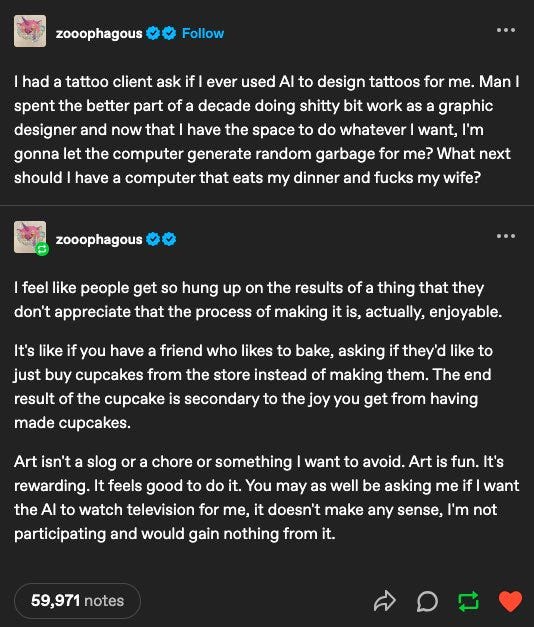

Watch TV for me?

That’s an excellent explanation of the joys of creation. The problem is that it glosses over the struggles. When I’m stuck, when the words or the music or the code won’t come, that’s when I could use a little AI assistance. Most of the time AI tools aren’t very helpful. They’re getting better, however. Who knows what they will do in the future?

The real problem for me is, how quickly will I be willing to lower my standards enough to accept what an AI tool provides? What if I’m tired, or under a deadline? At what point does AI-generated output become “good enough”?

I guess we’ll see.

Ask an image generator

That’s an old gag. To give it a twist, I went to DALL-E 3 and asked it to draw that, and here’s what it produced:

Apparently DALL-E 3 prohibits images based on copyrighted song lyrics. Go figure.

I decided to try this instead, from the Tales from the jar side theme song (K-pop version) I use at the end of most of my videos now. I assured the generator that these lyrics are NOT copyrighted. (In reality, they were generated by GPT-4 about six months ago.)

Here’s the image it generated:

So … that happened. I think I’ll just back away slowly and leave it alone.

It’s a stable coin

You better believe I added that joke to my video described above when I used exchange rates.

Waiting for Oppenheimer

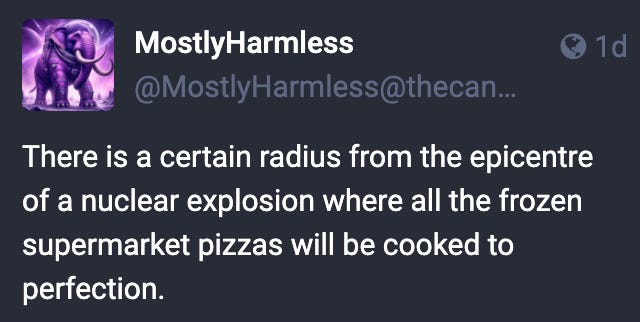

The Barbie movie has been out for weeks. I’m not sure why the Oppenheimer movie hasn’t yet made it to cable. To tide you over, here’s this observation:

The temperature will be right, but there may be other issues.

Music for Buzz Lightyear

What’s the buzz? Tell me what’s happening!

Liver and let die

Liver, favah beans, and a nice Chianti. Uh, make that “to go.”

Easter is coming

I was going to save that for a few more weeks, but it’s too good not to share.

Git stash

All it needs is a picture of a shiba inu.

Is this meme already too dated for me to bring it up in my Trinity class this week? I’ll let you know.

Beyond the pale

I feel like that every time I watch one of my own videos.

AI vs Cats

And finally:

Arrgghh, matey!

Have a great week, everybody!

The video version of this newsletter will be on the Tales from the jar side YouTube channel tomorrow.

Last week:

No classes, so I got the video on tool support in LangChain4j done. :)

My Trinity College (Hartford) class on Large Scale and Open Source Software is Tuesday night, with a guest lecture by the inimitable Paul King, head of the Apache Groovy project and all-around awesome dude.

This week:

Spring and Spring Boot, on the O’Reilly Learning Platform

Oppenheimer is on Peacock