Tales from the jar side: Silly Java, Google Thinking, OpenAI promises, Gukesh is champ, and the usual silly toots and skeets

It's officially time to think about Xmas shopping. Not yet time to start, but definitely time to think about it.

Welcome, fellow jarheads, to Tales from the jar side, the Kousen IT newsletter, for the week of December 15 - 22, 2024. This week I taught week 3 of my Spring in 3 Weeks course on the O’Reilly Learning Platform.

My Silliest Line of Java Ever

I’ve got a video planned that is going to show the silliest line of Java I’ve ever managed to write. It’s in a test case, and it works with Local Variable Type Inference. Since you’re kind enough to read this newsletter, I’ll spoil it for you now. I managed to make this test case work:

See line 8? Here it is again:

`var var = Var.var("var");`

I have to admit, that makes me chuckle. The test compiles and passes, too.
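The test itself isn't reproduced here. What follows is a hypothetical reconstruction of a `Var` class that would let that line compile; the author's actual class may look different. The trick works because, since Java 10, `var` is a reserved type name rather than a keyword. You can still use it as the name of a local variable, field, parameter, or method, but not as the name of a class. That's why the class has to be `Var` with a capital V.

```java
/**
 * Hypothetical reconstruction (not the author's code) of a class that makes
 * `var var = Var.var("var");` legal. Requires Java 10 or later.
 */
public class Var {
    // A field may be named "var": it is only restricted as a type name.
    private final String var;

    private Var(String var) {
        this.var = var;
    }

    // A static factory method may also be named "var".
    public static Var var(String var) {
        return new Var(var);
    }

    public String getVar() {
        return var;
    }

    public static void main(String[] args) {
        // Inferred type Var, local variable named var, method named var, argument "var".
        var var = Var.var("var");
        System.out.println(var.getVar()); // prints "var"
    }
}
```

Try renaming the class to lowercase `var`, and the compiler rejects it, because `var` is not allowed as a type name.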

Expect a silly video about it this week.

AI Announcements

Google Thinking Mode

There’s a lot of talk in the AI world about how AI models are hitting a limit in how much they can learn merely by scaling up the data. A lot of that is blamed on a lack of data — once you’ve trained on pretty much the whole internet, what else can you learn? Many companies are trying to generate “synthetic” data, which seems promising in limited areas. It’s hard to know how much you can gain from efforts like that.

Two other approaches were announced this week. Google released its Gemini 2.0 model, though it emphasized it’s only experimental for now. While it’s supposed to be faster and better than its predecessor, I’m not sure by how much. It also accepts true multi-modal inputs, meaning you can send it text, images, and audio together. Eventually you’ll be able to get text, images, and audio out as well, but only text is available right now.

My own experiments show I can access it, but again, I’m not sure what makes it all that much better than before. It supposedly ties into various Google apps, like Maps and Search, but I haven’t done that yet.

They also play up its “agentic” qualities, which means in principle it can go beyond just telling you what to do and actually do it for you. I’m way less enthusiastic about that idea than the AI vendors seem to think I’ll be. I don’t trust the AI tools not to mess things up, and I certainly don’t want them to do so on my authority and with access to my credit card number. As much as the vendors insist this is the future, I’m willing to wait a bit longer for that to get here.

The other feature Google enabled was a “thinking” mode for its Gemini 2.0 model, which means you can see its thought process as it reasons its way to an answer. I’m sure that’s very helpful for the Gemini developers, and it’s probably useful for debugging, but I don’t see how that’s going to affect my life very much, at least not directly.

OpenAI makes more promises

Meanwhile, OpenAI did what they always do: they tried to upstage any announcement by any other vendor with one of their own. The big one this time, out of their “12 days of OpenAI” (which I again found rather underwhelming), was the upcoming o3 model.

You may vaguely recall their o1 model, which used reinforcement learning to improve the model’s solutions to difficult problems. The idea is to do lots of extra post-processing of multiple answers, in an effort to iterate on them and get better results. I tried it, but apparently I don’t work on many problems suited to it, so I just found it overly expensive and slow. I also remarked at the time that o1 is a terrible name, because the obvious next version would be o2, which is, after all, the chemical formula for oxygen, if you ignore case. Besides, do you want to name your product after a letter that looks like a zero?

Apparently, there’s a British telecom provider named O2, and the name is trademarked, so that wasn’t available. Therefore OpenAI jumped to o3 instead. They announced o3 with great fanfare, showing how it solved tons of problems and won all the benchmark tests, but (and here’s the key part that is so typical of OpenAI) you can’t have it yet. In other words, despite the breathless reporting of many in the press, everything about o3 is vaporware at this point. Given how OpenAI’s Sam Altman lies constantly, even when he actually knows what he’s talking about, it’s hard to have any faith in anything they say.

To be fair, at least Sam Altman is not Elon, but that is an incredibly low bar. Any time I hear either of them, I simply assume they are trying to manipulate their audiences with any content they think they can sell. I immediately discount anything they say for that reason (among others).

OpenAI has promised an o3-mini model by the end of January, and a full o3 shortly thereafter. They implied that they’ll release o4 and o5 models after that at intervals of roughly three or four months.

Yeah, yeah. I’ll believe it when I see it.

Model Context Protocol

On the other hand, Anthropic introduced something called the Model Context Protocol. The idea there is that it’s a standard way to plug your own applications into an AI model. Again the motivation is agents, so you can give your own app to Claude (Anthropic’s model of choice) and let it take actions through apps you provide.

This gets more into the weeds of how to implement an agent rather than trying to sell the whole idea. I went through one of their tutorials, which shows you how to let Claude access a local SQLite database on your own machine. That was interesting, though the whole API is pretty awkward at this point.
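For a sense of what MCP looks like on the wire: its messages are JSON-RPC 2.0, and a client asks a server to run one of the server's tools with a `tools/call` request. Here is a minimal sketch that builds such a request by hand in plain Java, with no JSON library. The class and method names, the tool name `read_query`, and its `query` argument are hypothetical placeholders, not copied from Anthropic's tutorial or any official SDK. A real client would also perform the protocol's `initialize` handshake first and then read the server's response.

```java
/**
 * Hypothetical sketch: builds the JSON-RPC 2.0 "tools/call" request an MCP
 * client might send to a server. The tool name and argument used in main()
 * are illustrative assumptions only.
 */
public class McpToolCall {

    // Minimal JSON string escaping: backslashes first, then quotes and newlines.
    static String escape(String s) {
        return s.replace("\\", "\\\\")
                .replace("\"", "\\\"")
                .replace("\n", "\\n");
    }

    // Builds one single-line JSON-RPC request asking the server to run the named tool.
    static String toolCallRequest(int id, String toolName, String argName, String argValue) {
        return String.format(
                "{\"jsonrpc\":\"2.0\",\"id\":%d,\"method\":\"tools/call\","
                        + "\"params\":{\"name\":\"%s\",\"arguments\":{\"%s\":\"%s\"}}}",
                id, escape(toolName), escape(argName), escape(argValue));
    }

    public static void main(String[] args) {
        // Hypothetical tool and SQL query, for illustration only.
        String request = toolCallRequest(1, "read_query", "query", "SELECT * FROM products");
        System.out.println(request);
    }
}
```

Over MCP's stdio transport, messages are newline-delimited, which is why the sketch escapes embedded newlines. A client would write each request as a single line to the server process's standard input.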

The Spring AI people jumped in right away and showed how to use the MCP protocol inside a Spring AI application. That is remarkably fast for them. Their demos also include accessing a SQLite database, as well as the file system, but that’s probably because they were building on top of Anthropic’s demos.

Now that my semester is over, I’ll have to take a look at that and see where it’s headed.

OpenAI has an official Java API

Another announcement from OpenAI is that now they actually have an official Java API. It’s contained in a GitHub repository, and once again it’s really early. They even list it as alpha, with the current version being 0.8.1, which I couldn’t get to work. I had to back up to 0.8.0 to find it.

It’s okay, more or less. I mean, all the necessary classes are there, and it appears to handle everything from text to image generation to audio to fine-tuning, but it’s really verbose and awkward.

I was going to include their basic example here, but it’s the kind of coding that gives Java developers a bad name. I mean, what’s up with ChatCompletionCreateParams.builder() and ChatCompletionUserMessageParam.Role.USER and even ChatCompletionUserMessageParam.Content.ofTextContent(…) all in a basic “Hello, World” program?

Yeah, no. I’ll stick with either LangChain4j or Spring AI. Sure, they can be verbose, too, but both of them have already mapped the AI models into Java and are much farther along than this.

The amusing part is that their “official” Java API apparently comes from an effort to port their Kotlin API to Java. I had no idea they had an official Kotlin API. I’ll have to look for that when I get a chance.

The King Is Dead, All Hail The King

Gukesh, at age 18, is now the 18th World Chess Champion. The match was far more interesting than most people thought it was going to be, given former world champion Ding Liren’s recent performance. Clearly the title has been hard on Ding during the year and a half or so he held it. Still, he put up a strong defense, and the match was very close.

Both players made inaccuracies as they went along, but Gukesh consistently pushed for more and Ding just as consistently kept trying to simplify into drawish positions. In the end, in the final game of the match, Ding tried too hard to simplify and made a blunder, and Gukesh won it all.

I enjoyed all the games, which I followed via GM Daniel King’s PowerPlay Chess YouTube channel.

Congratulations to the new champ. Long may he reign, though we all know Magnus Carlsen is still the best player in the world. :)

Tweets and Toots

Is it, though?

At least we can say we’ve now passed the Winter Solstice, and the days will be getting longer.

Brace yourself

Yup, I think we’re all like that this year.

Already chaotic

I imagine by now you’ve seen the meme that goes, “I never thought the leopard would eat MY face!” says the person who voted for the Leopards Eating People’s Faces Party. The chaos among them has already begun. It’s only going to get uglier from here.

Lots of Xmas Gags

’Tis the season, so here we go.

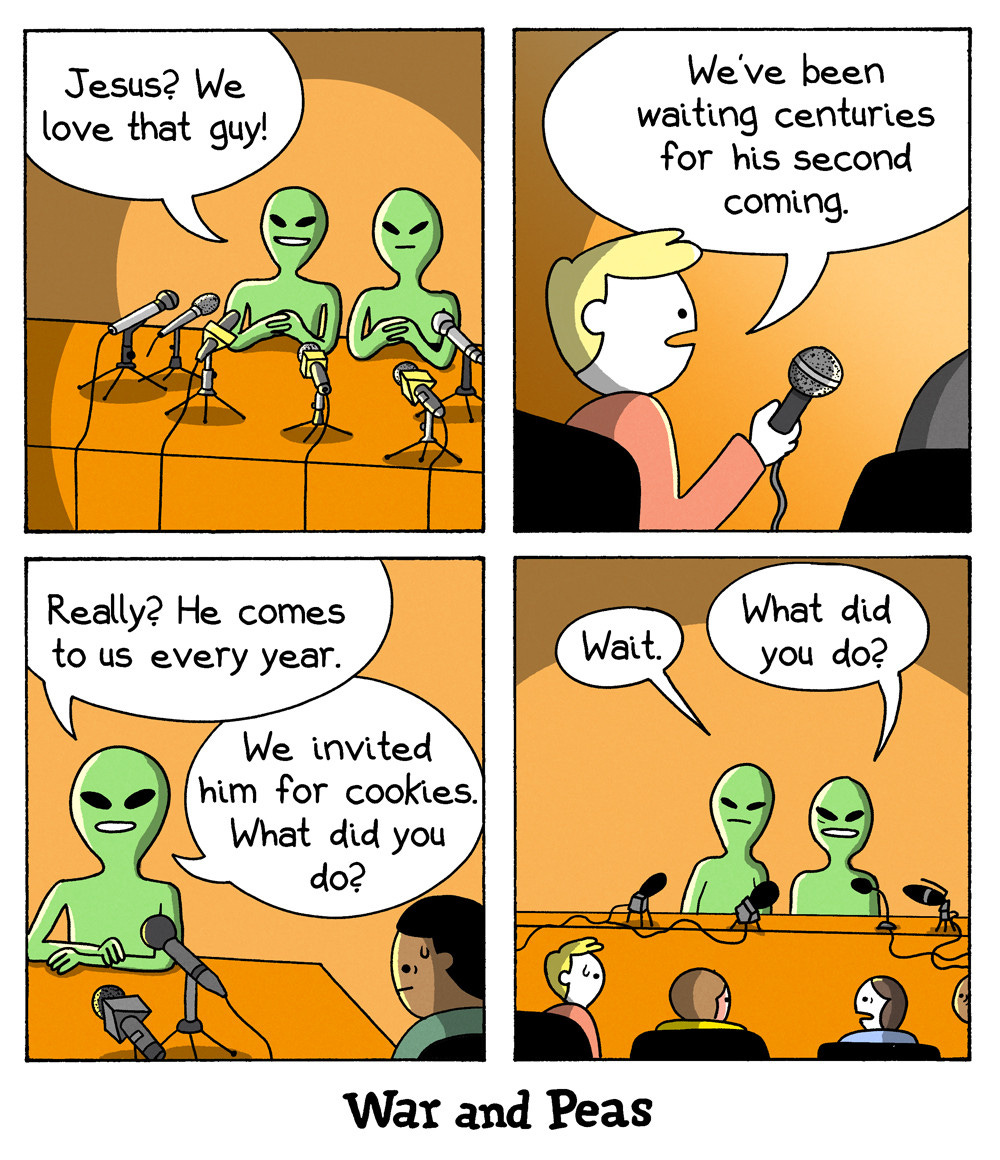

Indeed. Don’t we all.

What indeed.

Yeah, explain away that one, Clarence. I don’t think a flaming rum punch is going to do it.

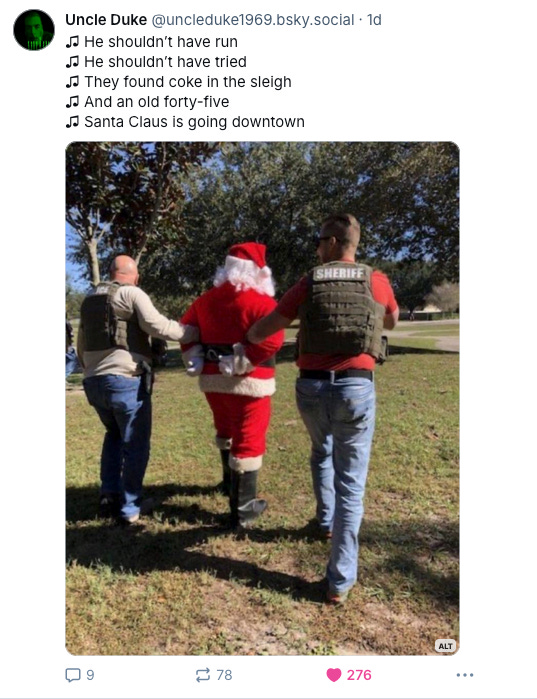

That’s definitely how that song should have gone. Continuing the musical theme:

That’s a twist. Here’s a variation:

This is a bit too relatable right now:

And finally:

Have a great week, everybody!

Last week:

Week 3 of Spring in 3 Weeks, on the O’Reilly Learning Platform

Grading my courses from Trinity College

This week:

Not much. Probably a video or two, but I’m actually going to try to take a break.