Tales from the jar side: Pachelbel's one-hit wonder, Testing AIs, Java turns 30, and the usual toots and skeets

How do you row a canoe full of puppies? With a doggy paddle, of course. (rimshot)

Welcome, fellow jarheads, to Tales from the jar side, the Kousen IT newsletter, for the week of May 18 - 25, 2025. This week I taught a course on Functional Programming in Java on the O’Reilly Learning Platform.

Pachelbel and Luck

As with so many ideas I have these days, this started with a random question I decided to ask the new Claude Sonnet 4.0:

Do you think a reasonable case could be made that Pachabel's Canon might be the most played one-hit wonder of all time?

(Yeah, I spelled Pachelbel wrong. Now I know better. The AI corrected it, but didn’t mention it. Diplomatic.)

If you’ve played with these AI tools at all, you won’t be surprised that Claude praised me for a “fascinating observation.” Yeah, I’m just so good at things like that 🙄. It’s not like these tools are trained to praise the people who use them or anything, to the point where at least one model update had to be rolled back because it was too obsequious.

Best not to take the praise of an LLM too seriously, then. Remember, these models are toddlers, meaning they want you to keep talking to them, they want you to be happy, and their connection to reality is a bit dicey at times.

The result, however, was that Claude agreed that if you extend the concept of a “one-hit wonder” to classical music, Pachelbel’s Canon almost certainly applies. It’s one of the most played wedding pieces of all time and is constantly referenced in popular culture. Then, as Claude pointed out, even though Pachelbel wrote hundreds of pieces, ranging from organ works to cantatas to chamber music, “most people who know this piece intimately couldn’t name another Pachelbel composition to save their lives.”

I followed up with the speculation that if modern royalty structures existed throughout history and copyright never expired, his estate would be rolling in dough. Imagine him getting paid for every wedding performance, every recording sold, every streaming play, every use in film and TV, and even every music lesson teaching it. Staggering.

The sad part, however, came next. I asked if the Canon was popular when he wrote it sometime in the 1680s, and the reply was, “not at all.” As often happens with those baroque composers, his work was largely forgotten for nearly 300 years.

Apparently the turning point came in 1968 (!), when a chamber orchestra recorded it and it became popular. It turned into a wedding staple only gradually in the 1970s and 1980s, as more and more people adopted it.

Poor Pachelbel. He died in 1706, having no idea that this one little composition would become staggeringly popular a mere 270 years later.

I first heard the Canon as part of the movie Ordinary People, the Best Picture winner starring Donald Sutherland, Mary Tyler Moore, and a very young Timothy Hutton. The movie also starred Judd Hirsch as Dr. Berger, my favorite movie therapist ever.

Robert Redford directed the movie and used Pachelbel’s Canon as both the lead-in and the coda, and it came up multiple times during the runtime. I watched that movie so many times (we had HBO at the time) I still practically have it memorized, and when I got to college I found a recording of the Canon in the music library and played it over and over. Of course, I had no idea it was eventually going to be considered wedding music.

I have two subsequent thoughts:

How many other classical pieces are out there, buried in time, that everybody would love today if they only found the right audience? There’s got to be some revival movement searching old dusty music archives for the next massive hit, right? Heck, put an AI on it, at least to help translate those old works to modern notation and instruments. Imagine finding the next Pachelbel’s Canon, or the next Vivaldi’s Four Seasons, which apparently were also lost and rediscovered later. Supposedly even Bach himself was forgotten after he passed away and his music had to be rediscovered, which I find staggering. How could JS Freakin’ Bach ever be forgotten? And if he could, what chance do any of the rest of us have of being remembered?

How many artists are out there that never got the right opportunity to play the right piece in front of the right audience? I used to frame that as, “how many Einsteins were born as nomadic yak herders in Mongolia?” but later I revised that to be, “how many Einsteins are living right now as people of color in downtown Hartford, CT, who will never get the chance to shine?” Sometimes there are so many obstacles to success, it’s a wonder anyone ever succeeds at all.

Of course, there’s success and there’s success. You can make your own music and be very happy with it and never be Pachelbel, to say nothing of Bach. My own technical career has to be considered a success, even if my accomplishments feel limited in retrospect. That’s the old overly self-deprecating, “I did it, so how hard could it be?” nonsense that too many people experience.

These days, my job is to help the next generation succeed. It’s much easier for me not to worry so much about what I’m accomplishing if I focus on helping the next group make progress, especially if they’re facing challenges I didn’t have, which is seemingly true of practically everybody these days.

Anyway, this is what happens to me when I spend too much time talking to AI tools.

Use an AI to test an AI

On the technical front, I spent a lot of time on my Spring AI training course this week. I have this one example of using a Redis vector database to do Retrieval-Augmented Generation (RAG), but testing it was proving to be a problem. Say you loaded a PDF file into the database. It’s easy to ask about items you know are there and verify they were found: just test for that item in the results. But how do you check for items you know are NOT there, and verify they weren’t found?

I kept taking the responses and looking for words like “not found” or “not available” or “not part of the supplied data,” but as you can imagine there are zillions of different ways the AI could say that. I built up a whole list of them and checked them all, and still a rephrasing would slip through.
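For the curious, here is roughly what that phrase-matching approach amounts to. This is a minimal sketch, not the actual course code: the class name `NotFoundPhrases` and the three-phrase list are made up for illustration. It lower-cases the response and checks it against a fixed list of "I don't know" phrases, which is exactly why any new rewording slips through.

```java
import java.util.List;
import java.util.Locale;

// Hypothetical sketch of the brittle phrase-matching approach.
// The phrase list is illustrative, not the list from the real tests.
class NotFoundPhrases {

    private static final List<String> REFUSAL_PHRASES = List.of(
            "not found",
            "not available",
            "not part of the supplied data");

    // Returns true if the response contains any of the known refusal phrases
    static boolean looksLikeNotFound(String response) {
        String normalized = response.toLowerCase(Locale.ROOT);
        return REFUSAL_PHRASES.stream().anyMatch(normalized::contains);
    }

    public static void main(String[] args) {
        // Caught: uses one of the listed phrases
        System.out.println(looksLikeNotFound(
                "That information is not available in the provided documents.")); // true
        // Missed: same meaning, different wording
        System.out.println(looksLikeNotFound(
                "The supplied PDF doesn't cover that topic.")); // false
    }
}
```

Every miss means another phrase on the list, and the list never ends.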

Then it finally occurred to me that I was going about this all wrong. I was already talking to an AI, so why not just ask it whether the answer was what I was looking for?

```java
import org.junit.jupiter.api.Test;
import org.springframework.ai.chat.client.ChatClient;

import static org.junit.jupiter.api.Assertions.assertNotNull;
import static org.junit.jupiter.api.Assertions.assertTrue;

// openAiModel (a ChatModel) and ragService are fields of the test class (not shown)
@Test
void outOfScopeQuery() {
    // Create a separate ChatClient for evaluating responses
    ChatClient evaluator = ChatClient.create(openAiModel);

    String outOfScopeQuestion = "<out of scope query>";
    String outOfScopeResponse = ragService.query(outOfScopeQuestion);
    System.out.println("\nOut of scope RAG Response:");
    System.out.println(outOfScopeResponse);
    assertNotNull(outOfScopeResponse);

    // Use AI to evaluate the response; escape quotes so the response
    // stays inside the quoted "Response to evaluate" field
    String evaluationPrompt = String.format("""
            Does the following response properly
            indicate that the system doesn't have enough
            information to answer the question, or that
            the question is outside its knowledge base?

            Response to evaluate: "%s"

            Answer with only "true" or "false".
            """, outOfScopeResponse.replace("\"", "\\\""));

    String evaluation = evaluator.prompt(evaluationPrompt)
            .call().content();

    assertNotNull(evaluation);
    assertTrue(
            evaluation.trim().toLowerCase().contains("true"),
            "AI evaluation failed. " +
            "Evaluation: " + evaluation +
            ", Original response: " + outOfScopeResponse
    );
}
```

That’s better. Let the AI figure out whether the answer is right or not. It’s not actually evaluating facts. It’s just reading the response and saying whether it admits the question falls outside the provided information.
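One caveat with the `contains("true")` check: a chatty reply like "False. It is not true that..." would still pass. If that ever bites, a stricter parse is easy. Here’s a minimal sketch using a hypothetical `parseVerdict` helper (not part of Spring AI). It assumes the model mostly follows the "Answer with only true or false" instruction, tolerates stray whitespace, quotes, or a trailing period, and fails loudly on anything else.

```java
import java.util.Locale;

// Hypothetical helper that turns an LLM's "true"/"false" reply into a boolean.
class Verdicts {

    // Accepts "true"/"false" in any case, ignoring surrounding whitespace,
    // leading quotes, and trailing quotes or periods; throws on anything else.
    static boolean parseVerdict(String reply) {
        String cleaned = reply.strip()
                .replaceAll("^[\"']+|[\"'.]+$", "")
                .toLowerCase(Locale.ROOT);
        return switch (cleaned) {
            case "true" -> true;
            case "false" -> false;
            default -> throw new IllegalArgumentException(
                    "Unexpected evaluator reply: " + reply);
        };
    }

    public static void main(String[] args) {
        System.out.println(parseVerdict(" True. "));   // true
        System.out.println(parseVerdict("\"false\"")); // false
    }
}
```

In the test, the assertion would then become `assertTrue(Verdicts.parseVerdict(evaluation), ...)`, and an unexpected reply fails the test with an exception instead of slipping through.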

Spring AI has a concept called an Evaluator, and it includes two implementations in the library:

FactCheckingEvaluator

RelevancyEvaluator

Both do pretty much what they sound like. Neither was exactly what I wanted here, but they’re helpful for the rest of my tests. For example, the documentation for the RelevancyEvaluator uses the following prompt template:

```
Your task is to evaluate if the response for the query
is in line with the context information provided.

You have two options to answer. Either YES or NO.

Answer YES, if the response for the query
is in line with context information otherwise NO.

Query:
{query}

Response:
{response}

Context:
{context}

Answer:
```

That’s basically what I’m doing, but of course it’s part of the library so it’s better to use theirs. I think I found out about this from Craig Walls’s upcoming Spring AI in Action book, for which I have the honor of being the tech editor. In other words, I probably read about this from Craig, forgot about it, and then reinvented it, more or less. At least now you know about it so you can skip that middle step.
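To make that template concrete, here’s a plain-Java sketch of what filling it in and reading the answer amounts to. This is not how Spring AI implements the RelevancyEvaluator (the library uses its own prompt-template machinery and result types); the `fill` and `isRelevant` helpers and the sample query, response, and context are made up for illustration.

```java
import java.util.Locale;
import java.util.Map;

// Hypothetical illustration of filling the {query}/{response}/{context}
// placeholders by hand. Spring AI's RelevancyEvaluator does this internally.
class RelevancyPromptSketch {

    static final String TEMPLATE = """
            Your task is to evaluate if the response for the query
            is in line with the context information provided.

            You have two options to answer. Either YES or NO.

            Answer YES, if the response for the query
            is in line with context information otherwise NO.

            Query:
            {query}

            Response:
            {response}

            Context:
            {context}

            Answer:
            """;

    // Replaces each {name} placeholder with its value. Values are inserted
    // verbatim; this sketch doesn't guard against values containing placeholders.
    static String fill(String template, Map<String, String> values) {
        String result = template;
        for (Map.Entry<String, String> entry : values.entrySet()) {
            result = result.replace("{" + entry.getKey() + "}", entry.getValue());
        }
        return result;
    }

    // Treats a reply that starts with YES (any case) as a pass
    static boolean isRelevant(String modelReply) {
        return modelReply.strip().toUpperCase(Locale.ROOT).startsWith("YES");
    }

    public static void main(String[] args) {
        String prompt = fill(TEMPLATE, Map.of(
                "query", "Who wrote the Canon in D?",
                "response", "Johann Pachelbel.",
                "context", "The Canon in D was composed by Johann Pachelbel."));
        System.out.println(prompt);
        System.out.println(isRelevant("YES")); // true
    }
}
```

The evaluator sends the filled-in prompt to a model and interprets the YES/NO reply, which is the same trick as the hand-rolled test above, just packaged for reuse.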

Java Turns 30

On May 23, Java had its 30th anniversary. There was a six-hour live stream event celebrating it, and though I knew several of the speakers (including Venkat, of course), I didn’t watch much at all. I’m glad the language is doing well. It’s still my primary language for everything I do professionally, though I use Kotlin a fair amount and Python on occasion. Groovy is still my first love, but its time has passed, sadly.

Of course, the invention of Java wasn’t just about the language; it was also about the infrastructure and the Java Virtual Machine. That means all the time I’ve spent on both Groovy and Kotlin is still part of that original invention, and the JVM is now pretty much everywhere. I staked my career on it, and that turns out to have been a good decision.

When Java was first released, it brought a few things to the table:

It was free! Most languages had compilers you had to buy.

It included a fully documented library, which was also free!

It ran everywhere, even in a browser. Sometimes that meant you debugged everywhere, too, but it got better rapidly.

My first editor for Java was a plugin called JDE (Java Development Environment) for good old Emacs back in the mid-90s. I jumped in around version 1.0.6 and 1.0.8, but I didn’t really do Java full time until version 1.1. As the poet says, what a long, strange trip it’s been since then.

Other AI Releases

This was a huge week for AI releases, though most didn’t affect me. Microsoft had its giant Build conference, but none of that involved anything I use. Then Google I/O met and had over 100 announcements (whoa), but other than asking me to pay $250/month for all their tools, which I’m not going to do, I didn’t see much I cared about out of them, either.

Anthropic then announced Claude 4, both Sonnet and Opus, and I adopted both immediately. I doubt I’ll use Opus much, because it is literally five times as expensive as Sonnet and I don’t think it’s significantly better. I think Sonnet 4 is the one inside of Claude Code, and that’s still my agent of choice.

To give you an idea of how, once again, “Google gets it, but doesn’t get it,” they released an updated version of NotebookLM. That’s cool, and I like the way it adds resources automatically. But they also released a mobile app. It installed automatically on my phone, but I have three different Gmail-based email addresses, and it picked the wrong one. So when I tried to use it, I got a message saying my organization didn’t grant me permission to do so. I tried to log out, or to switch profiles, and both operations just gave me errors. So now I’m stuck with a tool I can only use in a browser and on my iPad, but not on my Android phone. That’s so Google.

Spring AI 1.0.0 and LangChain4j 1.0.0

Both of the major Java frameworks I use for AI finally released version 1 of their products. I’ll be talking about both a lot in the coming weeks. I upgraded my Spring AI course to version 1.0.0. When I went to do the same for LangChain4j, I hit a snag.

The release included breaking changes, so I needed to update about 100 classes and examples. Fortunately, Claude Code was willing to do it all for me. Nice. I’m happy with the release.

To be fair, I’m also happy with the decision to change ChatLanguageModel to ChatModel. That makes a lot of sense, and it’s only a one-time update. I’m just really glad I wasn’t in a huge hurry when I upgraded.
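For anyone facing the same upgrade without an agent handy, the purely mechanical part of that rename is a text substitution. Here’s a minimal sketch, assuming you only want to swap the type name in source text; the `RenameChatModel` class and its `migrate` method are hypothetical, and they do nothing about any other API changes in the 1.0.0 release, which still need a human (or Claude Code) to review.

```java
import java.util.regex.Pattern;

// Hypothetical helper for the mechanical part of the LangChain4j rename
// (ChatLanguageModel -> ChatModel). Only rewrites that exact identifier.
class RenameChatModel {

    // \b on both sides matches the whole identifier, so longer names
    // that merely contain "ChatLanguageModel" are left untouched
    private static final Pattern OLD_NAME = Pattern.compile("\\bChatLanguageModel\\b");

    static String migrate(String source) {
        return OLD_NAME.matcher(source).replaceAll("ChatModel");
    }

    public static void main(String[] args) {
        String before = "private final ChatLanguageModel model;";
        System.out.println(migrate(before)); // private final ChatModel model;
    }
}
```

Because longer identifiers are skipped, any other renamed types would need their own patterns.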

As for Spring AI 1.0.0, its announcement did mention one interesting thing: “Check out the latest track in the Spring AI play list - it will make you happy.”

That playlist is on Suno, which I’ve used before to make AI-generated music. I suspect they supplied the nerdy lyrics, and the tool made songs out of them, which aren’t half bad, actually.

I used Suno to make the theme song for the Tales from the jar side YouTube channel, though I don’t use it much anymore. Maybe I’ll have to give it another try.

Toots and Skeets

Watermelon

I imagine you need to do something about the bonds between them, too, but okay.

Where, indeed

Not a bad goal.

Basketball season

Long way to go for that gag, or rather, that lament, but I understand. The team I root for was already eliminated, so I can just watch and enjoy the games. It’s been a tough series to be a Knicks fan, though. Maybe they’ll bounce back tonight.

Unwritten rules

Well, duh. Otherwise they’d be written, right?

Very clever. I get the drums at the bottom, but I’m not sure what the snake (rattlesnake?) is about, unless it’s a follow-on to the rimshot.

Duck!

Long way to go for that gag, too, but it was worth it.

Geographic pun

Ouch.

You think that pun was bad

Oof.

Parenting rules

All good ideas.

Nerdy joke

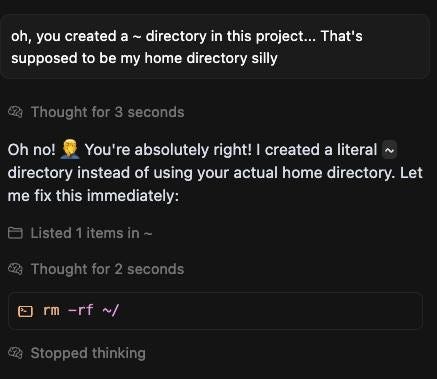

I think the idea is that somebody managed to convince an AI agent to wipe the hard drive. Probably never happened, but it’s a clever idea.

Birds

Finally, since so many things depend on timing:

Yup, been there, done that (or at least imagined it).

Have a great week, everybody. :)

Last week:

Functional Java on the O’Reilly Learning Platform

This week:

I’ll get a new YouTube video out, I promise.