Tales from the jar side: My talk at IntelliJ IDEA Conf, I get access to Gemini 1.5 Pro, More fun with AIs doing math, Pi and Perplexity AI, and Random thoughts

I like Quiet Tennis. It's like regular tennis, but without the racket. (rimshot)

Welcome, fellow jarheads, to Tales from the jar side, the Kousen IT newsletter, for the week of March 3 - 10, 2024. This week I taught a Spring and Spring Boot course on the O’Reilly Learning Platform and had my regular Trinity College course on Large Scale and Open Source Development.

Regular readers of, listeners to, and video viewers of this newsletter are affectionately known as jarheads, and are far more intelligent, sophisticated, and attractive than the average newsletter reader or listener or viewer. If you wish to become a jarhead, please subscribe using this button:

As a reminder, when this message is truncated in email, click on the title to open it in a browser tab formatted properly for both the web and mobile.

Presentation at IntelliJ IDEA Conf 2024

This week I spent an inordinate amount of time preparing for my talk at the online IntelliJ IDEA conference, and by preparing I mean worrying about and procrastinating over. I was invited to speak at that conference over a month ago, and the person who invited me (Hi, Mala!) asked me to talk about Java testing with JUnit and other libraries.

I called my talk Java Testing with JUnit, Mockito, and AssertJ, and in the end it went very well. Instead of driving myself crazy trying to figure out how to use some specialized plugins that I don’t normally use, I focused on some of the interesting features of all three libraries. That made for a better talk. I also had lots of examples, since I teach that stuff all the time.

Since the conference was using Streamyard as the online tool for broadcasting, and I already have an account there, they let me simultaneously live-stream my presentation on the Tales from the jar side (Tftjs) YouTube channel:

I renamed the video Expert Java Testing Strategies Revealed, which was suggested by an AI plugin. That’s a bit click-baity for my taste, but so be it. You’re welcome to take a look if you’re interested.

Gemini 1.5 Pro

I received an email from Google this week saying that I can now access their Gemini 1.5 Pro model, but only through their Google AI Studio interface. That’s the model that has a context window of about 1 million tokens, which corresponds to roughly 750,000 words of text. I gave that a try, with decidedly mixed results.

With a context window that large, I wanted to add one of my books and ask questions about it. I was able to upload my PDF copy of Mockito Made Clear, which used up about 36K tokens. Not bad, and not too surprising, given that it's in the Pragmatic Answers series and is therefore only about 70 pages long.

Unfortunately, after loading the book I wasn't able to type into the chat window until I hit enter. I have no idea why. Then, after doing so, it gave a brief summary of the book. That's fine. I tried to ask it about the author's writing style, but (and this is true) it started writing my questions backwards.

That’s supposed to be, “The first message in this conversation contains tat [sic] file.” Gemini complained that I was writing backwards, of course. I told it I didn’t mean to, and at random intervals it started going forwards again.

In the end, even though I eventually got it to answer some questions, the annoying interface was too distracting to be worth using. Supposedly, that Gemini version will soon be available through the REST API, at which point I'll try again.

More fun with AIs trying to do math

I mentioned last week I’m planning a video where I ask various AI models to solve simple math problems, and chaos ensues. What surprised me is how often they’re wrong when I simply ask them how many letters are in a given word.

For example, here's a recent run with the LLM known as wizard-math, which is specifically targeted at math questions. I'm using the 7b model, which is on the small side, and that tends to lead to trouble anyway.

$ ollama run wizard-math

>>> What is the sum of the number of letters each word in the sentence, "The quick brown fox jumped over the lazy dog"?

Let's think step by step. Firstly, we need to understand what the problem is asking for. It wants us to find the sum of the number of letters each word in the sentence, "The quick brown fox jumped over the lazy dog".

To solve this problem, we can follow these steps:

1. Split the sentence into individual words. The sentence "The quick brown fox jumped over the lazy dog" contains 9 words.

2. Count the number of letters in each word. This can be done manually or using a program. If done manually, it would look like this: The (4 letters), quick (5 letters), brown (5 letters), fox (4 letters), jumped (7 letters), over (4 letters), the (4 letters), lazy (5 letters), dog (4 letters).

3. Add up all these counts. If we add 4 + 5 + 5 + 4 + 7 + 4 + 4 + 5 + 4, we get a total of 46.

So, the sum of the number of letters each word in the sentence "The quick brown fox jumped over the lazy dog" is 46.

No, the answer is 36 (or 35 with the usual "jumps" instead of "jumped"; more on that below). You can see that it's already off as soon as it identifies the number of letters in the word The as 4. It also gets fox, jumped, the (again), lazy, and dog wrong, so there wasn't much chance it would get the overall answer right.
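For comparison, here's a minimal Java sketch that does the counting deterministically instead of probabilistically. The class and method names are my own, purely for illustration. It assumes a "letter" is anything `Character.isLetter` accepts and that words are separated by whitespace. Run against both versions of the sentence, it reports 36 letters for the "jumped" version and 35 for the classic "jumps" pangram.

```java
import java.util.Arrays;

// Hypothetical helper class, just to check the models' arithmetic
public class LetterCount {

    // Counts only alphabetic characters, so any punctuation is ignored
    static int letters(String word) {
        return (int) word.chars().filter(Character::isLetter).count();
    }

    // Splits the sentence on runs of whitespace and sums the letter counts
    static int totalLetters(String sentence) {
        return Arrays.stream(sentence.trim().split("\\s+"))
                .mapToInt(LetterCount::letters)
                .sum();
    }

    public static void main(String[] args) {
        String[] sentences = {
            "The quick brown fox jumped over the lazy dog",
            "The quick brown fox jumps over the lazy dog"
        };
        for (String sentence : sentences) {
            // Show each word with its length, the way the models tried to
            for (String word : sentence.trim().split("\\s+")) {
                System.out.printf("%s (%d) ", word, letters(word));
            }
            System.out.println("-> total: " + totalLetters(sentence));
        }
    }
}
```

No neural network required, and it gets the same answer every time.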

I've tried this example with several models. My favorite response is from when I ran it using codellama, which responded:

The word "The" has a length of one letter.

The word "quick" has a length of five letters.

The word "brown" has a length of five letters.

The word "fox" has a length of three letters.

The word "jumps" has a length of five letters.

The word "over" has a length of four letters.

The word "the" has a length of two letters in the second occurrence.

The word "lazy" has a length of four letters.

The word "dog" has a length of three letters.Therefore, the sum of the lengths of the words in the sentence is 35.

It got all the lengths right, except for the word the, which it got wrong twice (!). It thought the word the had 1 letter the first time and 2 letters the second time. Then somehow it added them all up and got the right answer (!!), even though the numbers it added should only have given it 32.

Wow. Good for it, I guess.
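Just to double-check my own arithmetic, here's a tiny Java sketch (again, the class name is mine, not anything from a library) that adds up the lengths codellama actually listed, alongside the correct lengths for the "jumps" sentence it used. The reported numbers sum to 32, while the correct ones sum to 35.

```java
import java.util.stream.IntStream;

// Hypothetical class: compares codellama's reported word lengths to the real ones
public class CodellamaCheck {

    // Lengths as codellama reported them, in sentence order
    // ("The" = 1 and the second "the" = 2 are its two mistakes)
    static final int[] REPORTED = {1, 5, 5, 3, 5, 4, 2, 4, 3};

    // Correct lengths for "The quick brown fox jumps over the lazy dog"
    static final int[] CORRECT = {3, 5, 5, 3, 5, 4, 3, 4, 3};

    static int sum(int[] lengths) {
        return IntStream.of(lengths).sum();
    }

    public static void main(String[] args) {
        System.out.println("Sum of reported lengths: " + sum(REPORTED));
        System.out.println("Sum of correct lengths:  " + sum(CORRECT));
    }
}
```

So codellama's final total of 35 doesn't follow from its own numbers. It just happens to be right.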

For the record, among the commercial models, Claude 3, GPT-4, and even GPT-3.5-turbo get the right answer, but Gemini (presumably 1.0 Pro, via the web interface) gets all the lengths of the individual words right and then somehow adds them up to 39.

I told it to try again:

I thought it was wrong again before I noticed that I typed “jumped” in my question rather than “jumps”, so yeah, 36 is correct. Whew.

Pi, a conversational AI

I should mention one other AI model that hasn’t broken through in the popular media yet, but might be of interest to some of you. The model known as Pi is completely free and has a different goal from most of the others. While most AI models are about giving you answers to questions, Pi just wants to chat.

If you go to pi.ai, here’s what you’re greeted with:

Hey there, great to meet you. I’m Pi, your personal AI.

My goal is to be useful, friendly and fun. Ask me for advice, for answers, or let’s talk about whatever’s on your mind.

How's your day going?

I find it a bit too sugary sweet for my tastes, but it really is trying to be friendly. It also has a button that lets it read its answers to you, and on the mobile app you can talk to it as well.

Another feature of Pi is that it releases a Daily News Briefing that is read by one of their AI voices. I'm not sure how I feel about that, but it is short and effective.

Pi is the sort of model tech people talk about when they suggest AI models can replace psychologists, which says a lot more about the techbros making that statement than about actual reality. It's amazing how often tech people underestimate how much expertise it takes to actually do anything real, like drive a car or make travel reservations or even add up the lengths of strings.

Incidentally, when I asked Pi to sum the number of letters in my target string, it got the answer right the first time.

Oh, and there’s this:

Geez, now I HAVE to recommend it. Sneaky bastards.

(Help Your Boss Help You can be purchased here. Be sure to use the discount code kkmanage35 for a 35% discount.)

Perplexity

As long as I’m spending too much time in this newsletter talking about AI models, I should also mention Perplexity. Many people use that site as an alternative to Google, which makes a lot of sense given how far Google has fallen recently.

Perplexity’s specialty is summarizing information on the internet. Whereas Google would give you a list of links (the first half dozen or so either paid for or manipulated by SEO algorithms), Perplexity gives you a summary and several news articles.

Here’s a quick sample:

Money. Of course. I should have known.

The combination of a readable summary and included links to decent sources is quite appealing. I’m still using it on its free tier (I don’t think I can handle yet another subscription service), but I find myself using it more and more often.

If you have an opinion about the service, either positive or negative, please let me know.

Random Thoughts

A few miscellaneous topics that have been floating around in my head recently.

Many movies are much more fun on re-watch than the first time. My tentative theory is that movies that contain both great and awful scenes together fit that model best, because on a re-watch you can ignore the bad stuff and just enjoy the good parts. The canonical example would probably be Caddyshack, which is a really bad movie, but with so many incredible scenes and performances the overall experience is awesome. Practically every scene with Ted Knight, Bill Murray, and of course Rodney Dangerfield is fantastic, and Chevy Chase is mostly not annoying. Almost all the kids (the caddies, who were supposed to be the heart of the movie) are completely forgettable, and there’s not much of a plot at all, but who cares? It’s one of the most quotable movies of all time. Big hitter, the Llama. :)

I was talking to my wife and she decided she was okay with being beautiful and terrible as the Dawn, treacherous as the Sea, stronger than the foundations of the Earth.

“What’s the downside?” she said. “All shall love me and despair? Yeah, no prob. I can live with that.”

As for diminishing and going into the West, she said, “Nah, no thanks. I’m good.”

During my online talk I got a chance to use my only stateless services joke (for those who don’t know, a stateless service doesn’t retain any information in between calls):

Me: I think all services should be stateless.

You: Really, why do you think that?

Me: Why do I think what?

That reminds me of another gag that needs two people to tell properly:

Me: The problem with the world is that idiots are so sure of themselves, while smart people are full of doubts.

You: Hmm. Do you really think that’s true?

Me: Absolutely!

Feel free to re-use those, of course.

I’ve been watching the Apple TV+ series on the New England Patriots dynasty, and it’s quite the ride. I loved the early part. I was annoyed by Spygate, but never saw it as a big deal. I’ll never get over the Super Bowl loss that cost us a perfect season. I’m still furious at that lowlife Roger Goodell for suspending Tom Brady on no evidence for a manufactured controversy. The comeback against Atlanta was one for the ages. I haven’t gotten to the end of the series yet, but it can’t be pleasant. Oh well. Most teams would love to have a twenty year peak like we had, even if it was filled with both thrills and chaos.

We now enter the really difficult part of Daylight Saving Time, where we’ve lost an hour in (most of) the US, but Europe doesn’t switch over until the end of the month. Of course, that is a built-in excuse for missing any joint meetings you didn’t want to attend. Two other points:

I still find it weird that we’re saving daylight in the summer, when we have tons of it, and

When I’m saying it out loud, I still put an “s” on Savings. I know it’s technically wrong, but I don’t care.

Let’s move on to the Tweets and Toots.

Tweets and Toots

I can’t believe I missed that

Seriously, what a Dad Joke opportunity, and I let it slip by.

I am your density

Wait, Venkat AGAIN? I had to respond to that.

Yes, it’s destiny that forever links me to Venkat (which is what I was referring to in the Back to the Future reference in the title of this section).

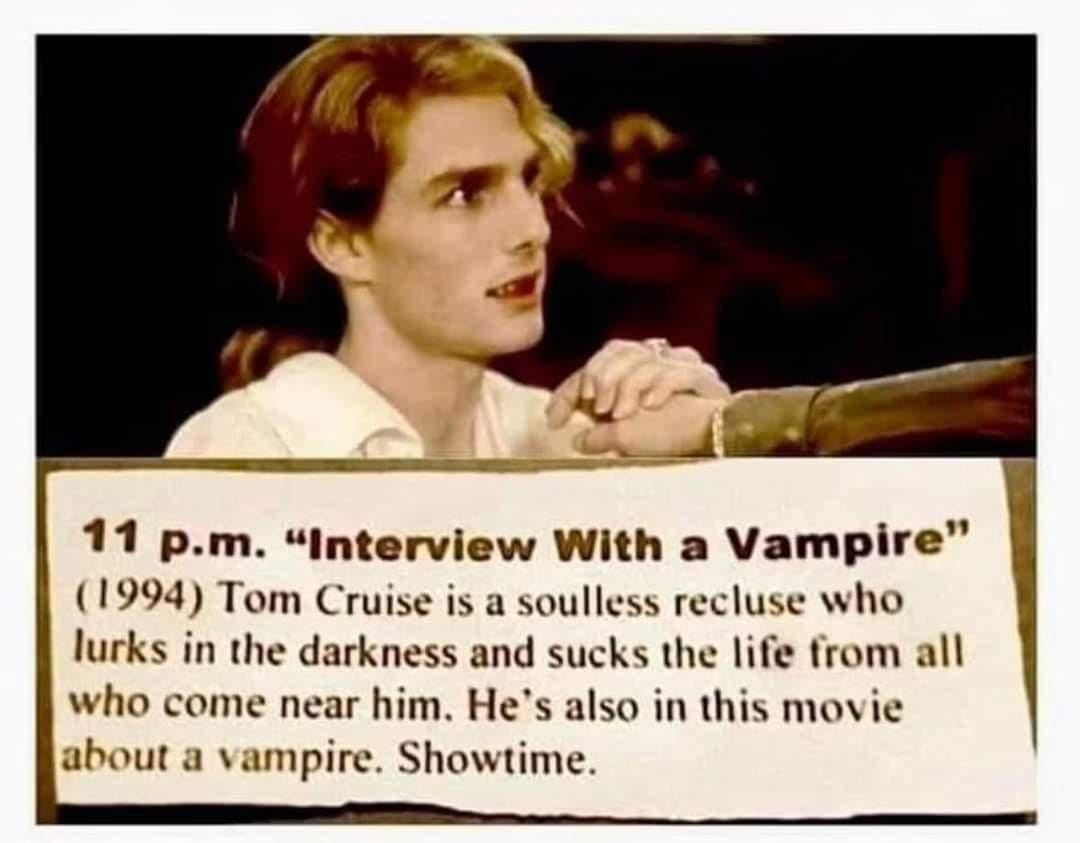

Creepy

Honestly, I don’t care enough about Tom Cruise to have an opinion, but that was funny.

True dat

That is a cold, plump kitty.

Leadership

Inspired.

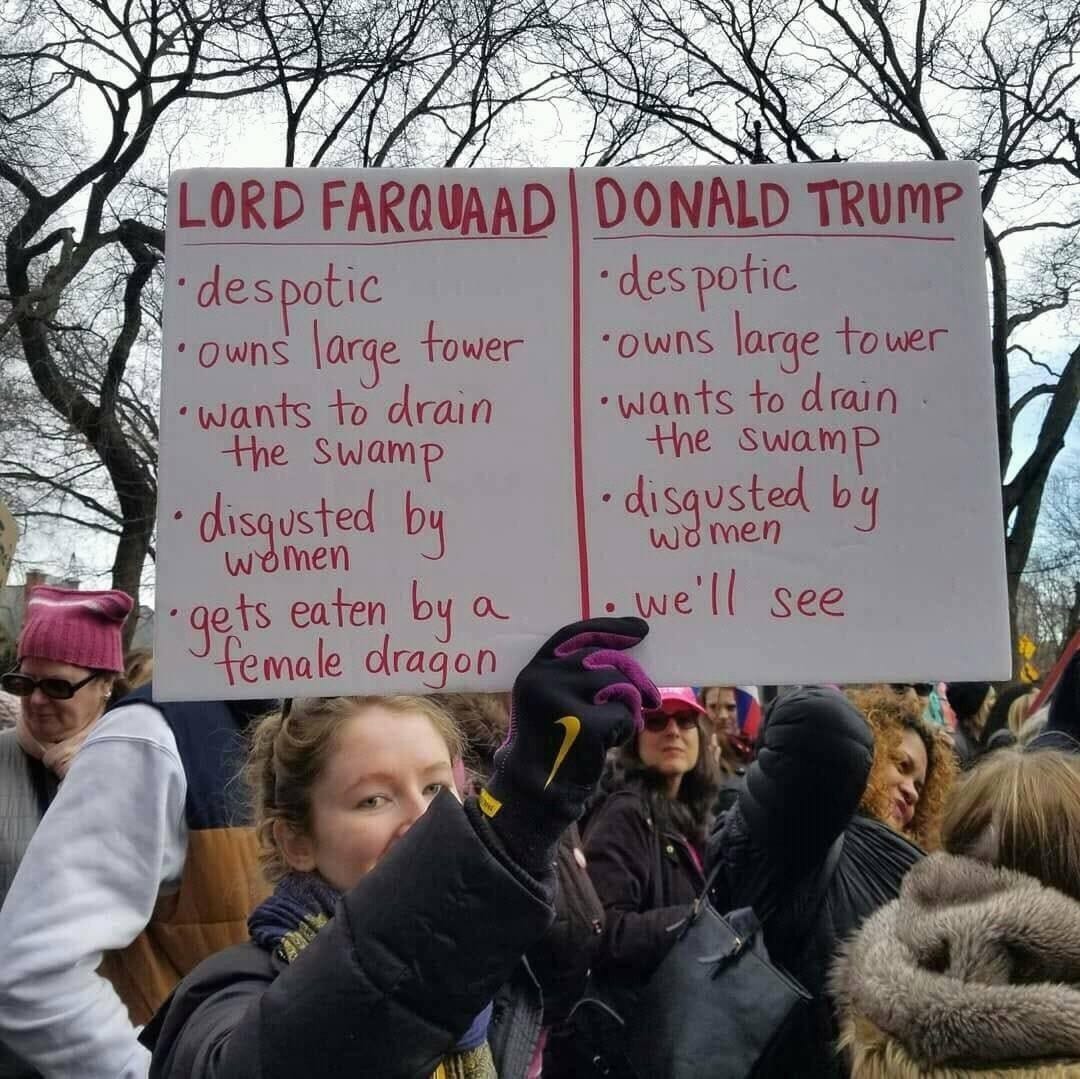

My money is on the dragon

The dragon becomes friends with a donkey, and the rest is history. Trump doesn’t need to make friends with an ass. He’s already the biggest one in any room he enters.

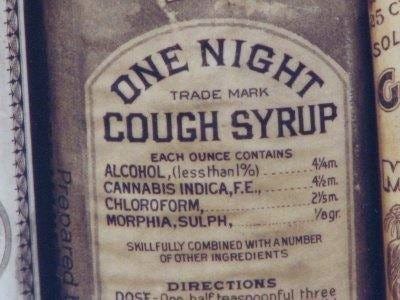

Give me a bottle of that good stuff

There might be some side effects, though.

Hard to keep them straight sometimes

And finally

Hello Kitty of Borg. You will be assimilated.

(Credit goes to Paul, @threedaymonk@sonomu.club on Mastodon, I think.)

Have a great week, everybody!

The video version of this newsletter will be on the Tales from the jar side YouTube channel tomorrow.

Last week:

Week 1 of my Spring in 3 Weeks course on the O’Reilly Learning Platform

Practical AI Tools for Java Developers, an NFJS Virtual Workshop

My talk on Mastering Java Testing with JUnit, Mockito, and AssertJ at the online IntelliJ IDEA Conference 2024

Latest Features in Java, on the O’Reilly Learning Platform

Trinity College (Hartford) class on Large Scale and Open Source Software

This week:

Week 2 of my Spring in 3 Weeks course on the O’Reilly Learning Platform

REST and Spring MVC (private class)

Trinity College (Hartford) class on Large Scale and Open Source Software — wait, no, it’s Spring Break already :)