Tales from the jar side: Modern Java features and AI, More ragging on RAG, Claude poetry, and the usual silly tweets and toots

I am struck by the ill. Bring me the Quills, both Day and Ny. (rimshot, h/t to MostlyHarmless on Mastodon. Also, I'm fine. Just a good gag.)

Welcome, fellow jarheads, to Tales from the jar side, the Kousen IT newsletter, for the week of March 24 - 31, 2024. This week I taught my Gradle Concepts and Best Practices course as an NFJS virtual workshop, and my regular Large-Scale and Open Source Computing course at Trinity College in Hartford, CT.

Regular readers of, listeners to, and video viewers of this newsletter are affectionately known as jarheads, and are far more intelligent, sophisticated, and attractive than the average newsletter reader or listener or viewer. If you wish to become a jarhead, please subscribe using this button:

As a reminder, when this message is truncated in email, click on the title to open it in a browser tab formatted properly for both the web and mobile.

AI endpoints and Modern Java

I was unfortunately too busy to make a new video this week, but I know what the next one is going to be about. I’ve been working with AI tools as REST services, and I found a natural application for several of the latest Java features (sealed interfaces, records with compact constructors, and pattern matching for switch) when accessing them.

The Ollama system lets you install various open source AI models on your local machine. In addition to downloading and running the models on demand, it includes a small web server that exposes a couple of endpoints on port 11434. For example, the simplest chat can be done by sending a POST request like this one:

    curl http://localhost:11434/api/generate -d '{
      "model": "llama2",
      "prompt": "Why is the sky blue?",
      "stream": false
    }'

If you install a model that also has “vision” capabilities (meaning it can read an image and tell you what’s in it), you can also send a request to the same endpoint:

    curl http://localhost:11434/api/generate -d '{
      "model": "llava",
      "prompt": "What is in this picture?",
      "stream": false,
      "images": ["iVBORw0KGgoAAAA..."]
    }'

The images array contains base64-encoded string representations of the images. Those requests are very similar; the only difference is that the second one includes the images array.
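If you would rather make the same call from Java than from curl, here is a minimal sketch using the JDK's built-in java.net.http client. The class name OllamaRequestSketch, its helper methods, and the naive JSON escaping are assumptions made for illustration, not the code behind the examples in this newsletter; a real application would build the body with a JSON library. The sketch only contacts the server when run with a --send argument, since Ollama may not be running.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Hypothetical helper: builds the same POST request as the first curl command.
class OllamaRequestSketch {
    static final String GENERATE_URL = "http://localhost:11434/api/generate";

    // Naive escaping of backslashes and double quotes only (assumption:
    // good enough for a demo; use a JSON library in real code).
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    // JSON body with the same three fields as the curl example.
    static String body(String model, String prompt) {
        return """
                {"model": "%s", "prompt": "%s", "stream": false}"""
                .formatted(escape(model), escape(prompt));
    }

    // Builds the POST request without sending it.
    static HttpRequest request(String model, String prompt) {
        return HttpRequest.newBuilder(URI.create(GENERATE_URL))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body(model, prompt)))
                .build();
    }

    public static void main(String[] args) throws Exception {
        HttpRequest request = request("llama2", "Why is the sky blue?");
        System.out.println(request.method() + " " + request.uri());
        System.out.println(body("llama2", "Why is the sky blue?"));
        // Only talk to the server when explicitly asked to.
        if (args.length > 0 && args[0].equals("--send")) {
            HttpResponse<String> response = HttpClient.newHttpClient()
                    .send(request, HttpResponse.BodyHandlers.ofString());
            System.out.println(response.body());
        }
    }
}
```

Adding an images array to the body would turn this into the vision request.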

Here is a sealed interface, which is an interface that can only be implemented by “permitted” classes, along with records that implement the interface and represent a text request and an image request, respectively:

    public sealed interface OllamaGenerateRequest
            permits OllamaGenerateTextRequest,
                    OllamaGenerateImageRequest {
        String model();
        String prompt();
        boolean stream();
    }

    public record OllamaGenerateTextRequest(
            String model,
            String prompt,
            boolean stream)
            implements OllamaGenerateRequest {
    }

    public record OllamaGenerateImageRequest(
            String model,
            String prompt,
            List<String> images,
            boolean stream)
            implements OllamaGenerateRequest {

        // Transform file names into base64-encoded strings
        public OllamaGenerateImageRequest {
            images = images.stream()
                    .map(image -> isBase64Encoded(image) ?
                            image : FileUtils.encodeImage(image))
                    .collect(Collectors.toList());
        }

        private boolean isBase64Encoded(String image) {
            // Implement Base64 format validation.
            return image.matches("... long ugly regex ...");
        }
    }

The sealed interface defines the three properties the records have in common, and only permits two types to implement the interface. The first record is routine, but the second one has a compact constructor. That’s a constructor (more of a block, actually) that you can use to transform input data into what the record wants. Here it checks to see if the image strings are already base64 encoded, and, if not, it does the encoding automatically.
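To see a compact constructor do its job in isolation, here is a minimal, self-contained sketch. The names ImageRequestSketch, looksLikeBase64, and encodeFile are hypothetical stand-ins (the real FileUtils.encodeImage and the regex are not shown in this newsletter), and instead of a regex the check simply asks the JDK's Base64 decoder whether it accepts the string. That check is only a heuristic: a short file name made up entirely of Base64 characters would be mistaken for encoded data.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Base64;
import java.util.List;

class CompactConstructorDemo {
    // Writes three bytes to a temporary file, then builds a request from
    // that file's path plus a string that is already Base64-encoded.
    static List<String> demo() {
        try {
            Path file = Files.createTempFile("robot", ".png");
            Files.write(file, new byte[] {1, 2, 3});
            return new ImageRequestSketch("llava", "What is in this picture?",
                    List.of(file.toString(), "AQID"), false).images();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(demo()); // both entries end up as "AQID"
    }
}

// Hypothetical stand-in for the image request record.
record ImageRequestSketch(String model, String prompt, List<String> images, boolean stream) {

    // Compact constructor: reassigns the "images" parameter before the
    // record's field is initialized from it.
    ImageRequestSketch {
        images = images.stream()
                .map(image -> looksLikeBase64(image) ? image : encodeFile(image))
                .toList();
    }

    // Heuristic (assumption): a string is Base64 if the JDK decoder accepts it.
    static boolean looksLikeBase64(String s) {
        try {
            Base64.getDecoder().decode(s);
            return true;
        } catch (IllegalArgumentException e) {
            return false;
        }
    }

    // Assumed replacement for FileUtils.encodeImage: read the file, encode its bytes.
    static String encodeFile(String path) {
        try {
            return Base64.getEncoder().encodeToString(Files.readAllBytes(Path.of(path)));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

The caller never sees the difference: whether it passes a path or an encoded string, images() always returns encoded data.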

My corresponding method that handles the requests looks like this:

    public String generate(OllamaGenerateRequest request) {
        return switch (request) {
            case OllamaGenerateImageRequest imageRequest -> {
                System.out.printf(
                        "Reading image using %s%n",
                        imageRequest.model());
                yield ollamaInterface.generate(imageRequest).response();
            }
            case OllamaGenerateTextRequest textRequest -> {
                System.out.printf(
                        "Generating text response from %s...%n",
                        textRequest.model());
                yield ollamaInterface.generate(textRequest).response();
            }
        };
    }

That’s pattern matching for switch. Since the method mostly just forwards the request to somewhere else (the ollamaInterface, not shown), I didn’t have to do that at all, but since I wanted to log whether I was reading an image or generating text, this was a really easy way to do it. The switch expression is exhaustive, too (meaning it doesn’t need a default clause), because there are only two permitted options and both are covered.
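Here is a stripped-down sketch of the same idea that runs on its own, assuming Java 21. The types Request, TextRequest, and ImageRequest are simplified, hypothetical stand-ins for the Ollama records, and instead of type patterns it uses record deconstruction patterns, a related Java 21 feature, to pull the components out directly. There is still no default branch: because the interface is sealed, the compiler can verify that both permitted types are covered.

```java
import java.util.List;

class SwitchSketch {
    // Simplified, hypothetical stand-ins for the Ollama request types.
    sealed interface Request permits TextRequest, ImageRequest {}
    record TextRequest(String model, String prompt) implements Request {}
    record ImageRequest(String model, String prompt, List<String> images) implements Request {}

    // No default branch needed: the sealed interface makes the switch exhaustive.
    static String describe(Request request) {
        return switch (request) {
            case TextRequest(String model, String prompt) ->
                    "Generating text response from %s".formatted(model);
            case ImageRequest(String model, String prompt, List<String> images) ->
                    "Reading %d image(s) using %s".formatted(images.size(), model);
        };
    }

    public static void main(String[] args) {
        System.out.println(describe(new TextRequest("llama2", "Why is the sky blue?")));
        System.out.println(describe(new ImageRequest("llava", "What is in this picture?",
                List.of("iVBORw0KGgoAAAA..."))));
    }
}
```

If a third record were added to the permits clause, this switch would stop compiling until a matching case was added, which is exactly the safety net you want.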

I tested this with a few text models and with two image models. The image test looks like this:

    @ParameterizedTest(name = "{0}")
    @ValueSource(strings = {OllamaService.LLAVA, OllamaService.BAKLLAVA})
    void generateWithImage(String model) {
        var imageRequest = new OllamaGenerateImageRequest(
                model,
                "What is in this image?",
                List.of(
                        "src/main/resources/images/happy_leaping_robot.png"),
                false);
        var response = service.generate(imageRequest);
        assertFalse(response.isBlank());
        System.out.println(response);
    }

The image models are called llava (the “v” stands for vision, not Vendetta), and bakllava (whose name is an obvious pun).

For the record, the Ollama models documentation says:

🌋 LLaVA is a novel end-to-end trained large multimodal model that combines a vision encoder and Vicuna for general-purpose visual and language understanding.

BakLLaVA is a multimodal model consisting of the Mistral 7B base model augmented with the LLaVA architecture.

Here is the image I asked it to read, which I generated using DALL-E 3:

The descriptions that came back were:

llava: “The image shows a robot with a humanoid form, adorned with green foliage. It's standing in a lush environment with trees and bushes around it. The robot appears to be celebrating or cheering, with its arms raised in the air and a joyful expression on its face.”

bakllava: “3D robot with leaves coming out of it, posed in a triumphant position, with its arms raised. The background is green and appears to be computer-generated.”

Sure, I’ll go with that. Hopefully I can get the corresponding video made this week, but that might be tough since I have an NFJS tour event in St. Louis on Friday. We’ll see.

Ragging on RAG again

A couple of weeks ago in this newsletter I talked about Retrieval-Augmented Generation (RAG), which is where you upload your own files to an AI tool and ask questions about them.

At least that’s what’s supposed to happen. The challenge is that AI tools have a limited amount of memory, called the context window. In the early days of GPT-3.5, the context window was only 4K tokens (1000 tokens is about 750 words). GPT-4 raised that to 8K. Then Claude, from Anthropic, raised the bar to a full 100K tokens in its context window. GPT-4 Turbo topped that with 128K, and these days Claude 3 allows 200K in the context window. The new limit is much bigger still. Google’s Gemini 1.5 (still in beta, but now I have access!) allows a whopping 1 million tokens in the context window, and rumor has it they’re working on a 10 million token version.

The whole idea behind RAG is that it provides a way to deal with tiny context windows. Since your entire document (supposedly) can’t fit into the context window, in the RAG process you:

1. Load the document.

2. Split it into chunks.

3. Encode each chunk as a vector of doubles.

4. Store those vectors in a vector database that supports similarity searches.

5. Get a question from a user and convert it to a vector as well.

6. Do a similarity search that finds the vectors from your docs that most closely align with the question.

7. Add just those chunks to the context window, and finally,

8. Ask the question of the AI tool with those chunks in the context.

Fairly complicated, but it’s the same process every time, and you can prepare your own documents for the vector database ahead of time. You just have to implement the search, or let one of the major frameworks do it for you.
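To make that process concrete, here is a toy sketch of it in plain Java. Everything in it is an assumption made for illustration: the class name ToyRag, the word-count "embedding" (a stand-in for a real embedding model), and the in-memory list (a stand-in for a real vector database). It stops at building the prompt rather than calling an AI tool.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.stream.IntStream;

// Toy illustration of the RAG process; every name here is hypothetical.
class ToyRag {
    private final List<String> chunks;
    private final Map<String, Integer> vocabulary = new HashMap<>();
    private final List<double[]> vectors = new ArrayList<>();

    // Encode and store: turn each chunk into a vector and keep it in memory.
    ToyRag(List<String> chunks) {
        this.chunks = List.copyOf(chunks);
        for (String chunk : this.chunks) {
            for (String word : words(chunk)) {
                vocabulary.putIfAbsent(word, vocabulary.size());
            }
        }
        for (String chunk : this.chunks) {
            vectors.add(embed(chunk));
        }
    }

    // Split: break a document into chunks of at most maxWords words.
    static List<String> split(String document, int maxWords) {
        String[] words = document.trim().split("\\s+");
        List<String> result = new ArrayList<>();
        for (int i = 0; i < words.length; i += maxWords) {
            int end = Math.min(i + maxWords, words.length);
            result.add(String.join(" ", Arrays.copyOfRange(words, i, end)));
        }
        return result;
    }

    private static List<String> words(String text) {
        return Arrays.stream(text.toLowerCase().split("\\W+"))
                .filter(word -> !word.isEmpty())
                .toList();
    }

    // Stand-in for a real embedding model: a vector of word counts.
    private double[] embed(String text) {
        double[] vector = new double[vocabulary.size()];
        for (String word : words(text)) {
            Integer index = vocabulary.get(word);
            if (index != null) {
                vector[index]++;
            }
        }
        return vector;
    }

    private static double cosine(double[] a, double[] b) {
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return (normA == 0 || normB == 0) ? 0 : dot / Math.sqrt(normA * normB);
    }

    // Similarity search: embed the question, return the k closest chunks.
    List<String> retrieve(String question, int k) {
        double[] query = embed(question);
        return IntStream.range(0, chunks.size()).boxed()
                .sorted(Comparator.comparingDouble(
                        (Integer i) -> cosine(vectors.get(i), query)).reversed())
                .limit(k)
                .map(chunks::get)
                .toList();
    }

    // Build the prompt that would be sent to the AI tool.
    String prompt(String question, int k) {
        return "Context:\n" + String.join("\n", retrieve(question, k))
                + "\n\nQuestion: " + question;
    }

    public static void main(String[] args) {
        ToyRag rag = new ToyRag(List.of(
                "Gradle builds Java projects",
                "Ollama runs models locally",
                "Tika extracts text from PDF files"));
        System.out.println(rag.prompt("Which tool extracts text from a PDF?", 1));
    }
}
```

In a real system the frameworks swap in an embedding model and a vector store for the two stand-ins, but the shape of the code stays about the same.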

The thing is, though, the fundamental assumption at the beginning is no longer as true as it used to be. Sure, it’s tough to fit a document into 4K tokens, but 200K is a lot, and a million is a whole lot.

I decided to do an experiment. I used the Apache Tika project to read in a PDF copy of my book HELP YOUR BOSS HELP YOU and count the tokens. Gemini has a REST endpoint just for counting tokens, so I set up a method to access it and did this test:

    @Test
    void countTokens() {
        int totalTokens = service.countTokens(
                "What is the airspeed velocity of an unladen swallow?");
        assertThat(totalTokens).isEqualTo(12);
    }

That sentence contains 12 tokens. What about my entire book?

    @Test
    void countBookTokens() throws Exception {
        String hybhy = PDFTextExtractor.extractText(
                "src/main/resources/pdfs/help-your-boss-help-you_P1.0.pdf");
        int totalTokens = service.countTokens(hybhy);
        System.out.println(totalTokens);
    }

The book is about 130 pages of text, or about 150 pages overall. That corresponds to just over 63,000 words. The result from this method is: 79,446 tokens. So my 60K word book needs about 80K tokens in all, which is consistent with the roughly 3/4 metric mentioned above.
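That arithmetic is easy to turn into a quick sanity check before reaching for RAG. This is a rough sketch only: the class and method names are made up for illustration, the 3/4 words-per-token ratio is just the rule of thumb from earlier rather than an exact figure, and the 4,096 tokens reserved for the answer is an arbitrary assumption.

```java
// Hypothetical helper for deciding whether a document fits in a context window.
class ContextWindowCheck {
    // Rule of thumb: 1000 tokens is about 750 words,
    // so tokens is roughly words * 4 / 3, rounded up.
    static long estimateTokens(long words) {
        return (words * 4 + 2) / 3;
    }

    // True if the document plus the room reserved for the answer fits in the window.
    static boolean fits(long documentTokens, long contextWindow, long reservedForAnswer) {
        return documentTokens + reservedForAnswer <= contextWindow;
    }

    public static void main(String[] args) {
        long estimated = estimateTokens(63_000); // estimate from the word count
        long measured = 79_446;                  // value reported by the token counter
        long reserved = 4_096;                   // assumed room for the answer
        System.out.println("Estimated tokens: " + estimated);
        System.out.println("Fits in 4K?   " + fits(measured, 4_096, reserved));
        System.out.println("Fits in 200K? " + fits(measured, 200_000, reserved));
        System.out.println("Fits in 1M?   " + fits(measured, 1_000_000, reserved));
    }
}
```

The estimate from the word count comes out a bit higher than the measured count, which is about what you would expect from a rule of thumb.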

I don’t need Gemini 1.5 to hold that many tokens. Both Claude 3 and GPT-4 can handle 80K with room left over for the answers. I submitted this request to Gemini 1.5 anyway:

    @Test
    void getCompletionWith15Pro() throws Exception {
        String hybhy = PDFTextExtractor.extractText(
                "src/main/resources/pdfs/help-your-boss-help-you_P1.0.pdf");

        String prompt = """
                Here is the text from the book "Help Your Boss Help You":
                %s
                Answer the following question based on information
                contained in the book:
                %s
                """.formatted(hybhy,
                "What are the top five major points made in the book?");

        GeminiResponse response = service.getCompletionWithModel(
                GeminiService.GEMINI_1_5_PRO,
                new GeminiRequest(List.of(new Content(
                        List.of(new TextPart(prompt))))));
        System.out.println(response);

        String text = response.candidates().getFirst().content()
                .parts().getFirst().text();
        assertNotNull(text);
        System.out.println(text);
    }

This is what I got back:

## Top 5 Major Points from "Help Your Boss Help You":

1. **Conflict with your manager is inevitable, but you can make it productive.** You and your manager have different agendas and incentives, leading to potential clashes. However, by understanding these differences and employing the right strategies, you can turn conflict into opportunities for growth and mutual benefit.

2. **Build a relationship based on constructive loyalty.** This means being a reliable and supportive employee while maintaining your own integrity and ability to push back when necessary. Trust is essential, and you can foster it by demonstrating responsibility, responsiveness, and respect for the chain of command.

3. **Master the art of "good-enough answers."** Don't get bogged down trying to find perfect solutions to every question. Learn to provide timely, preliminary answers that address your manager's immediate concerns and offer a path to further exploration if needed. This shows responsiveness and builds trust.

4. **Communicate effectively by understanding your manager's personality type.** The book explores personality frameworks like MBTI and Keirsey Temperament Sorter to help you tailor your communication style to your manager's preferences. This ensures your arguments are heard and understood effectively.

5. **Know how and when to push back against decisions you disagree with.** Use the "Tit for Tat" strategy from game theory to object to decisions that negatively impact you, but do so in a way that preserves the loyalty relationship. Negotiate, then get back to work regardless of the outcome. This trains your manager to consider your needs in future decisions.

These five points provide a framework for building a healthy and productive relationship with your manager, navigating inevitable conflicts, and ultimately achieving your career goals.

That’s not a bad summary, actually, but the real point is that the entire text of the book fit comfortably inside the context window, and Gemini was able to read it and answer questions about it.

So much for RAG. That’s not completely true, of course. It can’t be a bad thing to split your own information into easily digestible chunks and store them in a database for searching, but it’s like the old days when computers were constructed using stone knives and bearskins.

Back then (for me, the 80’s and early 90’s) we had tiny amounts of RAM to work with, and we played tricks to fit our data into it, swapping the relevant chunks in and out. Nowadays we have gigs and gigs available, and we are only limited by how much money we are willing to spend.

Everybody in AI, and I mean everybody, talks about RAG and writes blog posts or makes videos about it. My suggestion is that if your data consists of 10 complete books or fewer, you might consider just loading them all into the context window and seeing if that works. Just a thought.

More AI Poetry

Claude 3 from Anthropic comes in three varieties: Haiku, Sonnet, and Opus. That’s in order of smallest to largest, which also aligns with fastest to slowest, least to most expensive, and worst to best answers. A few weeks ago in this newsletter I talked about using Haiku to write a haiku. It finally occurred to me that if I’m going to do that, I might as well ask Sonnet to write a sonnet, and Opus to write an opus.

Here are my tests, part of a larger set:

    @Nested
    class CompositionTests {

        @Test
        void haiku_writes_a_haiku() {
            String question = """
                    Write a haiku about Java development
                    with AI tools.
                    """;
            var response = claudeService.getClaudeMessageResponse(
                    question, ClaudeService.CLAUDE_3_HAIKU);
            System.out.println(response);
            assertThat(response).isNotBlank();
        }

        @Test
        void sonnet_writes_a_sonnet() {
            String question = """
                    Write a sonnet about Java development
                    with AI tools.
                    """;
            var response = claudeService.getClaudeMessageResponse(
                    question, ClaudeService.CLAUDE_3_SONNET);
            System.out.println(response);
            assertThat(response).isNotBlank();
        }

        @Test
        void opus_writes_an_opus() {
            String question = """
                    Write an opus about Java development
                    with AI tools.
                    """;
            var response = claudeService.getClaudeMessageResponse(
                    question, ClaudeService.CLAUDE_3_OPUS);
            System.out.println(response);
            assertThat(response).isNotBlank();
        }
    }

Here are the responses:

Haiku about Java development with AI tools:

Coding with AI,

Algorithms optimized,

Java's power shines.

Whaddyaknow, the number of syllables is right on all three lines. Imagine that.

Here's a sonnet about Java development with AI tools:

With Java's power, we build and create,

Crafting code that runs on every device,

But now AI tools aid our coding fate,

Enhancing our skills with insights so precise.

Intelligent assistants, ever at hand,

Suggest solutions, optimize our flow,

Guiding us through complexities so grand,

Helping us learn and letting our skills grow.

From code completion to bug detection,

AI tools empower our development quest,

Streamlining tasks with utmost perfection,

Allowing us to focus on what's best.

Java and AI, a harmonious pair,

Elevating our craft with ingenuity rare.

Let’s see: a Spenserian sonnet consists of 14 lines of iambic pentameter in the form ABAB, BCBC, CDCD, EE. This one actually follows the Shakespearean scheme (ABAB, CDCD, EFEF, GG), which is pretty close and one of the other rhyming schemes allowed. Not one to write home about, but certainly better than I could do. Plus, it took about 4 seconds for the AI to produce.

What about the opus? Prepare yourself for this saga:

In the realm of code, where logic reigns supreme,

A tale unfolds, a story yet unseen.

Of Java's might and AI's guiding hand,

A partnership, a future bright and grand.

From humble roots, a language born and bred,

Java's influence, like wildfire, quickly spread.

With objects, classes, and inheritance true,

Developers found power, their skills grew.

But as the years passed by and projects swelled,

Complexity arose, a challenge to be quelled.

Enter AI, a beacon in the night,

To aid the coder, to make their burden light.

With machine learning, deep within its core,

AI tools could analyze, could explore.

Patterns found, suggestions made with ease,

Guiding developers, their minds to appease.

IDEs enhanced, with AI's gentle touch,

Autocomplete, refactoring, and such.

Errors caught, before they could take hold,

Saving time and trouble, a story to be told.

And as the projects grew, in scale and size,

AI's role, it did not minimize.

Code review, automated, thorough, and fair,

Ensuring quality, with nary a despair.

Performance tuned, with algorithms smart,

AI optimized, right from the start.

Faster, leaner, code that could endure,

A testament to AI's power, pure.

But AI's reach, not limited to code,

Documentation too, a heavy load.

Generated, updated, with precision clear,

Keeping knowledge current, year after year.

And as the future dawns, a new era bright,

Java and AI, a partnership of might.

Together they shall forge, a path unknown,

Empowering developers, their skills full-grown.

So let us raise a toast, to Java's reign,

And AI's aid, in every domain.

For in this opus, a tale to be told,

Of a future bright, and a partnership bold.

I mean, don’t give up your day job, but again it’s a lot better than I could do, especially because I’d give up on such a silly task pretty quickly. But it worked, right? I originally got so caught up thinking of Opus as a penguin, I didn’t think about what the names of the Claude varieties meant.

Tweets and Toots

And Toto too?

When Toto released their song Africa in 1982, we all knew it was good, but I never expected it would have this kind of staying power. Yet now I expect to have it going through my head for the next hour or so, and in all probability so will you. You’re welcome.

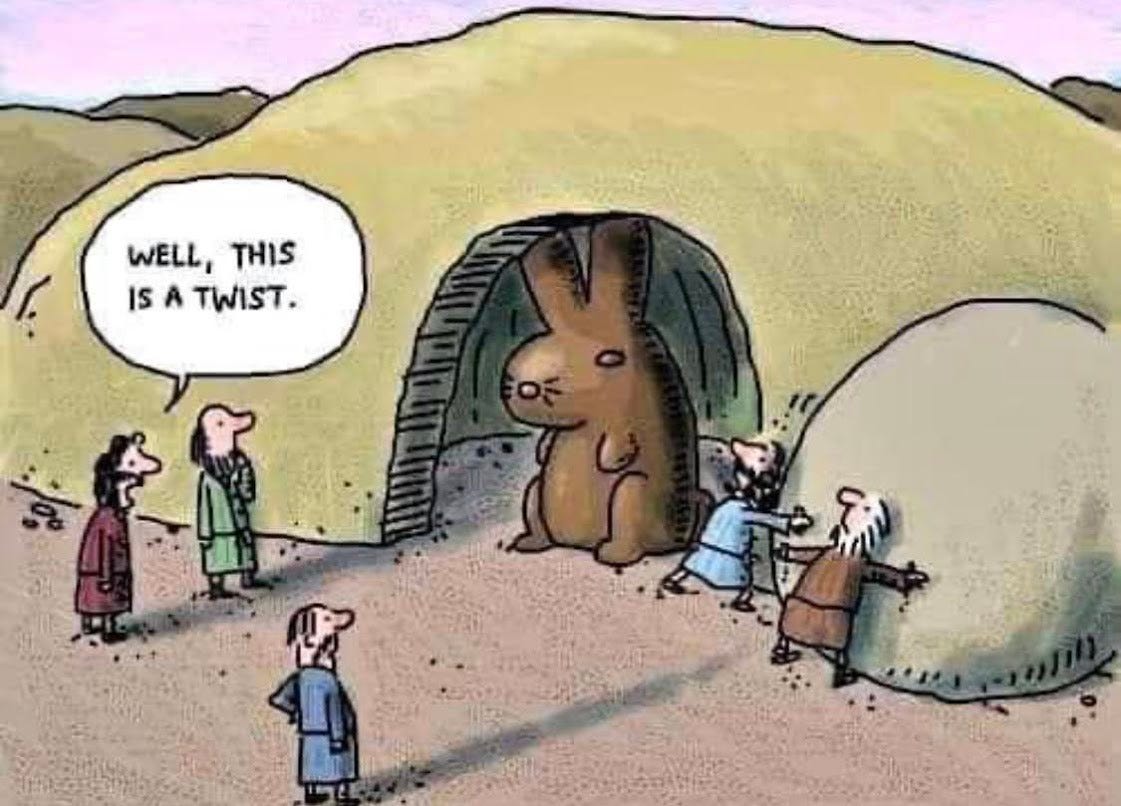

Easter gag

That’s an old one, but still funny.

The last egg hunt

I hadn’t seen this one before. Just edgy enough to be blasphemous, so buyer beware.

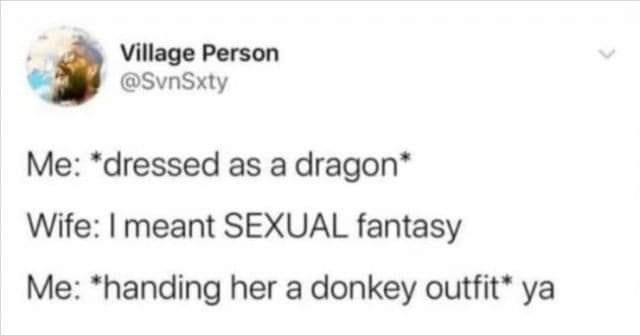

AITA?

From what I’ve heard, furries tend to be very kind and friendly people, but I suppose everybody has their limits.

Whoa, a Shrek joke

Speaking of furries. I think.

TDD FTW?

That’s the only good argument I’ve ever heard against writing tests.

Vendors Beware

I HAVE to find somewhere to use that gag. I should probably work on my Rorschach impression first.

Pride in Dad Jokes

Oof. Good one, though I might have said “goeth” just for the sheer pretentiousness.

Poor Commissioner Gordon

I totally get that now.

User Stories

I’ve been telling my Trinity students about user stories, but I’m afraid to bring up these examples.

We Went There

That’s it. We’re done. Seriously, there’s no coming back from that.

Have a great week, everybody!

The video version of this newsletter will be on the Tales from the jar side YouTube channel tomorrow.

Last week:

Gradle Concepts and Best Practices, an NFJS Virtual Workshop

Trinity College (Hartford) class on Large Scale and Open Source Software.

This week:

Managing Your Manager, on the O’Reilly Learning Platform (APAC time zones)

Upgrade to Modern Java, an NFJS Virtual Workshop

Trinity College (Hartford) class on Large Scale and Open Source Software.

Travel to St. Louis for the Gateway Software Symposium and give 5 talks on Friday, of which 4 are AI-related. Whee!