Tales from the jar side: Metadata, Arc of AI, College basketball, Really final, April Fools, and the usual toots and skeets

I replaced my rooster with a duck, and how I wake up at the quack of dawn. (rimshot)

Welcome, fellow jarheads, to Tales from the jar side, the Kousen IT newsletter, for the week of March 30 - April 6, 2025. This week I taught week 3 of my Spring in 3 Weeks course on the O’Reilly Learning Platform, and I spoke at the Arc of AI conference in Austin, TX. I also taught my regular schedule at Trinity College (Hartford).

Going Meta

When my friend and fellow speaker Nate Schutta and I talk to students about giving presentations, we often emphasize that you shouldn’t “go meta”. The term meta comes from metadata, which is data about the data.

(I’m sorry, Facebook doesn’t get to steal the term meta from us, especially without compensation.)

That means talking during the talk about the talk itself, or the process you followed to put it together. Nobody cares but you, and it distracts from the information you’re trying to impart. Most of the time when a speaker goes meta, they’re usually apologizing for some deficiency that the audience might never notice anyway. In a YouTube video, going meta is when the person making the video apologizes for how long it’s been since they made the last one, or how difficult it was to put this one together, or how life events got in the way.

There is a time and a place for that, but in general you shouldn’t apologize for a transgression that nobody noticed but you. Sure, there are some fans who will care if you miss a regular schedule (if I ever miss publishing this newsletter on a given Sunday, there are a handful of subscribers who would ask me if I was okay), but most people really don’t care.

That said, let me go meta for a moment. In last week’s newsletter, I did two things I don’t normally do:

I totally forgot to add a joke to the subtitle. I meant to. I just forgot. Sorry about that. No greater meaning should be taken from it, other than as another indirect sign of me getting old. Maybe I need to start using a checklist. To make up for last week’s missing joke, I’ll just say, “I was planning to cook an alligator, but unfortunately I only had a croc pot.” (rimshot)

I went on a bit of a rant about trading in my Tesla because of how appalled I am at Elon Musk.

I did get some messages about that. Most were favorable. One complained that they came here for Java material and didn’t want to read anything political (especially when they obviously didn’t agree with me).

Just for the record, and for any new readers, let me be clear about the purpose of this newsletter. I spend a lot of time teaching and learning Java-related topics. That includes Java-based languages like Java itself, Groovy, and Kotlin. I also spend time on frameworks like Spring, build tools like Gradle, testing frameworks like JUnit, and application areas like AI. Each week, when I learn something new or interesting, I report it here. Some weeks that involves lots of Java code, and some weeks not so much. I also tend to comment on what’s going on in the world around me.

Oh, and lots of subscribers come here only for the funny social media posts at the end, which is totally fine. :)

In effect, I like to say that this is a company newsletter for a one-person company. Some issues will include lots of technical content, and some issues won’t. But I do have to say that if you’re going to be offended by criticism of malicious, blithering idiots like Elon Musk, you might want to subscribe to a different free newsletter.

Speaking of which:

That’s from Steal My Tesla, a clever entrepreneur taking advantage of a market opportunity if there ever was one.

Also:

If you want some actual, honest to goodness Java content, read on.

Really, really final

I was sure this was an April Fool’s joke, but no, this is an actual thing:

The Java Enhancement Proposal draft is here. Make really final mean final.

I love it. I keep having flashbacks to A Few Good Men:

“This variable is final.”

“Overruled. With reflection, I can change it.”

“Your honor, I strenuously object. This variable is really final.”

“Oh, okay then.”

I totally want to see this adopted. I don’t care much about the underlying issue, probably because I’ve never been burned by it, but I really (!), really want to have that conversation some day.

Arc of AI Conference

This week I traveled to Austin, TX, for the Arc of AI conference. Given my college teaching schedule, I wasn’t able to stay long, but I did enjoy the time I spent there. Many of my friends were there, including both NFJS speakers (Nate Schutta, Brian Sletten, Craig Walls, Venkat Subramaniam, and others) and Null Pointers band members (Freddy Guime, Frank Greco — all we needed was a drummer to have a show right there), and several other friends.

My talk was on Prompt Stuffing vs RAG in AI models. I’ve discussed that in this newsletter before, but the basic idea is that all AI models have a training cut-off, after which their weights do not change. How, then, do you get your own data to be considered, whether it occurred after the end of training, or it’s proprietary, or otherwise unavailable to the model?

One way is to stuff all your information into the message you send to the AI. That’s (unofficially) called prompt stuffing, because, well, you’re stuffing everything into the prompt. That has some advantages and disadvantages:

Prompt Stuffing Pros:

The AI has all the information you want to supply immediately.

It’s really easy to do.

Over time, the amount of data you can fit in a message keeps going up.

The number of tokens you can fit inside the input message is called the context window. In the early days of AI models, that was only about 4K, which expanded to 8K, 16K, and even 32K if you paid extra. (Note: 1000 tokens is roughly 750 words in English.)

These days, the context window for GPT-4o is 128K, for Claude 3.7 it’s 200K, and for Gemini it’s a whopping 1 million tokens. That’s a lot. How effective the AI’s are at dealing with that much information is a matter of debate, but at least you’ve got the room if you need it.

Cons:

Context windows are sizable, but they’re not infinite.

Tokens cost money.

Depending on the query, much of the information you supply may be irrelevant.

The cost per token varies a lot, but by way of example, OpenAI charges $2.50 / million input tokens and $10 / million output tokens. An entire pdf book might be only about 75K tokens as input, and the output would probably be limited to 4k tokens or less, so the resulting cost would be about 22 cents. Not bad, given that you’re adding an entire book to the context window. There are also batch approaches, so if you have several questions to ask, you can cut way down on the cost of subsequent messages as they reuse the uploaded information.

Of course, that’s not zero, and if you’re asking questions about specific topics, a lot of that book is likely to be wasted information. The RAG approach (retrieval augmented generation) splits up the book into segments, encodes those segments, and stores them into a vector database. Then, when you ask a question, the question is also encoded, and the database is queried to find out which segments are “nearest” the query. That way, the only segments that are added to the actual input message are the relevant ones.

RAG Pros:

Input messages are much smaller.

Only relevant data is included in the input.

Scales to much larger amounts of data.

Cons:

More complex to set up and use.

Not good for questions that need a lot of the information, like summarizing the data.

Takes time to do the “similarity search”, which the database uses to return the most relevant information.

As my example, I used a system I’ve discussed here before, which is the Wikipedia page on the rap beef between Kendrick Lamar and Drake. That page is large and growing, and it contains information both before and after the end of training for all the major models. Heck, it’s considerably larger than when I last looked at this problem in my AI Integration class last semester.

I’ll make a long story short by summarizing a couple of the results. Prompt stuffing is great is your data fits comfortably in the context window, and previously reported errors (like having difficulty with specific facts at the start or end of data) are going away as models improve. RAG scales beyond that, but has problems losing context. For example, I added that Wikipedia page to my RAG system and asked a simple question like, “What new developments occurred in 2025?” None of the models could answer that. Most noticed that the year 2025 was referenced in the document, but they didn’t know how that connected to specific events. The parsing and splitting process broke everything up to the point where the AI didn’t know which parts belonged together any more.

I think that’s significant, because every company that talks about AI immediately wants a RAG system, and I doubt many of them realize what they’re losing in the process.

Anyway, the talk went well, the attendees were interested and enthusiastic, and I had a good time. The code is in this GitHub repository. Look at the JUnit tests inside the src/test/java hierarchy, in the package com.kousenit.rag.

One postscript: After returning from the conference, I was planning to record a new video about the topic. I was just about to do that, when a new comment came in to the Tales from the jar side YouTube channel asking a question about that exact example. It reminded me that I recorded a video back in February covering a lot of this material, and somehow I managed to forget that I’d already done that. I may record the new video anyway, given that I’ve updated everything, but I didn’t realize I now have enough videos that I can forget one I recorded only a couple months ago. Sigh.

College Basketball

I have two basketball items to note. The first, which I meant to include last week, is that my tiny little liberal arts college, Trinity College in Hartford, CT, won the Division III men’s national championship.

The link above points to the article in our school newspaper.

Now I’m embarrassed I wasn’t paying more attention. I had no idea this was going on, or I would have done something to support it, either in my classes or out of them. Pretty amazing, though.

I’m not much of a college basketball person, with the exception of UConn women’s basketball. They’ve been great for the last 30 years, literally. It’s been ten years since the women won a title, however, and they’re playing in the NCAA championship this afternoon at 3 pm EDT, so I have to watch that. I’ll try to get this newsletter out before then, because if they lose, I may be too depressed to finish it. We’ll see.

Toots and Skeets

There were a fair number of April 1 jokes from brands. Some were reasonably funny.

Text to Bark

“We fine tuned llama 3 to understand dogs, not just llamas.”

Okay, this is awesome. The article is Text to Bark: a Breakthrough in AI Pawdio. Finally, a use case for AI that everyone can agree is worthwhile.

Oh, great, it’s Monday

Here’s a direct link to the post, necessary because of Elon. This allows you to select Monday as a voice mode in ChatGPT, which gives a world-weary, unenthusiastic answer to your question from an AI that would clearly prefer not to be bothered. It worked on my app.

For those readers of a certain age, it sounds to me like how I imagine a female-voiced version of Marvin the paranoid android would sound in Hitchhiker’s Guide to the Galaxy.

The Monday voice is available until the end of the month, though I doubt I’m going to use it again.

Touch grass without going outside

The article says, “Dbrand wants you to feel less guilty about having your face buried in a screen all day and not getting outside to ‘touch grass.’ The company’s latest collection of skins lets you wrap your gadgets in bright green artificial turf so you can touch grass whenever you want and no matter where you are.”

Well, that’s one way to handle the problem, I guess.

Bluesky limits character counts

On April 1, Bluesky announced this:

It helps to know that the normal character count on Bluesky is 300 characters. For one day only, it was 299.

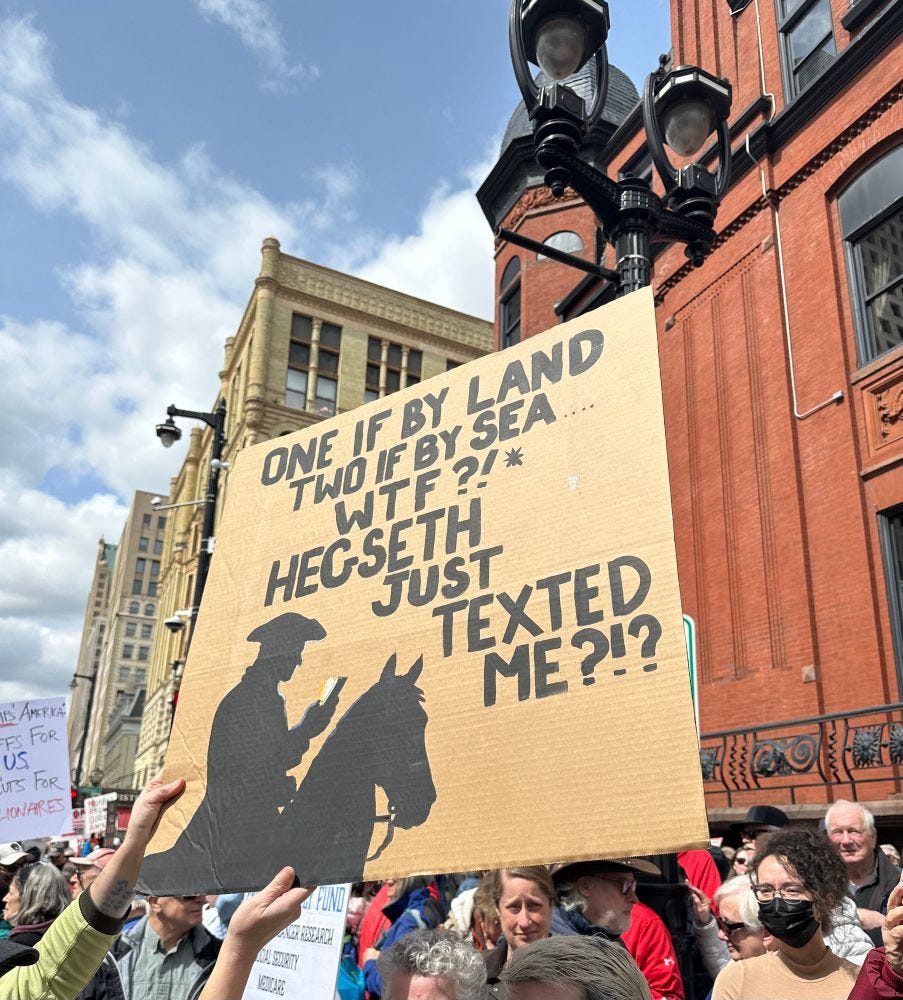

Protests around the country

I’m not going to say much about the “Hands Off!” protests around the country objecting to all those idiots in power, but a couple of signs were great.

Yeah, I can see how that might be a problem.

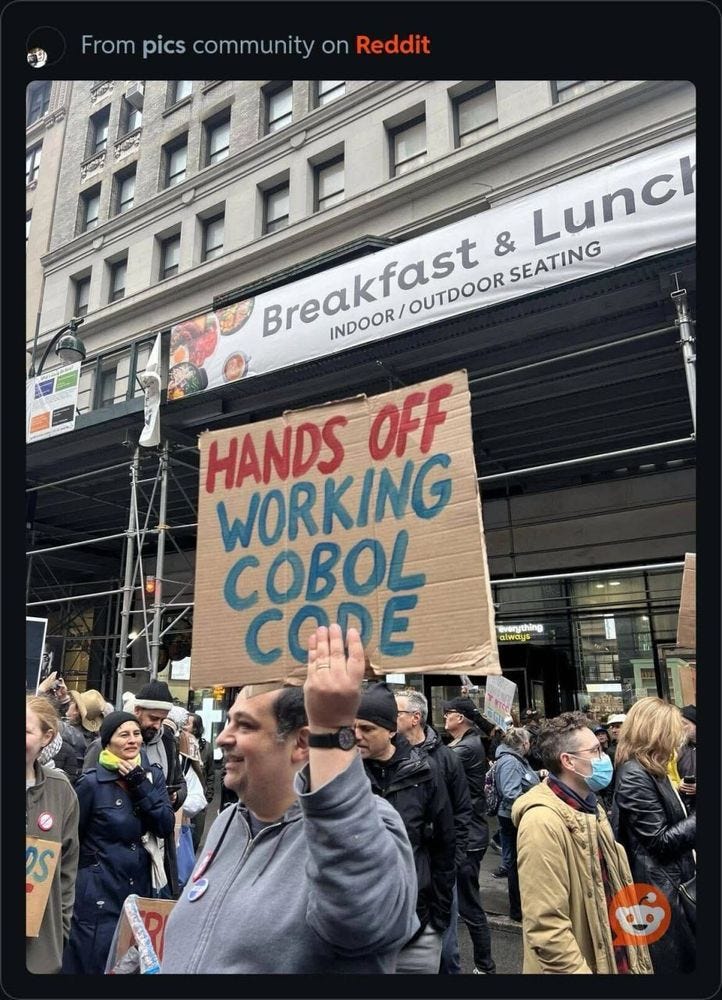

For the IT people, this was great:

They’ll find out how true that is, won’t they?

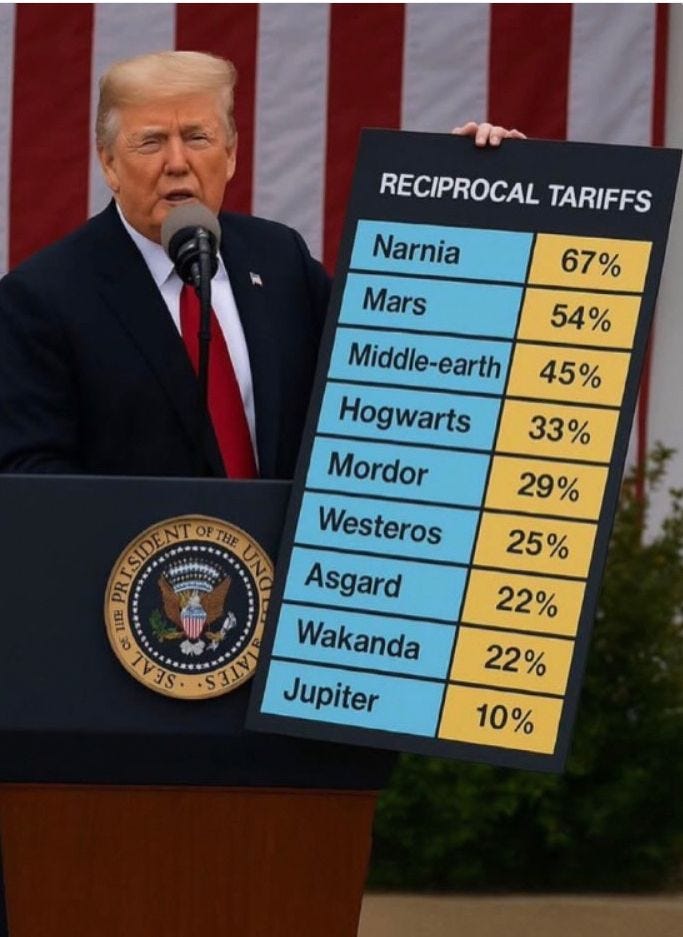

Tariffs

Again, I’m not going to dwell on this, other than a couple of good gags.

You might have seen this one, which made the rounds:

I’m concerned about a flightless instructor teaching flightful (well, what would you call them?) birds, but here we are.

Here they’re haranguing the ambassador to those penguin-only inhabited islands included in the tariffs:

Don’t take the deal, whatever it is.

They’re everywhere

What does it cost when the abyss stares back?

Split those hairs

Sadly, I know some descendants of that line.

Finally, a TV reference

I’m not sure why that strikes me as funny, but it does.

Have a great week everybody.

Last week:

Arc of AI conference in Austin, TX

Week 3 of Spring in 3 Weeks, on the O’Reilly Learning Platform

My regular schedule at Trinity College (Hartford)

This week:

My regular schedule at Trinity College (Hartford)