Tales from the jar side: Luma AI video answers the old question, Stable Diffusion stochastic parrots, Async requests in Java, and the usual tweets and toots

I love how the Earth rotates. It really makes my day. (rimshot)

Welcome, fellow jarheads, to Tales from the jar side, the Kousen IT newsletter, for the week of June 9 - 16, 2024. This week I taught week one of my Spring in 3 Weeks course on the O’Reilly Learning Platform and both my Practical AI Tools for Java Developers course and my Gradle Principles and Practices course as NFJS Virtual Workshops.

Regular readers of, listeners to, and video viewers of this newsletter are affectionately known as jarheads, and are far more intelligent, sophisticated, and attractive than the average newsletter reader or listener or viewer. If you wish to become a jarhead, please subscribe using this button:

As a reminder, when this message is truncated in email, click on the title to open it in a browser tab formatted properly for both the web and mobile.

Luma AI Video Generator

This week a company called Luma Labs released an AI text-to-video generator, called Dream Machine. It also works from existing images.

My experience with video generators is that they’re mostly awful. I’ve only used the one from Stability AI (the company that makes the open source image generator Stable Diffusion), because I can access that programmatically. The images are generally fine, but the one video I made was so creepy and weird I stopped experimenting.

(I’d include it here, but then you’d have nightmares too. You’re welcome.)

Anyway, for Luma AI, I registered and went back to DALL-E 3 to generate an image for Luma to animate. My prompt was, “Draw a photo-realistic image of a chicken crossing a road,” in order to try and answer the age-old question:

Why did this particular chicken cross this particular road? I have no idea. My wife suggested that the crosswalk made it look like a country version of the Beatles’ Abbey Road album, so there has to be a related joke (no doubt a cover (rimshot)), but I haven’t found it yet.

Wait — how about this:

Q: Why did the chicken cross Abbey Road?

A: Because (… the wind is high, it blows my mind, ah-ah-ah-ah-ah-ah)

Yeah, I’ll keep working on it.

I uploaded the chicken image to Luma and this is what it created:

(Something in the way she moves … (rimshot). Oh, and Here comes the sun — the most striking image in the background.)

I imagine that you’re probably as confused as I am right now. Why is the road moving? At least the chicken walks like a chicken.

As I mentioned to my editor, when it comes to AI tools, my assessment is:

Text to text (“chat”) is pretty solid by now, hallucinations aside.

Text to image is at least one generation behind that, though it’s getting better.

Text to video is at least one generation behind text to image, and very strange.

Image to video is about as bad as text to video.

Text to audio is pretty good, though the APIs generally don’t let you correct pronunciation or add your own voices, with some exceptions.

Audio to text is also pretty good, depending on the language.

My book (did I mention my upcoming book, Adding AI to Java, from Pragmatic Programmers, hopefully to be released in beta next month?) discusses chat, image generation, text-to-speech (that’s generating an mp3 from text), and audio-to-text (both transcription and translation), but I’m leaving video alone. Maybe that will be in the second edition, but it’s totally not ready for prime time yet.

(Okay, one more: Why did the chicken cross Abbey Road? To avoid Maxwell’s Silver Hammer. Yeah, I’ll show myself out.)

Stable Images

Stability AI made a small splash with a new release this week, when they announced Stable Diffusion 3 Medium. According to the “key takeaways” section of that news item:

“Stable Diffusion 3 Medium is Stability AI’s most advanced text-to-image open model yet.”

“The small size of this model makes it perfect for running on consumer PCs and laptops as well as enterprise-tier GPUs.”

There’s more, but you get the idea. I didn’t bother downloading the weights and trying to run it locally. I’m happy to use their cloud service, which costs me some credits, but accessing a REST API like that is what my book is all about. According to the documentation, the cost per model is:

sd3-medium requires 3.5 credits per generation

sd3-large requires 6.5 credits per generation

sd3-large-turbo requires 4 credits per generation

Prices are $10 per thousand credits, so each credit costs one cent and those individual model prices are really in cents (e.g., 3.5 cents for medium, 6.5 cents for large, and 4 cents for turbo). I figured, what the heck, let’s run them all in a JUnit 5 parameterized test. Here is the result for the brand new sd3-medium model, with the prompt “stochastic parrots playing chess”:

Not bad, though the green parrot has some issues with one of its wings. How did the sd3-large model do?

That looks rather sweet, if you don’t look too closely. A few of the chess pieces are part white and part black, too, which brings up some interesting questions.

How about the sd3-large-turbo model?

Much more like a cartoon this time, and the parrot on the right has a claw at the end of its wing, though that would make it easier to move the pieces. The white player has at least three Kings, which is a bit awkward, and that’s not counting the black-and-white King. I think if I was doing this for real, I’d try that one over again. All in all, though, I think the medium model is doing fine.

(Sure, go ahead and say it: This whole example is for the birds. Rimshot.)

Async Requests

I spent some time this week playing with Ollama again. One of the characteristics of the Ollama REST API is that it defaults to streaming the response one token (roughly one word) at a time. For example, if I access it using a curl request:

$ curl http://localhost:11434/api/generate -d '{ "model": "llama3", "prompt": "Why is the sky blue?" }'

This is what I get:

{"model":"llama3","created_at":"...","response":"What","done":false}
{"model":"llama3","created_at":"...","response":" a","done":false}
{"model":"llama3","created_at":"...","response":" great","done":false}
{"model":"llama3","created_at":"...","response":" question","done":false}
{"model":"llama3","created_at":"...","response":"!\n\n","done":false}
{"model":"llama3","created_at":"...","response":"The","done":false}
{"model":"llama3","created_at":"...","response":" sky","done":false}
{"model":"llama3","created_at":"...","response":" appears","done":false}
{"model":"llama3","created_at":"...","response":" blue","done":false}
{"model":"llama3","created_at":"...","response":" because","done":false}

...and so on, until the response is complete. In my book, I’ve been careful to add "stream":false to all my requests to avoid this. With that property set, the system waits until the response is complete before returning anything.

It finally occurred to me that I could still work with the streaming system by using asynchronous requests. Here’s the code, which I imagine most readers will happily skip:
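What follows is a minimal sketch of the approach rather than a definitive implementation. It assumes Java 11 or later and a local Ollama server on the default port. The class name `OllamaStreamSketch` and the helper `extractFragment` are hypothetical names chosen for illustration, and the regex-based parsing is a stand-in for a real JSON library.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.concurrent.CompletableFuture;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical class name, used here only for illustration
class OllamaStreamSketch {

    // Finds the "response" string field in one streamed JSON line
    private static final Pattern RESPONSE_FIELD =
            Pattern.compile("\"response\":\"((?:[^\"\\\\]|\\\\.)*)\"");

    // Returns the text fragment from one line, or "" if the field is absent.
    // Only \n, \" and \\ are unescaped; a JSON library would be more robust.
    static String extractFragment(String jsonLine) {
        Matcher matcher = RESPONSE_FIELD.matcher(jsonLine);
        if (!matcher.find()) {
            return "";
        }
        return matcher.group(1)
                .replace("\\n", "\n")
                .replace("\\\"", "\"")
                .replace("\\\\", "\\");
    }

    // Sends the prompt asynchronously and prints fragments as lines arrive
    static CompletableFuture<Void> generate(String model, String prompt) {
        // Naive JSON body: assumes model and prompt contain no quotes or backslashes
        String body = String.format(
                "{\"model\": \"%s\", \"prompt\": \"%s\"}", model, prompt);

        HttpRequest request = HttpRequest.newBuilder()
                .uri(URI.create("http://localhost:11434/api/generate"))
                .header("Accept", "text/event-stream")
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();

        return HttpClient.newHttpClient()
                .sendAsync(request, HttpResponse.BodyHandlers.ofLines())
                .thenAccept(response -> {
                    // print, not println, so the fragments run together as text
                    response.body()
                            .map(OllamaStreamSketch::extractFragment)
                            .forEach(System.out::print);
                    System.out.println(); // finish with a single newline
                });
    }
}
```

Calling `OllamaStreamSketch.generate("llama3", "Why is the sky blue?").join()` from a main method or a test should block until the whole answer has been printed, assuming Ollama is running and the llama3 model has been pulled.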

The important parts are the sendAsync method, which returns a CompletableFuture; the Accept header, which is set to text/event-stream (that may not be necessary); the ofLines method on BodyHandlers, which delivers the response body as a Stream<String> with one element per returned line; and the printing, where I use “print” rather than “println” for each element until the last one.

I’m probably going to make a video about this, but here’s the test case:

and here’s the reply I got back:

According to the text of J.R.R. Tolkien's The Lord of the Rings, Frodo was not physically able to fly on a eagle due to his weakened condition from the Ring curse. Additionally, the story emphasizes the importance of using feet and legs to travel long distances, which is why Samwise Gamgee (Frodo's faithful companion) was sent ahead of him with the orders to gather supplies and then meet Frodo at the Black Gate of Mordor. Ultimately, Frodo's physical limitations and the need for caution led him to use a pack to carry his supplies instead of flying on an eagle.

I’m not sure I buy that, but it’s an answer, in case you were wondering.

Tweets and Toots

Venn diagram

I don’t think the situation is quite that bad, but I laughed anyway.

i^i is the loneliest number

That’s the sort of math I used to do in the old days … before the Dark Times; before the Empire. I suppose, though, you can take the math out of the nerd job, but you can’t take the math out of the nerd. That’s still a really easy demo for me to follow.
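For anyone who wants it spelled out, the usual argument goes like this, taking the principal value of the complex power (an assumption about which branch the demo uses):

$$
i = e^{i\pi/2} \quad\Longrightarrow\quad i^i = \left(e^{i\pi/2}\right)^i = e^{i^2\pi/2} = e^{-\pi/2} \approx 0.2079
$$

The other branches of the logarithm give $e^{-\pi/2 - 2\pi k}$ for integer $k$, and every one of those values is real as well.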

What occurs to me is that sure, i raised to the i is real, but what happens when you raise one transcendental number (e) to another transcendental number (pi)? Nothing good, I imagine. I’ll be wandering off into Hilbert space now. I’ll let you know when (if?) I get back.

You can make a difference

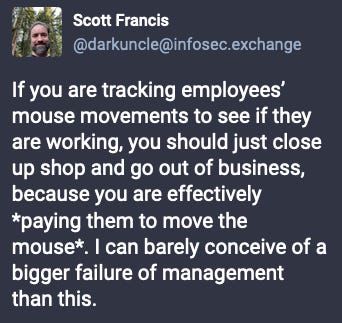

Mouse jiggling

If you don’t get the reference, check out this article, entitled “Wells Fargo Fires Employees Over "Mouse Jiggling". Here's What It Means”. Here’s the important part:

Wells Fargo, one of America's leading banks, has taken strict action against employees caught "mouse jiggling" to fake work.

According to media reports, several remote workers were fired after allegedly simulating keyboard activity to give the impression that they were actively working from home.

Wells Fargo told the BBC that it has strict standards and won't “tolerate unethical behaviour”.

Yeah, right, “unethical”. As though moving or not moving the mouse is indicative of whether an employee is actually working or not. Ugh. The fact that managers are monitoring that is deeply evil.

Software architecture hot tips

I have a few friends I intend to send this to.

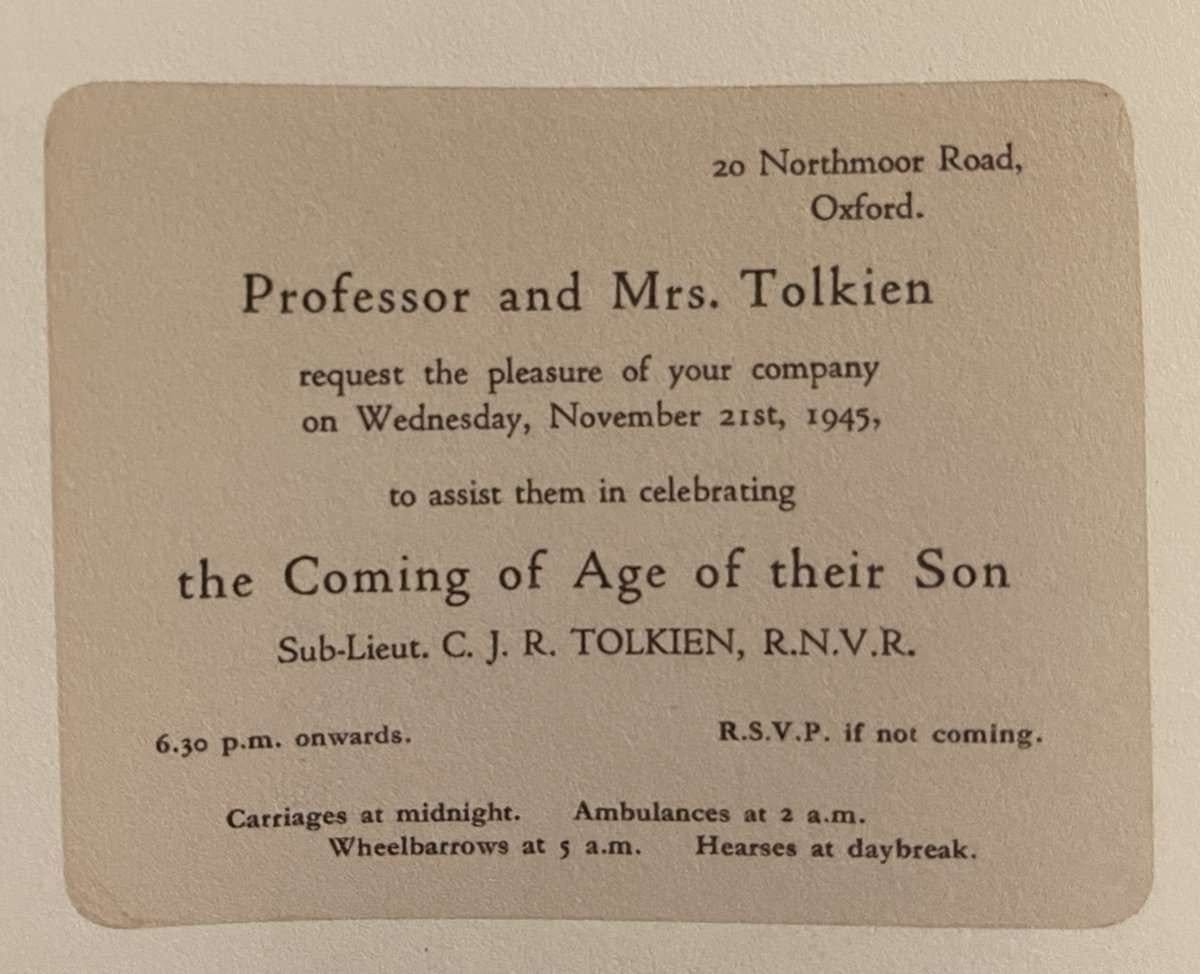

The postscripts make the invitation

Nothing like covering all the possibilities.

Have a drink

I think I’ll just leave that without comment and move on.

And finally, an old gag, but a good one:

Mac supports Windows

How could I end my newsletter on Father’s Day without a dad joke?

Have a great week, everybody!

Last week:

Week 1 of Spring in 3 Weeks, on the O’Reilly Learning Platform

Practical AI Tools for Java Developers, an NFJS Virtual Workshop

Gradle Fundamentals, an NFJS Virtual Workshop

This week:

Week 2 of Spring in 3 Weeks, on the O’Reilly Learning Platform