Tales from the jar side: Junie vs Claude Code, I finally get what MCP is for, I contribute to LangChain4j, and the usual toots and skeets

You can't just sweep the elephant in the room under the rug. (rimshot)

Welcome, fellow jarheads, to Tales from the jar side, the Kousen IT newsletter, for the week of June 1 - 8, 2025. This week I taught a training course on LangChain4j on the O’Reilly Learning Platform, and did a live stream with Anton Arhipov comparing Claude Code and JetBrains Junie.

Claude Code vs Junie Live Stream

On Monday I joined Anton Arhipov on a live stream from the JetBrains YouTube channel. They use StreamYard as their streaming platform, and so do I, so I was able to share the result on the Tales from the jar side YouTube channel as well.

Here is their recorded video:

We had a good time, but I felt a bit bad about the whole thing. The original plan was to take a project and have each of us modify it: I would use Claude Code, he would use Junie, and then we’d compare the results. As it turned out, I got overenthusiastic and kind of took over, trying to do both, and that didn’t quite work. We had a really fun discussion and accomplished a lot, but it wasn’t the direct comparison we were hoping for.

I also didn’t quite finish the feature we were working on in the time we had. It took me another 5 minutes or so (yeah, we were that close) to fix the last issues and complete it. The result is a brand new feature, an analytics dashboard connected to my Certificate Service. Here’s the service:

Here’s the resulting certificate:

and here’s the new dashboard:

Nice that it’s now working. I managed to deploy it to Heroku, and replaced the in-memory database with a real one. Feel free to take a look if you want.

I’ve already talked to Anton, and we’re probably going to try again. If so, we’ll stick to the plan and split up our changes into separate Junie vs Claude Code updates. I’ll let you know if and when that happens.

I finally get what MCP is for

I mentioned in last week’s newsletter that I’ve jumped on the Model Context Protocol (MCP) bandwagon for AI. The idea is that MCP gives LLMs (large language models, like Claude, ChatGPT, or Gemini) a way to talk to external tools or even other AIs. The example I talked about last week came from a blog post by Daniela Petruzalek, where she got her own computer to answer like the computer on the Starship Enterprise.

Her: Computer, run a Level 1 diagnostic.

Computer: <outputs data about memory, processes, etc.>

It all worked based on a utility called osquery, which she wrapped inside an MCP server. The LLM would convert her requests into relevant calls for the MCP server and summarize the responses. I managed to do the same using Spring AI. You can find my MCP wrapper for osquery here. That’s grown a bit in complexity as I added more and more features, but it’s basically the same idea she used. I also added an MCP client based on JavaFX, but I haven’t published that yet.
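The core of such a wrapper is small. Here is a minimal sketch in plain Java. It is not the Spring AI code linked above, and it skips the MCP plumbing entirely. It assumes `osqueryi` (osquery’s interactive shell) is on the PATH and relies on its `--json` output flag. The class and method names are hypothetical. In a real MCP server, a method like `query` would be registered as a tool, and the LLM would fill in its SQL argument.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.List;

// Hypothetical sketch: shell out to osqueryi and hand its JSON output back.
// Assumes osqueryi is installed and on the PATH; no MCP or Spring AI here.
class OsqueryTool {

    // Build the command line for one SQL query; --json asks osqueryi for JSON rows.
    static List<String> buildCommand(String sql) {
        return List.of("osqueryi", "--json", sql);
    }

    // Run the query and return stdout, which the LLM would then summarize.
    static String query(String sql) throws IOException, InterruptedException {
        Process process = new ProcessBuilder(buildCommand(sql))
                .redirectErrorStream(true) // fold stderr into stdout so errors reach the model too
                .start();
        // readAllBytes returns at end of stream, i.e. once osqueryi closes its output
        String output = new String(process.getInputStream().readAllBytes(), StandardCharsets.UTF_8);
        int exitCode = process.waitFor();
        if (exitCode != 0) {
            throw new IOException("osqueryi exited with " + exitCode + ": " + output);
        }
        return output;
    }

    public static void main(String[] args) throws Exception {
        // The kind of SQL an LLM might generate for "what's using my memory?"
        System.out.println(query(
                "SELECT name, resident_size FROM processes ORDER BY resident_size DESC LIMIT 5;"));
    }
}
```

Passing the command as a list to `ProcessBuilder` avoids going through a shell, so the SQL arrives as a single argument no matter what quotes it contains.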

This week, however, it finally dawned on me what the real purpose of all this MCP stuff is:

MCP allows you to put a conversational AI in front of a complicated service.

(Okay, that’s just one use case, but it’s a really nice one.)

The osquery utility comes with a lot of documentation, but to use it you need to formulate the proper SQL queries. That means you have to know the tables and columns, and any quirks in the SQL flavor you need to use. I like the results, but who wants to dig that deep into the docs just to ask simple questions?

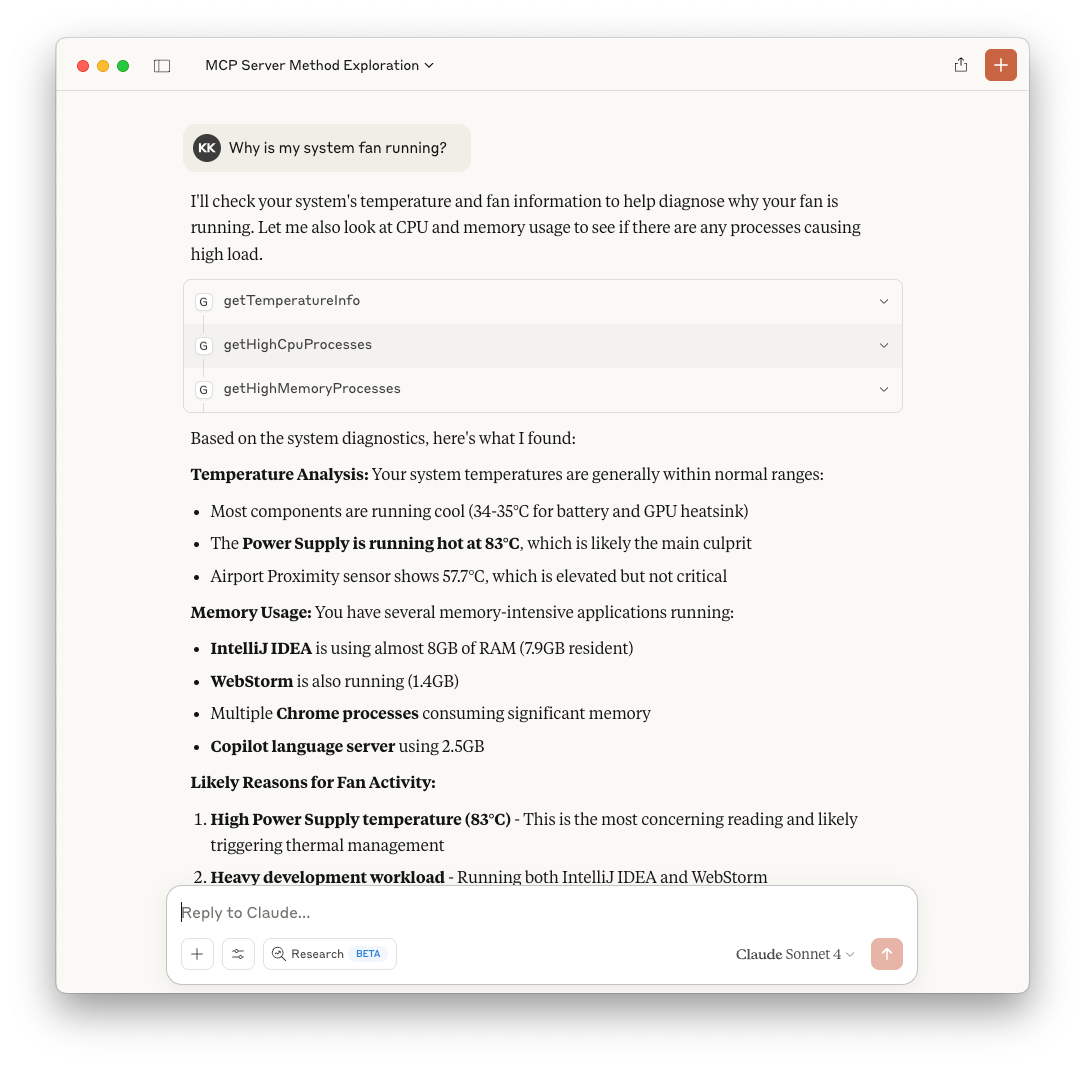

By wrapping the utility inside an MCP server, I can make it accessible in any client, like Claude Desktop, and then just ask simple questions:

My question is, “Why is my system fan running?” Claude then makes three separate queries to the osquery wrapper, using the appropriate SQL, and summarizes the results. It’s really easy.

I know MCP services have more uses than that, but that’s huge, at least to me. In fact, I wrote another wrapper this week.

Many MCP examples use GitHub’s official MCP server. When you look at how to set it up, the docs give you the following JSON block:

```json
{
  "mcpServers": {
    "github": {
      "command": "docker",
      "args": [
        "run",
        "-i",
        "--rm",
        "-e",
        "GITHUB_PERSONAL_ACCESS_TOKEN",
        "ghcr.io/github/github-mcp-server"
      ],
      "env": {
        "GITHUB_PERSONAL_ACCESS_TOKEN": "<YOUR_TOKEN>"
      }
    }
  }
}
```

This is somewhat problematic for two reasons:

I don’t like putting personal access tokens inside unencrypted files, even if they’re not exposed to the internet.

The service runs in a Docker container, and Docker can be quite the resource hog.

I found a way around both issues. There’s a utility you can install locally called gh, which is GitHub’s command line tool. Here’s an image from their home page:

The idea is that anything you would normally do with GitHub, like raising issues, creating pull requests, managing repositories, and so on, you can do with gh. You normally need to do some authentication, which is easy enough to do using

```shell
$ gh auth login
```

and it all works from there. It occurred to me that rather than running the official GitHub MCP server through Docker, I could just wrap the local gh command in an MCP server of my own.

I did that, again with Spring AI, and this is the result. Now I can have Claude Desktop (or any other MCP client) access GitHub using gh locally, already authenticated (I did that at the command line), and without needing docker at all.
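Here is a hypothetical sketch of the same pattern for gh, again in plain Java rather than the Spring AI project linked above. It assumes `gh` is on the PATH and was already authenticated with `gh auth login`. It uses `gh search commits` with its `--author` and `--repo` flags as the single example tool. The names `GhTool` and `searchCommitsCommand` are made up for illustration.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.List;

// Hypothetical sketch of wrapping the local gh CLI as an MCP-style tool.
// Assumes gh is on the PATH and already authenticated with `gh auth login`,
// so no personal access token ever appears in a config file.
class GhTool {

    // One tool = one fixed gh subcommand. The LLM only supplies the parameters,
    // so it can't run arbitrary gh commands.
    static List<String> searchCommitsCommand(String author, String repo) {
        return List.of("gh", "search", "commits", "--author", author, "--repo", repo);
    }

    // Run a gh command (no shell involved) and return its output for the LLM to summarize.
    static String run(List<String> command) throws IOException, InterruptedException {
        Process process = new ProcessBuilder(command).redirectErrorStream(true).start();
        String output = new String(process.getInputStream().readAllBytes(), StandardCharsets.UTF_8);
        int exitCode = process.waitFor();
        if (exitCode != 0) {
            throw new IOException("gh exited with " + exitCode + ": " + output);
        }
        return output;
    }

    public static void main(String[] args) throws Exception {
        // Hypothetical username; replace it with a real GitHub login.
        System.out.println(run(searchCommitsCommand("your-github-username", "apache/groovy")));
    }
}
```

Exposing one fixed subcommand per tool, rather than a generic “run any gh command” tool, keeps the LLM from doing anything you didn’t explicitly allow.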

What would I do with that? This week I needed to find out when my single contribution to the open source Groovy project occurred, and I wanted Claude to figure it out for me.

That brings me to:

My first contribution to open source in over a decade

Last week I talked about how I needed to demonstrate streaming responses from AI tools and ran into thread-related issues. What I mean is, I wanted to pass a simple query like “Why is the sky blue?” to, say, GPT-4.1 nano, and see the tokens as they arrived instead of waiting for the entire response to complete. In a recent blog post, I wrote about doing that with Spring AI three different ways.

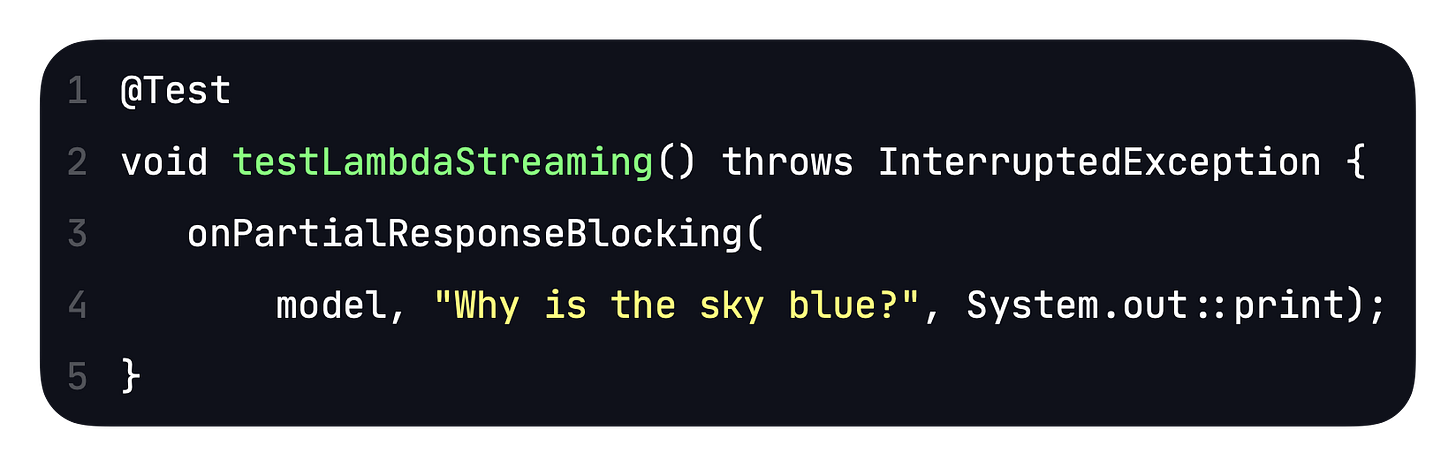

As it happens, the mechanism using LangChain4j is not quite as straightforward. Their sample code for handling streaming responses looks like this:

The second argument to chat is an anonymous inner class that implements the StreamingChatResponseHandler interface. Each token goes through the onPartialResponse method. When the response is done, it calls onCompleteResponse, and if something goes wrong, it calls onError.

The thing is, that runs into the same threading problem I mentioned in my blog post: if I run this test, the test exits before the response comes back. Since the query is running on a separate thread, the test doesn’t know to wait for it to complete.
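To make the threading problem concrete without pulling in LangChain4j, the sketch below uses two stand-ins. The first is a hypothetical `TokenHandler` interface with the same three callbacks. The second is a hypothetical `FakeStreamingModel` that emits canned tokens from a background thread. Neither is a LangChain4j type; the real complete-response callback receives a `ChatResponse`, not a `String`. The latch is counted down on completion or error, and `await` keeps the calling thread alive until then.

```java
import java.util.List;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;

// Stand-in for a streaming handler: same three callbacks, simplified types.
interface TokenHandler {
    void onPartialResponse(String token);
    void onCompleteResponse(String fullText);
    void onError(Throwable error);
}

// Hypothetical fake model: streams canned tokens from its own thread,
// the way a real streaming model calls back on a different thread.
class FakeStreamingModel {
    void chat(String message, TokenHandler handler) {
        new Thread(() -> {
            StringBuilder full = new StringBuilder();
            for (String token : List.of("Rayleigh ", "scattering", ".")) {
                full.append(token);
                handler.onPartialResponse(token);
            }
            handler.onCompleteResponse(full.toString());
        }).start(); // chat() returns immediately, before any token arrives
    }
}

class LatchDemo {
    static String streamAndWait(FakeStreamingModel model, String message) throws InterruptedException {
        CountDownLatch done = new CountDownLatch(1);
        StringBuilder received = new StringBuilder();
        model.chat(message, new TokenHandler() { // anonymous inner class, as in the docs' style
            @Override public void onPartialResponse(String token) {
                System.out.print(token);
                received.append(token);
            }
            @Override public void onCompleteResponse(String fullText) { done.countDown(); }
            @Override public void onError(Throwable error) {
                error.printStackTrace();
                done.countDown(); // release the waiting thread on failure, too
            }
        });
        // Without this await, the calling thread (e.g. a JUnit test) would finish first.
        if (!done.await(10, TimeUnit.SECONDS)) {
            throw new IllegalStateException("timed out waiting for the stream");
        }
        return received.toString();
    }
}
```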

That’s the first case I talked about in my blog post, where I used a CountDownLatch to wait for the thread, and only counted down on it when the response was complete or failed. I realized later that a CompletableFuture could do something similar:
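Here is a minimal sketch of the `CompletableFuture` approach. Like the other examples, it uses hypothetical stand-ins rather than the real LangChain4j types: a `TokenHandler` interface with the three callbacks and a `FakeStreamingModel` that streams from its own thread.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

// Stand-in callback interface (not a LangChain4j type).
interface TokenHandler {
    void onPartialResponse(String token);
    void onCompleteResponse(String fullText);
    void onError(Throwable error);
}

// Hypothetical fake model that streams canned tokens from a background thread.
class FakeStreamingModel {
    void chat(String message, TokenHandler handler) {
        new Thread(() -> {
            StringBuilder full = new StringBuilder();
            for (String token : List.of("Rayleigh ", "scattering", ".")) {
                full.append(token);
                handler.onPartialResponse(token);
            }
            handler.onCompleteResponse(full.toString());
        }).start();
    }
}

class FutureDemo {
    static String streamAndWait(FakeStreamingModel model, String message)
            throws InterruptedException, ExecutionException, TimeoutException {
        CompletableFuture<String> future = new CompletableFuture<>();
        model.chat(message, new TokenHandler() {
            @Override public void onPartialResponse(String token) { System.out.print(token); }
            // Completing the future carries the full text back to the caller...
            @Override public void onCompleteResponse(String fullText) { future.complete(fullText); }
            // ...and an error completes it exceptionally instead of being lost.
            @Override public void onError(Throwable error) { future.completeExceptionally(error); }
        });
        return future.get(10, TimeUnit.SECONDS); // blocks until complete, failed, or timed out
    }
}
```

Compared with the latch, the future also carries the result or the exception back to the caller. `get` either returns the full text or rethrows the failure wrapped in an `ExecutionException`.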

That works, but it’s getting complicated. The LangChain4j people offered a simplification in their docs:

where the onPartialResponse method is a static method in the LambdaStreamingResponseHandler class. Unfortunately, that test exits without a result, too. You either have to have a process that stays running for a while, or you need to add a lock or a future and manage things manually again.
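Why does the lambda version still exit early? Here is a hypothetical analog of that kind of static factory. It is written against a stand-in `TokenHandler` interface and is not LangChain4j’s implementation. It adapts a `Consumer<String>` into a full handler, but nothing in it tells the caller when the stream has finished.

```java
import java.util.function.Consumer;

// Stand-in callback interface (not a LangChain4j type).
interface TokenHandler {
    void onPartialResponse(String token);
    void onCompleteResponse(String fullText);
    void onError(Throwable error);
}

// Hypothetical analog of a lambda-friendly factory: wrap a Consumer<String>
// in a full handler so callers can pass a lambda instead of an anonymous class.
final class LambdaHandlers {
    private LambdaHandlers() {}

    static TokenHandler onPartialResponse(Consumer<String> onToken) {
        return new TokenHandler() {
            @Override public void onPartialResponse(String token) { onToken.accept(token); }
            // Nothing here signals the caller, so the caller has no way to wait for the end.
            @Override public void onCompleteResponse(String fullText) { }
            @Override public void onError(Throwable error) { error.printStackTrace(); }
        };
    }
}
```

Passing `LambdaHandlers.onPartialResponse(System.out::print)` to a streaming model’s `chat` method reads nicely. However, `chat` still returns immediately, so a test method reaches its end before the tokens arrive.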

That’s all a build-up to my new implementation. I added two new methods to LambdaStreamingResponseHandler that use a CountDownLatch internally:

```java
static void onPartialResponseBlocking(
        StreamingChatModel model,
        String message,
        Consumer<String> onPartialResponse)
        throws InterruptedException;

static void onPartialResponseAndErrorBlocking(
        StreamingChatModel model,
        String message,
        Consumer<String> onPartialResponse,
        Consumer<Throwable> onPartialResponseAndError)
        throws InterruptedException;
```

Now I can just call those methods, and the system waits for them to be done:

That does the trick, because the implementation blocks for me with a CountDownLatch.
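The blocking idea can be sketched with hypothetical stand-ins: a `TokenHandler` callback interface and a `StreamingModel` interface with a `chat(message, handler)` method. This illustrates the technique; it is not the code that was merged into LangChain4j, and `streamBlocking` is a made-up name.

```java
import java.util.List;
import java.util.concurrent.CountDownLatch;
import java.util.function.Consumer;

// Stand-in types (not LangChain4j's).
interface TokenHandler {
    void onPartialResponse(String token);
    void onCompleteResponse(String fullText);
    void onError(Throwable error);
}

interface StreamingModel {
    void chat(String message, TokenHandler handler);
}

final class BlockingStreams {
    private BlockingStreams() {}

    // Stream tokens to onToken, route failures to errorHandler,
    // and return only after the stream completes or fails.
    static void streamBlocking(StreamingModel model, String message,
                               Consumer<String> onToken, Consumer<Throwable> errorHandler)
            throws InterruptedException {
        CountDownLatch done = new CountDownLatch(1);
        model.chat(message, new TokenHandler() {
            @Override public void onPartialResponse(String token) { onToken.accept(token); }
            @Override public void onCompleteResponse(String fullText) { done.countDown(); }
            @Override public void onError(Throwable error) {
                try {
                    errorHandler.accept(error);
                } finally {
                    done.countDown(); // never leave the caller stuck, even if errorHandler throws
                }
            }
        });
        done.await(); // the caller's thread parks here until complete or error
    }

    // Convenience overload with a single callback: errors are just printed.
    static void streamBlocking(StreamingModel model, String message, Consumer<String> onToken)
            throws InterruptedException {
        streamBlocking(model, message, onToken, Throwable::printStackTrace);
    }
}

class BlockingDemo {
    public static void main(String[] args) throws InterruptedException {
        // A fake model that streams from its own thread, like a real one would.
        StreamingModel fake = (message, handler) -> new Thread(() -> {
            for (String token : List.of("Rayleigh ", "scattering", ".")) handler.onPartialResponse(token);
            handler.onCompleteResponse("Rayleigh scattering.");
        }).start();
        BlockingStreams.streamBlocking(fake, "Why is the sky blue?", System.out::print);
        System.out.println(); // runs only after the last token has printed
    }
}
```

Note that `await()` has no timeout here, so a model that never calls back would block forever. `await(long, TimeUnit)` is the safer choice if that’s a concern.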

I decided to contribute that back to the LangChain4j project, which took some doing. Eventually I ran through the required steps:

Fork the LangChain4j repository.

Clone it locally.

Implement my fix and add tests to prove it worked.

Commit everything and push it to my fork.

Issue a pull request.

Wait for it to be approved.

As it happened, there was a step I didn’t know about: the project uses a code formatter called Spotless, and my code didn’t match the formatting of the rest of the project. Eventually I got that fixed, and now I’ve made an actual contribution to the LangChain4j source code.

(Now I’m not sure if I should have used CompletableFuture instead of CountDownLatch, so the methods would not have needed to throw an InterruptedException. Or I could have caught the InterruptedException in my code. Oh well. If you’ve actually read this far and have an opinion, please let me know.)
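On the `CompletableFuture` question, one option is to return the future and let the caller decide how to wait. The sketch below uses hypothetical stand-ins (`TokenHandler`, `StreamingModel`) rather than LangChain4j types, and `FutureStreams.stream` is a made-up name.

```java
import java.util.concurrent.CompletableFuture;
import java.util.function.Consumer;

// Stand-in types (not LangChain4j's).
interface TokenHandler {
    void onPartialResponse(String token);
    void onCompleteResponse(String fullText);
    void onError(Throwable error);
}

interface StreamingModel {
    void chat(String message, TokenHandler handler);
}

final class FutureStreams {
    private FutureStreams() {}

    // Returns a future instead of blocking, so the method itself declares
    // no checked InterruptedException.
    static CompletableFuture<String> stream(StreamingModel model, String message, Consumer<String> onToken) {
        CompletableFuture<String> future = new CompletableFuture<>();
        model.chat(message, new TokenHandler() {
            @Override public void onPartialResponse(String token) { onToken.accept(token); }
            @Override public void onCompleteResponse(String fullText) { future.complete(fullText); }
            @Override public void onError(Throwable error) { future.completeExceptionally(error); }
        });
        return future;
    }
}
```

A caller who wants blocking behavior calls `join()`, which throws the unchecked `CompletionException` on failure instead of a checked exception. The trade-off is that `join()` does not throw when the waiting thread is interrupted, so an interrupt can’t cut a stuck stream short. `get` with a timeout is still available when that matters.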

It’s been a long time since my last open source contribution. With the help of that gh MCP service, I eventually got Claude to track it down. My other contribution was adding a JsonBuilder to the Groovlets API (whoa) back in March of 2012.

Hey, one contribution every dozen years or so, I guess.

Toots and Skeets

A plague on both your houses

I’m not going to say much about the Trump vs Elon feud. I hope they tear each other apart indefinitely, but it’s all really just a distraction, whether they’re doing it individually or together.

I’ll just point out one thing. The political left turned on Elon when it became obvious he was a Nazi. Now Elon is attacking Trump in public, which means the political right is turning on him, too. That may very well make him the Most Hated Person in America, which is quite an achievement given the competition.

He’s clearly hoping to outlast (or maybe outlive) Trump in public life, and he may be right. It’s all going to be pretty ugly in the process. I’m also convinced the Tesla brand will never recover. We’ll see in the coming weeks how SpaceX and Twitter hold up.

Phrasing

So every time he decides to clean his glasses…?

Sweet!

Works for me.

Junior Devs

That’s partly why I’m a computer science professor now. One side effect is that I realized if I’m going to tell students how the open source world works, I should at least make a contribution myself. Hence the LangChain4j section above.

Poor kitty

Attack!

With or without you

That joke works a lot better in text than out loud.

Unfinished symphony

4 out of 5 dentists, too.

Good way to celebrate

They always get you on the shipping and handling.

Our backyard

My wife is hosting the church’s Music Board meeting tonight, and this is how I imagine it going.

I think I’ll stay in my office and watch basketball. If the walls start bleeding, I’ll let you know.

Have a great week, everybody. :)

Last week:

LangChain4j, on the O’Reilly Learning Platform

Junie Live Stream, with Anton Arhipov

This week:

Integrating AI into Java, on the O’Reilly Learning Platform

Can Junie read Word .docx files?