Tales from the jar side: Jarvis, the Tftjs robot mascot, Stable Cascade images, LangChain4J tools, Air Canada learns an AI lesson, and the usual silly tweets and toots

I ran out of food and had to steal from my neighbor's herb garden. Now I'm living on borrowed thyme (rimshot)

Welcome, fellow jarheads, to Tales from the jar side, the Kousen IT newsletter, for the week of February 11 - 18, 2024. This week I taught my Deep Dive Into Spring and my Modern Java Functional Programming courses as NFJS Virtual Workshops.

Here are the regular info messages:

Regular readers of, listeners to, and video viewers of this newsletter are affectionately known as jarheads, and are far more intelligent, sophisticated, and attractive than the average newsletter reader or listener or viewer. If you wish to become a jarhead, please subscribe using this button:

As a reminder, when this message is truncated in email, click on the title to open it in a browser tab formatted properly for both the web and mobile.

Jarvis, the Tftjs Robot Mascot

I was going to talk about the new AI text-to-image generator known as Stable Cascade here, and I will, but when I decided to compare its output with DALL-E 3, I wound up generating the above image. The prompt was, “Draw a 16x9 image of a happy robot reading the ‘Tales from the jar side’ newsletter on a laptop,” and the caption to the image shows the “re-imagined” prompt GPT-4 came up with when generating the result. I’m really impressed it got the words (mostly) right, other than the title newshet, which is probably some kind of mishmash of the word newsletter. Hey, I’ll take it.

I then asked GPT-4 to come up with a name:

Me: I need a name for that robot. What do you think?

GPT-4: How about naming the robot "Jarvis"? It's a playful nod to the "Tales from the jar side" newsletter, suggesting intelligence and helpfulness, much like a personal assistant. Plus, it has a friendly and accessible sound to it.

Of course, the problem with that is we already know about a robot named Jarvis, from the Iron Man / Avengers movies, who eventually evolved into the character named Vision. Then again, the Tftjs Jarvis bears about as much resemblance to movie Jarvis as I do to Tony Stark.

I’m afraid “over-educated, thousandaire, married Patreon supporter” doesn’t quite have the same ring to it. I’m going with it anyway, and with Jarvis as well. Welcome aboard!

Stable Cascade

This week I watched a video by Matt Wolfe about the new Stable Cascade AI. His video was called How To Make Professional Logos with AI (For Free). You log into Hugging Face (seriously, that’s the name of the site), go to the Stable Cascade page, select a free space (I’ve been going to multimodalart) to experiment with, and add a prompt.

I tried, “A logo of a happy robot drinking coffee while reading the ‘Tales from the jar side’ newsletter,” and here is what I got:

Not really what I had in mind, but not terrible, either. Certainly better than this attempt (with the same prompt):

Um, no, comma, a world of. The word jar appears to be taken a bit too literally here, among other massive problems. I’ll keep trying, or maybe not. We’ll see.

LangChain4J Tools

My next video is about using the tool support in LangChain4J. The idea is to provide the AI model with a class containing methods it can call whenever it needs them. The sample from the langchain4j-examples project uses a Calculator class with a method called add (among others):

The Assistant calls the add method in the Calculator when it needs it. That’s really cool.
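To make the idea concrete without pulling in the library, here is a minimal, library-free sketch of what "a class of methods the model can call" amounts to. This is not the langchain4j-examples code. LangChain4J marks tool methods with its own @Tool annotation; the @ToolMethod annotation and the ToolDispatcher class below are hypothetical stand-ins I made up so the example compiles on its own. The dispatcher plays the part of the framework: given a tool name and arguments (which in real life come from the model), it finds the matching annotated method and invokes it.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;

// Hypothetical stand-in for a library's tool annotation (not LangChain4J's own).
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface ToolMethod {
    String value(); // human-readable description of the tool
}

// The "tools": ordinary methods, marked so a framework can discover them.
class Calculator {
    @ToolMethod("Adds two integers")
    public int add(int a, int b) {
        return a + b;
    }

    @ToolMethod("Subtracts b from a")
    public int subtract(int a, int b) {
        return a - b;
    }
}

// Hypothetical dispatcher: looks up an annotated method by name and calls it.
class ToolDispatcher {
    static int call(Object tools, String name, int a, int b) {
        for (Method m : tools.getClass().getDeclaredMethods()) {
            if (m.isAnnotationPresent(ToolMethod.class) && m.getName().equals(name)) {
                try {
                    return (Integer) m.invoke(tools, a, b);
                } catch (ReflectiveOperationException e) {
                    throw new IllegalStateException("Tool call failed: " + name, e);
                }
            }
        }
        throw new IllegalArgumentException("No tool named " + name);
    }
}

class ToolDemo {
    public static void main(String[] args) {
        // In a real setup the model picks the tool name and the arguments.
        System.out.println(ToolDispatcher.call(new Calculator(), "add", 2024, 281)); // 2305
    }
}
```

Notice what the dispatcher does not do: it never checks whether add was the right tool or whether the arguments make sense. That choice is left entirely to whoever is asking.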

Unfortunately, an AI is still an AI, and that means it approaches toddler levels of unpredictability. You can give an AI a tool to use, but there’s no guarantee it will use it properly, or at all.

For example, I have code that asks OpenAI to extract the first name, last name, and date of birth for a Person record from a block of text. The query is similar to:

Captain Picard was born in La Barre, France on Earth
on the 13th of juillet, 281 years in the future.
His given name, Jean-Luc, is of French origin. He and his brother
Robert were raised in the family vineyard Chateau Picard.

The result I want, of course, is Jean-Luc Picard, born 2305-07-13 (in ISO 8601 standard format). Sometimes that’s what I actually get, depending on the model, the time of day, whether the AI actually slept at nap time, etc. But often I get something like this:

com.kousenit.services.Calculator - Called subtract with a=281, b=2023
com.kousenit.services.Calculator - Called add with a=7, b=13
Person[firstName=Jean-Luc, lastName=Picard, birthDate=1742-07-20]

That happened the last time I called it with the gpt-3.5-turbo model. It called both the subtract and the add methods, as it should, but why it decided to subtract 281 from 2023 (rather than add them) is a mystery. It also saw the numbers 7 (presumably for July) and 13 in the passage and decided to add them as well. That gave it both the wrong day of the month and a year that’s off by 563. Yikes.
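For the record, the arithmetic involved is tiny. Here is a quick sketch in plain Java, no AI involved, that puts the intended calculation next to the one the log output suggests the model performed. The class and method names are made up for illustration, and I'm assuming "now" means 2024, the year of this newsletter, since that is what makes 2305 come out.

```java
import java.time.LocalDate;
import java.time.Month;

class BirthDateArithmetic {
    // Assumption: "now" is the year this newsletter was written.
    static final int CURRENT_YEAR = 2024;

    // What the passage asks for: 281 years in the future, on the 13th of July.
    static LocalDate expectedBirthDate() {
        return LocalDate.of(CURRENT_YEAR + 281, Month.JULY, 13);
    }

    // What the logged tool calls imply: 281 and 2023 were subtracted
    // (ending up at 1742), and 7 and 13 were added to get the day.
    static LocalDate reportedBirthDate() {
        int year = 2023 - 281; // 1742
        int day = 7 + 13;      // 20
        return LocalDate.of(year, Month.JULY, day);
    }

    // How far apart the two years are.
    static int yearGap() {
        return expectedBirthDate().getYear() - reportedBirthDate().getYear();
    }

    public static void main(String[] args) {
        System.out.println(expectedBirthDate()); // 2305-07-13
        System.out.println(reportedBirthDate()); // 1742-07-20
        System.out.println(yearGap());           // 563
    }
}
```

Both tool calls were executed correctly. The mistakes are all in which operation was chosen and which numbers were fed to it.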

The thing is, that result was not repeatable. The next time I run it, the result could be completely different. It might even be right, or it could be wildly off in a different way. Just because I gave it a calculator to help it add and subtract doesn’t mean it understands anything about it.

I’m reminded of how some kids do their homework in a middle school math class. They search for a formula that might help, and then plug in any numbers they find from the original question, whether they make sense or not. The teacher gets annoyed for the exact same reason we do here — the AI knows it should use a tool, but has no actual understanding of how or why.

I asked DALL-E 3 to design an image that shows the parrot logo from LangChain4J misusing some tools, and got this:

That works, and I think it’ll be the thumbnail for my next video. More about that next week.

Incidentally, when I change the model to the latest version of GPT-4, it gets the right answer, within a couple of years, more often than not. Sometimes much more often. Maybe this problem will go away with time. On the other hand, it’s a great demonstration that while AI tools can extract information from unstructured data, they don’t actually have any idea what they’re doing.

Air Canada Learns A Lesson about AI

You might have noticed this news item, entitled Air Canada found liable for chatbot’s bad advice on plane tickets.

A grieving grandchild (Jake Moffatt) wanted to book a flight on Air Canada but wasn’t sure about their refund policy. Air Canada had a chatbot on its website, and when Moffatt asked the chatbot about bereavement flights, it claimed he could submit his ticket after the fact for a reduced rate within 90 days of the date it was issued. He then went ahead and booked a full-fare ticket and submitted for a refund later.

As it turned out, Air Canada doesn’t have a policy like that. The chatbot made it up. When Moffatt submitted his ticket for reimbursement, they denied the refund. When they wouldn’t listen to reason, he was forced to sue, and this week the courts mandated that the airline owed him compensation. Air Canada had the temerity to argue that the online tool was “a separate legal entity that is responsible for its own actions.”

Lol, no. I talked about this in my video on custom GPTs: When your own custom GPT won’t behave:

In other words, you brand it, you bought it. (I wish I’d thought of that phrase when I made the video, but I’m adopting it from now on.) If an AI has your name on it, people are going to assume you agree with whatever it says, for good or ill.

Of course, the real scandal here is the appalling arrogance of Air Canada refusing to reimburse a grieving grandchild. If they’d had any decency at all, they would have paid the kid and taken down the bot afterwards, as they’ve done now. But no, they preferred to be evil and try to retain an amount of money that wouldn’t pay for an hour of their operations, instead of doing the right thing. To say the judge was not amused is an understatement.

Tweets and Toots

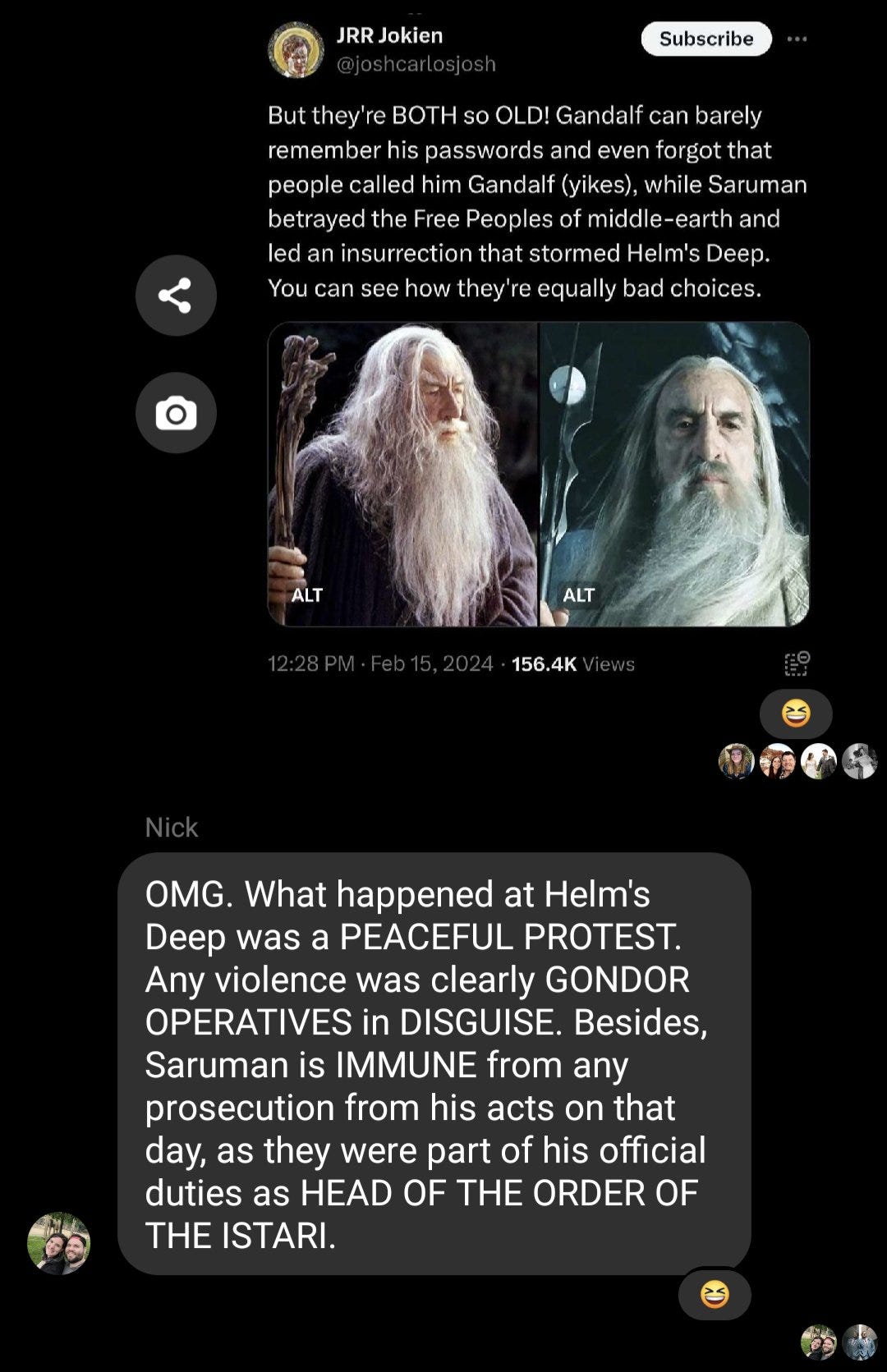

But they’re BOTH so OLD

Saruman might need to sell the One Ring to pay for all those judgements against him. Think Sauron will go along with that?

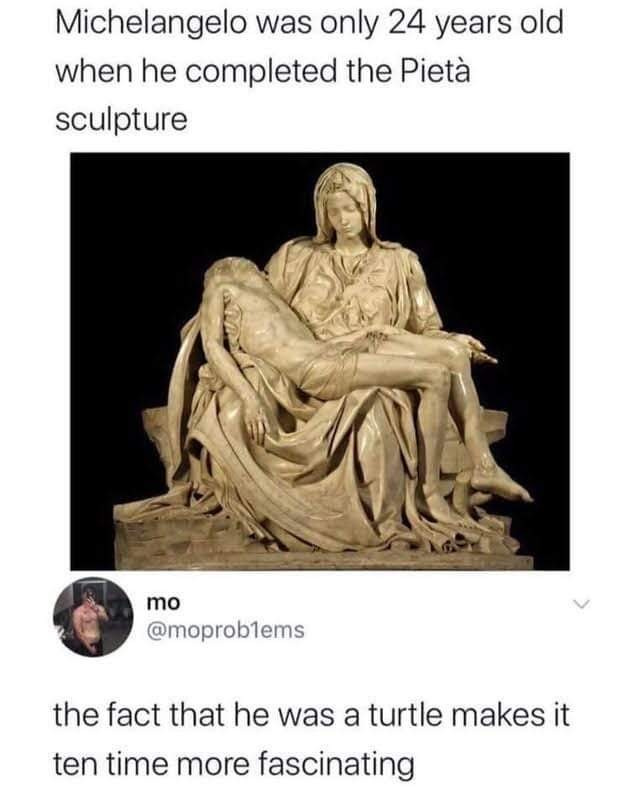

Easy mistake to make

Could happen to anybody.

Leonardo would like a word

Subtle differences

I like this revision of the old gag that you can tell them apart because the alligator you see later, but the crocodile you see after a while.

Pop-Tarts

I meant to include in the previous newsletter that the inventor of the Pop-Tart, William Post, passed away last week at the age of 96. See? Despite what you might have heard, all those preservatives in Pop-Tarts lead to a long, happy life.

Also, Frosted Brown Sugar Cinnamon Pop-Tarts rule. I will not be taking any questions at this time.

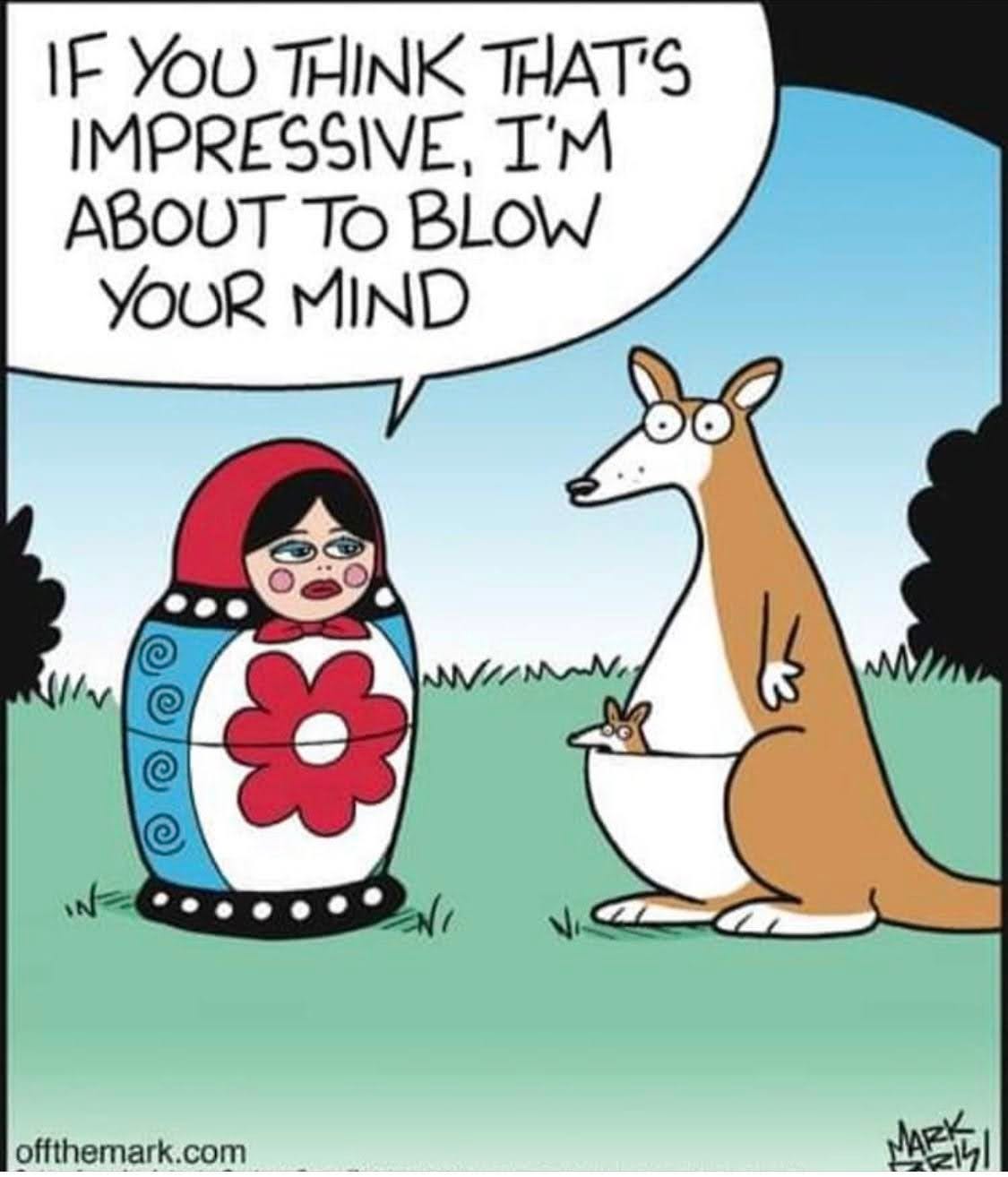

Impressive

Is it just me, or shouldn’t she be saying that in Russian?

Super Bowl follow-up

That’s a good one. I’ve taken to calling them conservadorks, which feels more gender-neutral.

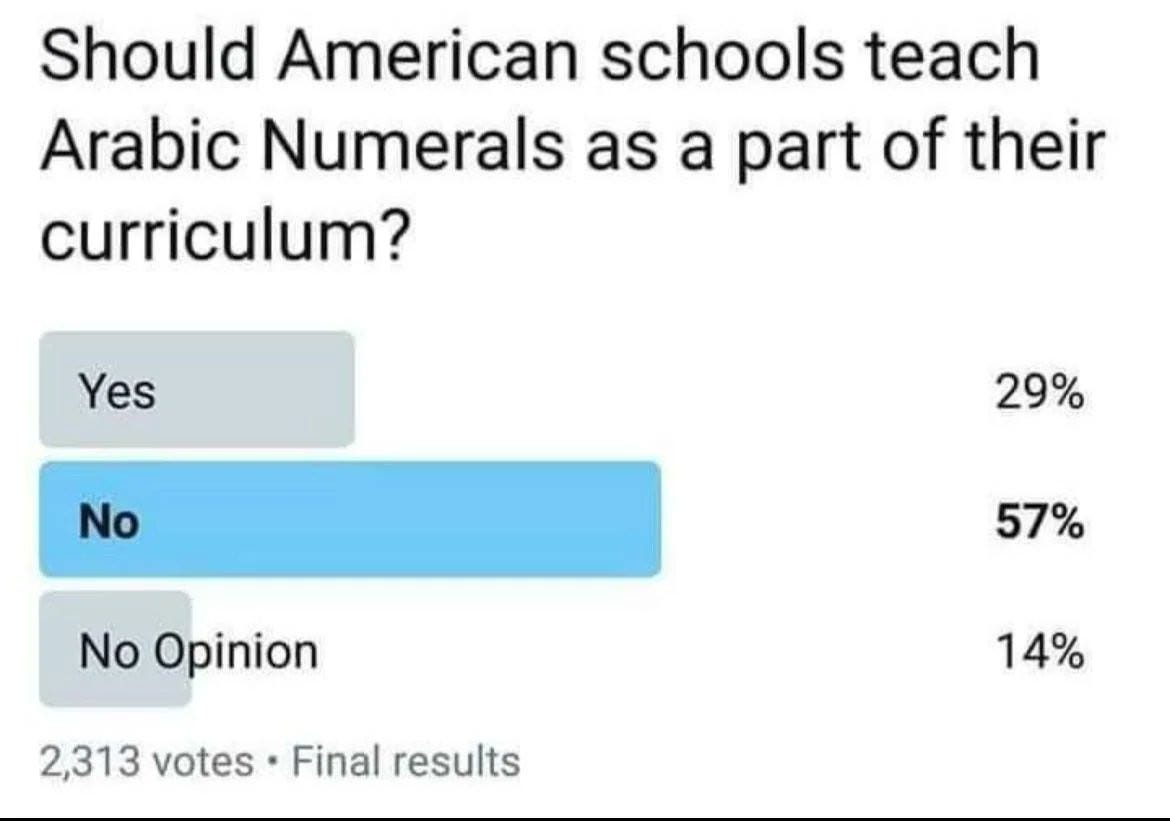

Hurry, call IX-I-I!

This has been making the rounds in various forms for decades, but it still hits home. I sometimes wonder if anybody actually did that survey, or if they just like the gag.

I think this is a good joke…

… but it might not be. Heisenberg isn’t sure.

Finally, a dad joke

A very old gag, but it still works.

Have a great week!

The video version of this newsletter will be on the Tales from the jar side YouTube channel tomorrow.

Last week:

Deep Dive Into Spring, an NFJS Virtual Workshop

Modern Java Functional Programming, ditto

This week:

No classes. That means I really ought to get my next video done, other than the newsletter video that comes out tomorrow.

My Trinity College (Hartford) class on Large Scale and Open Source Software is Tuesday night, with a guest lecture by the inimitable Paul King, head of the Apache Groovy project and all-around awesome dude.