Tales from the jar side: GPT-4o and Vision, Gemini 1.5 Flash, NFJS Madison, A JetBrains live stream, and the usual tweets and toots

I love dried fruit so much you might say it's my raisin d'être (rimshot, h/t @tarajdactyl@anarres.family on Mastodon)

Welcome, fellow jarheads, to Tales from the jar side, the Kousen IT newsletter, for the week of May 12 - 19, 2024. This week I gave several talks at the NFJS event in Madison, Wisconsin.

Regular readers of, listeners to, and video viewers of this newsletter are affectionately known as jarheads, and are far more intelligent, sophisticated, and attractive than the average newsletter reader or listener or viewer. If you wish to become a jarhead, please subscribe using this button:

As a reminder, when this message is truncated in email, click on the title to open it in a browser tab formatted properly for both the web and mobile.

OpenAI Releases GPT-4o

This week in AI featured big announcements. Everybody knew Google I/O started on Tuesday, so naturally OpenAI wanted to steal their thunder by announcing new products before that.

On Monday, they released GPT-4o, where the “o” stands for “omni”. The idea is that their model can handle text, images, and audio all together, and the demo video they showed was very impressive. That’s all well and good, but this field is filled with hype, and the resulting products often can’t match the promises. So for me, the real question was: what’s available right now? They can promise all they want for the future, but I’m not trusting anything until I can try it out.

Fortunately, when I went to the ChatGPT web site (which now redirects to chatgpt.com, in case you didn’t notice), GPT-4o was available immediately.

(I have no idea what a “Temporary chat” is, beyond the name. I assume it doesn’t store the results. I’m not sure what I’d use that for, but hey, it’s an option now.)

A few tests confirmed that it was much faster than GPT-4 and that the answers looked really solid.

The other question I wanted answered was whether this model was available programmatically. After all, I’m working on a book on accessing AI tools from Java (with the working title Adding AI to Java, coming soon from the Pragmatic Bookshelf), so I wanted to update all my examples. I ran my existing test that lists the models, and there it was:

```
gpt-4-turbo
gpt-4-turbo-2024-04-09
gpt-4-turbo-preview
gpt-4-vision-preview
gpt-4o
gpt-4o-2024-05-13
```

Not only was it there, but the cost was literally half that of GPT-4-turbo:

That’s from the OpenAI pricing page. GPT-4o costs half a cent per 1K input tokens and three times that (1.5 cents) per 1K output tokens. By contrast, GPT-4-turbo (the former state of the art) costs 1 cent per 1K input tokens and 3 cents per 1K output tokens. The newer, faster, better model is therefore exactly half the cost of the former champ.
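To make the arithmetic concrete, here is a small Java sketch that applies those per-1K-token prices to a request. The `Pricing` record and the sample token counts are hypothetical, made up for this example; only the prices come from the pricing page as quoted above, and prices change, so check the current page before relying on them.

```java
import java.math.BigDecimal;

public class TokenCost {
    // Hypothetical record: prices in US dollars per 1,000 tokens (as quoted in May 2024)
    record Pricing(String model, BigDecimal inputPer1K, BigDecimal outputPer1K) {
        // Cost of one request = (input tokens * input price + output tokens * output price) / 1000
        BigDecimal cost(long inputTokens, long outputTokens) {
            return inputPer1K.multiply(BigDecimal.valueOf(inputTokens))
                    .add(outputPer1K.multiply(BigDecimal.valueOf(outputTokens)))
                    .divide(BigDecimal.valueOf(1000)); // dividing by 1000 always terminates
        }
    }

    static final Pricing GPT_4O =
            new Pricing("gpt-4o", new BigDecimal("0.005"), new BigDecimal("0.015"));
    static final Pricing GPT_4_TURBO =
            new Pricing("gpt-4-turbo", new BigDecimal("0.01"), new BigDecimal("0.03"));

    public static void main(String[] args) {
        long inputTokens = 10_000;  // sample values, not measurements
        long outputTokens = 2_000;
        for (Pricing p : new Pricing[] {GPT_4O, GPT_4_TURBO}) {
            System.out.println(p.model() + ": $" + p.cost(inputTokens, outputTokens).toPlainString());
        }
    }
}
```

Because both the input and output prices for GPT-4o are exactly half those of GPT-4-turbo, the ratio holds for any mix of input and output tokens, not just for this sample.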

But wait, there’s more.

GPT-4o Vision Model

At the ChatGPT web site, for a long time you’ve been able to click the paperclip icon, upload images or other files, and have GPT-4 analyze them. As I mentioned in last week’s newsletter, a vision model is one that lets you upload an image and ask questions about the picture. I knew GPT-4 had vision capabilities, but if you looked in the developer documentation, it said nothing about them.

That is, until this week. Now if you go to the documentation page, you see this:

The images are uploaded either as a URL (which is fine if the images are on a public website) or as base64-encoded strings. You send them to the same endpoint you use for chat, which looks like this:

```shell
curl https://api.openai.com/v1/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $OPENAI_API_KEY" \
  -d '{
    "model": "gpt-4o",
    "messages": [
      {
        "role": "user",
        "content": [
          {
            "type": "text",
            "text": "What’s in this image?"
          },
          {
            "type": "image_url",
            "image_url": {
              "url": "https://upload.wikimedia.org/wikipedia/commons/thumb/d/dd/Gfp-wisconsin-madison-the-nature-boardwalk.jpg/2560px-Gfp-wisconsin-madison-the-nature-boardwalk.jpg"
            }
          }
        ]
      }
    ],
    "max_tokens": 300
  }'
```

See the “content” field inside the message? For chat, the value is a simple string. Now it’s a JSON array with a text type and an image URL type.
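For images that aren’t on a public website, the other option is a base64-encoded string. A minimal Java sketch, assuming the data-URL form (`data:<mime type>;base64,<encoded bytes>`) is what goes in the `url` field of an `image_url` part, looks like this; the class name, method names, and file argument are hypothetical.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Base64;

public class ImageEncoding {
    // Builds a data URL such as "data:image/jpeg;base64,...", which can stand in for a
    // public web address in the "url" field of an "image_url" content part
    static String toDataUrl(byte[] imageBytes, String mimeType) {
        return "data:" + mimeType + ";base64," + Base64.getEncoder().encodeToString(imageBytes);
    }

    // Convenience overload that reads a local file; the caller supplies the MIME type
    static String toDataUrl(Path imageFile, String mimeType) throws IOException {
        return toDataUrl(Files.readAllBytes(imageFile), mimeType);
    }

    public static void main(String[] args) throws IOException {
        if (args.length == 0) {
            System.out.println("usage: java ImageEncoding <image.jpg>");
            return;
        }
        String dataUrl = toDataUrl(Path.of(args[0]), "image/jpeg");
        // Print only the start; encoded images are long
        System.out.println(dataUrl.substring(0, Math.min(60, dataUrl.length())) + "...");
    }
}
```

Base64 inflates the payload by about a third, so a public URL is the lighter option when it’s available.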

I made a very simple test using Java text blocks:
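A minimal sketch of that approach, assuming Java 15+ text blocks and `String.formatted`, might look like the following. It is not the test from the book: the class and method names are hypothetical, and the template skips JSON escaping, so it assumes the prompt and URL contain no quotes or backslashes.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

public class VisionRequest {
    static final URI CHAT_COMPLETIONS = URI.create("https://api.openai.com/v1/chat/completions");

    // Fills a text block template with a prompt and an image URL (public or data URL).
    // No JSON escaping: assumes neither value contains quotes or backslashes.
    static String requestBody(String prompt, String imageUrl) {
        return """
            {
              "model": "gpt-4o",
              "messages": [
                {
                  "role": "user",
                  "content": [
                    { "type": "text", "text": "%s" },
                    { "type": "image_url", "image_url": { "url": "%s" } }
                  ]
                }
              ],
              "max_tokens": 300
            }
            """.formatted(prompt, imageUrl);
    }

    // POSTs the body to the same endpoint used for plain text chat
    static HttpRequest buildRequest(String apiKey, String body) {
        return HttpRequest.newBuilder(CHAT_COMPLETIONS)
                .header("Content-Type", "application/json")
                .header("Authorization", "Bearer " + apiKey)
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    public static void main(String[] args) throws Exception {
        String body = requestBody("What's in this image?",
                "https://example.com/cats-playing-poker.jpg"); // hypothetical image URL
        String apiKey = System.getenv("OPENAI_API_KEY");
        if (apiKey == null) { // no key: just show the request body
            System.out.println(body);
            return;
        }
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(buildRequest(apiKey, body), HttpResponse.BodyHandlers.ofString());
        System.out.println(response.body());
    }
}
```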

That allowed me to plug my formatted image into the request, transmit it, and get back a result. The image I sent was (same as last week’s newsletter):

Here’s (part of) the description I got back:

```
"message": {
  "role": "assistant",
  "content": "The image depicts a group of cats gathered around a table playing cards. There are five cats sitting on the floor, each with a paw touching the cards on the table. The cards are spread out in front of them, suggesting that they are in the middle of a game. Additionally, there are mugs of milk placed in front of each cat, reinforcing the playful and whimsical nature of the scene. The setting seems to mimic a human poker game, but with cats and milk instead."
}
```

Not bad. The really interesting part for me, however, is using a combination of Java records and sealed interfaces that let me post either chat or vision requests to the same endpoint.
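As a rough illustration of that idea (a hypothetical sketch, not the code from the book), a sealed interface can say that a message’s content is either a plain string or a list of parts, and Java 21 record patterns in an exhaustive `switch` can turn either shape into the JSON the endpoint expects. All type and method names here are invented, and the hand-rolled JSON is only for illustration; a JSON library would normally do that job.

```java
import java.util.List;
import java.util.stream.Collectors;

public class ChatOrVision {
    // One record per content-part "type" in the vision request JSON
    sealed interface Part permits TextPart, ImageUrlPart {}
    record TextPart(String text) implements Part {}
    record ImageUrlPart(String url) implements Part {}

    // A message's "content" is either a plain string (chat) or a list of parts (vision)
    sealed interface Content permits SimpleContent, MultiPartContent {}
    record SimpleContent(String text) implements Content {}
    record MultiPartContent(List<Part> parts) implements Content {}

    record Message(String role, Content content) {}

    // Exhaustive switch: the compiler knows every permitted subtype, so no default is needed
    static String partToJson(Part part) {
        return switch (part) {
            case TextPart(var text) -> "{\"type\":\"text\",\"text\":" + quote(text) + "}";
            case ImageUrlPart(var url) ->
                    "{\"type\":\"image_url\",\"image_url\":{\"url\":" + quote(url) + "}}";
        };
    }

    static String messageToJson(Message message) {
        String content = switch (message.content()) {
            case SimpleContent(var text) -> quote(text);
            case MultiPartContent(var parts) -> parts.stream()
                    .map(ChatOrVision::partToJson)
                    .collect(Collectors.joining(",", "[", "]"));
        };
        return "{\"role\":" + quote(message.role()) + ",\"content\":" + content + "}";
    }

    // Minimal escaping of backslashes and quotes, for illustration only
    static String quote(String s) {
        return "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"") + "\"";
    }

    public static void main(String[] args) {
        var chat = new Message("user", new SimpleContent("Tell me a joke"));
        var vision = new Message("user", new MultiPartContent(List.of(
                new TextPart("What's in this image?"),
                new ImageUrlPart("https://example.com/cats.jpg")))); // hypothetical URL
        System.out.println(messageToJson(chat));
        System.out.println(messageToJson(vision));
    }
}
```

Adding a new part type later means adding it to the `permits` clause, and the compiler then flags every `switch` that doesn’t handle it yet.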

It took some doing, but this is what I eventually came up with:

That’s going to take more explanation than I want to include here. It’s begging to be included in a video, which I plan to record soon.

At least, I hope to record it soon. Last week when I tried to record my newsletter video, the audio came out completely garbled. Here’s an example, which I sent to the support team after registering for premium support (ugh, but necessary if I ever want to get an answer):

Yeah, that’s not going to work. The same mic and audio setup works just fine with Descript or Tella.tv, so I could just use those, but I would like to get this resolved. I’ll let you know what happens.

Two other quick notes from OpenAI:

Apparently, custom GPTs are now available to everybody, not just ChatGPT Plus subscribers. That means if you are really bored, you can try out my Mockito Mentor, or, if you’re a Pragmatic author and would like to see my simple bot based on the writer’s guide for Pragmatic Bookshelf books, my Pragmatic Assistant. Have fun! Or don’t. I’m not the boss of you.

Also, GPT-4o is going to be available to everybody for free. You don’t need a paid subscription to use it. I expect that to have a big impact, because a lot of people are still stuck using GPT-3.5, and the newer models are way, way better than that. We’ll see how the community reacts.

Gemini 1.5 Flash

On Tuesday, Google made their announcements at Google I/O. Here’s a five-minute summary, which was about the shortest I could find:

All the presenters are much too happy and much too excited to announce their new features, but again, that’s SO Google. They did demonstrate a very fast model that integrated text, audio, and images so well that it was able to have an actual conversation with a user. That, however, is not available yet, so I’ll believe it when I see it.

What is available (among other things) is Gemini 1.5 Flash, a smaller, quicker version of Gemini 1.5 Pro that still has a 1-million-token context window. Fortunately, it was available in the API, so I was able to try it out. I used the same prompt from last week’s newsletter (“Here is my book Help Your Boss Help You. Give me the top five points made in the book”) and again it did a decent job.

```
GeminiResponse[candidates=[Candidate[content=Content[parts=[TextPart[text=Here are the top five major points made in the book:

1. **Conflict is inevitable in the employee/manager relationship because their goals and incentives differ.** You want to progress in your career, learn new things, and be recognized for your work. Your manager wants to ensure that the team meets company goals, stays on budget, and avoids unnecessary risk. Conflict is simply a result of these differing priorities.

2. **Building constructive loyalty with your manager is key to your success.** This means demonstrating trust and support, even when you disagree with your manager's decisions. It's about creating a relationship where you can both achieve your goals.

3. **You can train your manager to take your needs into account by using a Tit-for-Tat strategy.** This means starting by cooperating and then pushing back in a timely and constructive manner when your manager makes a decision that harms you. Do this consistently, and your manager will learn to anticipate your reactions and avoid repeating the same mistakes.

4. **Communicate effectively with your manager by understanding their communication style.** The book suggests using the Keirsey Temperament Sorter to assess your manager's personality type, which helps you to tailor your arguments and present your ideas in a way that resonates with them.

5. **Your boss is not your friend.** Maintaining a professional distance with your boss is essential for protecting yourself from being negatively impacted by their decisions. This is not about being unfriendly, but rather about avoiding oversharing and ensuring that personal opinions don't cloud their professional judgement.

]], role=model], finishReason=STOP, index=0, safetyRatings=[SafetyRating[category=HARM_CATEGORY_SEXUALLY_EXPLICIT, probability=NEGLIGIBLE], SafetyRating[category=HARM_CATEGORY_HATE_SPEECH, probability=NEGLIGIBLE], SafetyRating[category=HARM_CATEGORY_HARASSMENT, probability=NEGLIGIBLE], SafetyRating[category=HARM_CATEGORY_DANGEROUS_CONTENT, probability=NEGLIGIBLE]]]], promptFeedback=null]

Input Tokens : 79487
Output Tokens: 321
```

Again, a good job, and you can see that after extracting the text from the PDF, my entire 160-page book required only about 80,000 input tokens. I plan to work with Gemini 1.5 Flash more in the future.
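For anyone who wants to try the same experiment, here is a minimal Java sketch of a request to Gemini 1.5 Flash over plain HTTP. It is not the code that produced the output above. It assumes the v1beta REST endpoint `models/gemini-1.5-flash:generateContent`, the API key passed as a `key` query parameter, an environment variable named `GOOGLE_API_KEY` (a name chosen for this example), and a plain-text file of the book (for example, text already extracted from the PDF) passed on the command line.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.file.Files;
import java.nio.file.Path;

public class GeminiFlashRequest {
    // Assumed endpoint for the v1beta generateContent method on gemini-1.5-flash
    static final String ENDPOINT =
            "https://generativelanguage.googleapis.com/v1beta/models/gemini-1.5-flash:generateContent";

    // Minimal JSON escaping (backslash first, then quotes and common whitespace);
    // other control characters are not handled, so a JSON library is safer for real use
    static String requestBody(String prompt) {
        String escaped = prompt.replace("\\", "\\\\").replace("\"", "\\\"")
                .replace("\n", "\\n").replace("\r", "\\r").replace("\t", "\\t");
        return "{\"contents\": [{\"parts\": [{\"text\": \"" + escaped + "\"}]}]}";
    }

    static HttpRequest buildRequest(String apiKey, String prompt) {
        return HttpRequest.newBuilder()
                .uri(URI.create(ENDPOINT + "?key=" + apiKey))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(requestBody(prompt)))
                .build();
    }

    public static void main(String[] args) throws Exception {
        String apiKey = System.getenv("GOOGLE_API_KEY");
        if (apiKey == null || args.length == 0) {
            System.err.println("usage: GOOGLE_API_KEY=... java GeminiFlashRequest <book.txt>");
            return;
        }
        String book = Files.readString(Path.of(args[0]));
        String prompt = "Here is my book. Give me the top five points made in the book.\n\n" + book;
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(buildRequest(apiKey, prompt), HttpResponse.BodyHandlers.ofString());
        System.out.println(response.body()); // raw JSON response
    }
}
```

With a 1 million token context window, the whole book fits in a single prompt, so there’s no need to split it into chunks first.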

Finally, despite a fair amount of trying, I have to admit that I have not been able to come up with a decent Grandmaster Flash joke related to Gemini 1.5 Flash. If something occurs to you, please let me know and I’ll include it next week.

NFJS Madison

Nobody really wants to hear about travel difficulties, so I’ll just say that despite United’s best efforts (some of which were caused by ORD, which is called ORDeal for a reason), I was able to get to Madison, WI for the No Fluff, Just Stuff conference there this week and even make it home again, more or less on schedule.

My original schedule had me giving five talks on Friday:

Modern Java 21+: The Next-Level Upgrade

Calling AI Tools from Java

LangChain4J: An AI Framework for Java Developers

Practical AI Tools for Java Developers (parts 1 and 2)

Those were all going well, but at lunchtime Jay asked if I could cover two more talks the following morning. It turns out that the inimitable Venkat Subramaniam ran into travel issues of his own (I can’t believe that doesn’t happen more often), which resulted in him getting stuck in Frankfurt, Germany. He wasn’t going to make it to Madison at all.

We had to scramble to change my flights, but the next morning I did a two-part series on Upgrading to Modern Java, which went quite well. That was fun, and, best of all, now Venkat owes me a favor. Don’t think I won’t collect, too, because I probably won’t. But I’ll make him pay somehow. I just haven’t thought of anything clever yet.

Tweets and Toots

I’m giving a talk this week

Thursday at 11am EDT I’m going to spend a pleasant hour talking about Ollama and uncensored AI models:

The registration link is here. The talk is free. Here’s the description:

General AI models, like ChatGPT, Claude AI, or Gemini, have a broader scope, and their answers to questions can be correspondingly imaginative. But many vendors don't want to be held responsible for awkward answers, so they add "guard rails" to limit the responses. Those limitations often restrict the models so much as to make them unable to answer reasonable questions.

In this talk, we'll discuss the Ollama system, which allows you to download and run open-source models on your local hardware. That means you can try out so-called "uncensored" models, with limited guard rails. What’s more, because everything is running locally, no private or proprietary information is shared over the Internet. Ollama also exposes the models through a tiny web server, so you can access the service programmatically.

We'll look at how to do all of that, and how to use the newest Java features, like sealed interfaces, records, and pattern matching, to access AI models on your own hardware.

If you happen to make it, be sure to say hi.
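The description mentions that Ollama exposes its models through a tiny web server. As a minimal sketch (not the code from the talk), a Java program can call that server’s `/api/generate` endpoint with `HttpClient`, using a record for the request body. It assumes Ollama is running locally on its default port, 11434, and that a model named `llama3` has already been pulled; substitute whatever model you have. The record and helper names are hypothetical.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

public class OllamaClient {
    // Ollama's local server listens on port 11434 by default
    static final URI GENERATE = URI.create("http://localhost:11434/api/generate");

    // Hypothetical request record; "stream": false asks for one JSON response
    // instead of a stream of partial ones
    record GenerateRequest(String model, String prompt, boolean stream) {
        String toJson() {
            return "{\"model\":%s,\"prompt\":%s,\"stream\":%b}"
                    .formatted(quote(model), quote(prompt), stream);
        }
    }

    // Minimal escaping for illustration; a JSON library would be more robust
    static String quote(String s) {
        return "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n") + "\"";
    }

    public static void main(String[] args) throws Exception {
        var body = new GenerateRequest("llama3", "Why is the sky blue?", false).toJson();
        HttpRequest request = HttpRequest.newBuilder(GENERATE)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        // The reply is JSON; the generated text is in its "response" field
        System.out.println(response.body());
    }
}
```

Since everything goes to `localhost`, nothing in the prompt leaves your machine.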

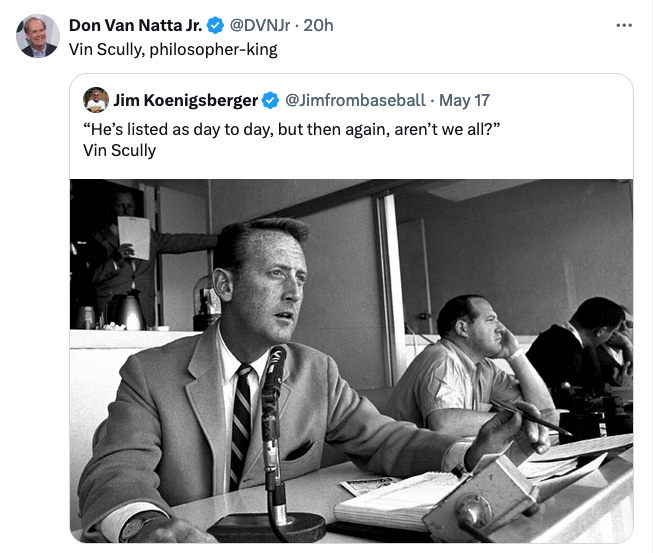

Aren’t we all?

Vin was the best.

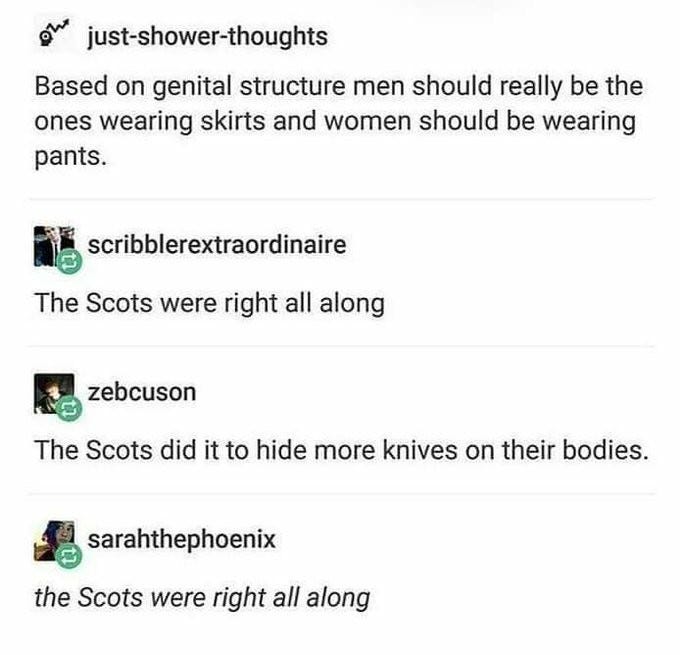

The Scots were right

I think combining knives with men’s skirts could lead to problems, not to mention getting a bit cold, but who am I to argue?

Orwellian

That looks AI generated to me, but if so, it’s a good use of AI.

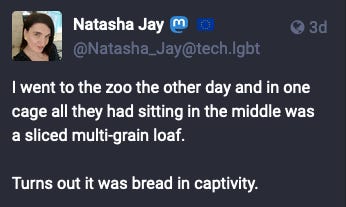

Born and Bread

I thought my jokes were bad. And they are, but so is this one.

One way to solve the problem

Yeah, that’s one way to solve the problem.

Cue Ray Parker, Jr

I know it doesn’t scan, but I can’t help autocorrecting that in my head to “I ain’t afraid of no robots.”

Once an English teacher …

No notes, indeed.

Abstract

I’m sure there are some weird clocks on the wall, too.

Finally, we have this:

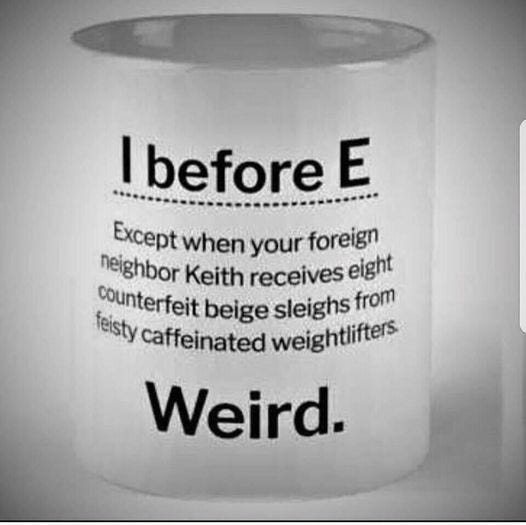

I before E, except

All I can say is, neigh, neigh!

Have a great week, everybody!

The video version of this newsletter will be on the Tales from the jar side YouTube channel tomorrow.

Last week:

NFJS event in Madison, WI.

This week:

Functional Java, on the O’Reilly Learning Platform

Running Uncensored and Open Source LLMs on Your Local Machine, a JetBrains live stream on Thursday