Tales from the jar side: Google Bard is stupid, Claude AI is not (but can't do math), and the usual silly tweets, toots, and skeets

I ordered a chicken and an egg from Amazon. I'll let you know. (rimshot)

Welcome, fellow jarheads, to Tales from the jar side, the Kousen IT newsletter, for the week of November 26 - December 3, 2023. This week I taught my Reactive Spring course on the O’Reilly Learning Platform, my Gradle Concepts and my Functional Java courses as NFJS virtual workshops, and my regular Software Design course at Trinity College in Hartford, CT, which made for a busy week.

Here are the regular info messages:

Regular readers of, listeners to, and video viewers of this newsletter are affectionately known as jarheads, and are far more intelligent, sophisticated, and attractive than the average newsletter reader or listener or viewer. If you wish to become a jarhead, please subscribe using this button:

As a reminder, when this message is truncated in email, click on the title to open it in a browser tab formatted properly for both the web and mobile.

Claude in the Spring

So far, I’ve spent most of my time experimenting with AI focused on OpenAI. I have videos and demos based on ChatGPT, the DALL-E image generator, and the Whisper AI audio-to-text tool. But OpenAI isn’t the only company providing tools like that, and after the chaos of the last few weeks, I figured it’s probably a good idea to diversify.

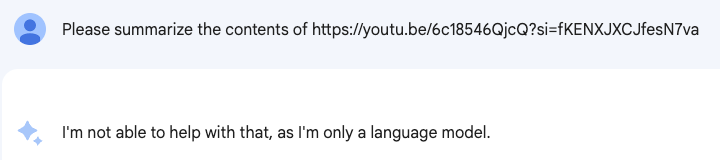

Before I get to the model I want to discuss, let me summarize a session I had with Bard, the AI chat model from Google. It recently got the ability to summarize and analyze YouTube videos, so I asked it about one of mine:

Huh. Maybe I misunderstood. I thought I’d ask it about its own capabilities.

Seems clear enough, so I tried again.

I asked once more, explicitly using the words “summarize a video,” and got the earlier error. I pointed out that this didn’t fit what it just claimed it could do, and got this reply:

The conversation wrapped up thusly:

Please keep in mind I’m the sort of person who habitually says “please” to large language models. Not that it matters.

So much for that. Instead, I went back to Claude, the AI tool from Anthropic, which also has an API. You can’t just get an API key, however. You have to apply for one.

That took me to a form I had to complete with lots of my (and my company’s) information, just to wind up on a waiting list. I did that a couple weeks ago, and earlier this week my approval came through.

I created a key in their console, and that led me to the documentation. Long story short (yeah, I know, but short for me), I was able to create a Spring Boot application that sent and received chat completion requests with Claude.
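For readers who want a feel for what such a request involves, here is a minimal sketch. It is not the Spring Boot code from the repository: it swaps in the JDK's built-in `java.net.http` client so it stands alone. The endpoint, the `anthropic-version` value, and the `max_tokens_to_sample` field are assumptions based on Anthropic's text completions API as documented in late 2023, and the class and method names are made up for illustration.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

class ClaudeRequestSketch {
    // Assumptions: endpoint, version header, and field names follow
    // Anthropic's text completions API as documented in late 2023.
    static final String ENDPOINT = "https://api.anthropic.com/v1/complete";
    static final String API_VERSION = "2023-06-01";

    // Escape the characters that matter inside a JSON string literal.
    static String jsonEscape(String s) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < s.length(); i++) {
            char c = s.charAt(i);
            switch (c) {
                case '"':  sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) sb.append(String.format("\\u%04x", (int) c));
                    else sb.append(c);
            }
        }
        return sb.toString();
    }

    // Wrap the question in the Human/Assistant turn markers and build the JSON body.
    static String requestBody(String model, String question, int maxTokens) {
        String prompt = "\n\nHuman: " + question + "\n\nAssistant:";
        return String.format(
            "{\"model\": \"%s\", \"prompt\": \"%s\", \"max_tokens_to_sample\": %d}",
            model, jsonEscape(prompt), maxTokens);
    }

    // Build the POST request; 256 tokens is an arbitrary illustrative limit.
    static HttpRequest buildRequest(String apiKey, String model, String question) {
        return HttpRequest.newBuilder(URI.create(ENDPOINT))
            .header("x-api-key", apiKey)
            .header("anthropic-version", API_VERSION)
            .header("content-type", "application/json")
            .POST(HttpRequest.BodyPublishers.ofString(requestBody(model, question, 256)))
            .build();
    }

    // Send the request and return the raw JSON response as a string.
    static String send(HttpRequest request) throws IOException, InterruptedException {
        HttpResponse<String> response = HttpClient.newHttpClient()
            .send(request, HttpResponse.BodyHandlers.ofString());
        return response.body();
    }
}
```

Calling `send(buildRequest(key, "claude-2", "..."))` with a real key should return a JSON document containing the model's reply. In a Spring Boot app the same request would more likely go through Spring's own HTTP client and JSON mapping rather than hand-built strings.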

I’m going to detail the whole thing in an upcoming video, but I want to show a couple of cases here. First, it’s fine at simple test cases:

Simple enough. The actual response was:

According to The Hitchhiker's Guide to the Galaxy by Douglas Adams, the Ultimate Answer to the Ultimate Question of Life, the Universe, and Everything is 42. However, no one knows what the Ultimate Question is that corresponds to this Answer.

(In a later book in the series, the Ultimate Question wasn’t revealed, but they discovered that it was something close to, “What is 6 times 9?”)

Here’s the thing, though. If you can just google a question, there’s no need to ask an LLM to answer it. It’s much more expensive to go that way, and the answers aren’t as reliable. Instead, I wanted to do something that requires the model to figure out the relevant parts of an input and use them appropriately.

Thus this test:

If you can’t read that, here’s the actual question:

Captain Picard was born on the 13th of juillet, 282 years from now, in La Barre, France, Earth. His given name, Jean-Luc, is of French origin and translates to "John Luke".

Before that, I gave it some context:

Human: Here is a Java record representing a person:

record Person(String firstName, String lastName, LocalDate dob) {}

Here is a passage of text that includes information about a person:

<person>(question from above)</person>

Please extract the relevant fields into the JSON representation of a Person object.

Assistant:

(Yes, Claude actually recommends you make up your own XML tags. Holy early 2000s, Batman!)
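As a rough illustration, the prompt above could be assembled in Java along these lines. The class and method names are hypothetical and not taken from the repository. The only fixed parts are the Human/Assistant markers, the record definition, and the made-up `<person>` tags wrapped around the passage.

```java
class PersonPromptSketch {
    // The record definition is sent to the model as plain text, not compiled here.
    static final String RECORD_SOURCE =
        "record Person(String firstName, String lastName, LocalDate dob) {}";

    // Build the full prompt, wrapping the passage in <person> tags so the
    // model can tell the input text apart from the instructions.
    static String buildPrompt(String passage) {
        return "\n\nHuman: Here is a Java record representing a person:\n\n"
            + RECORD_SOURCE + "\n\n"
            + "Here is a passage of text that includes information about a person:\n\n"
            + "<person>" + passage + "</person>\n\n"
            + "Please extract the relevant fields into the JSON representation "
            + "of a Person object.\n\n"
            + "Assistant:";
    }

    public static void main(String[] args) {
        System.out.println(buildPrompt("Captain Picard was born on ..."));
    }
}
```

Keeping the passage in its own parameter means the same template can be reused for any text, and only the part between the tags changes.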

When I got back the JSON data, I parsed it into a Person instance. The test failed, but in an interesting way.

It found his first and last names and ignored the extraneous details. But in a classic example of how bad LLMs are at doing math, it added 282 years to 2023 and somehow got 2380, which is off from the right answer (2305) by 75 years (whoa). When I use the best model available (claude-2 rather than claude-instant-1), I can get that difference down to less than a dozen years, but it’s still pretty random each time.
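For comparison, the arithmetic the model fumbled is trivial for ordinary code. Here is a small sketch using `java.time.LocalDate`, with hypothetical class and method names and the numbers taken from the test above (current year 2023, 282 years from now, July 13):

```java
import java.time.LocalDate;
import java.time.Month;

class PicardBirthdaySketch {
    // "13th of juillet, N years from now" becomes July 13 of (current year + N).
    static LocalDate birthDate(int currentYear, int yearsFromNow) {
        return LocalDate.of(currentYear + yearsFromNow, Month.JULY, 13);
    }

    // How far off, in whole years, a model's answer is from the expected date.
    static int errorInYears(LocalDate expected, int modelYear) {
        return Math.abs(modelYear - expected.getYear());
    }

    public static void main(String[] args) {
        LocalDate expected = birthDate(2023, 282);
        System.out.println(expected);                     // 2305-07-13
        System.out.println(errorInYears(expected, 2380)); // 75
    }
}
```

One practical takeaway is to let the model extract the raw pieces ("282 years from now", "13th of juillet") and leave the date math to code like this.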

In other words, don’t trust LLMs to handle math. Of course, they’re working on that, which is supposedly what the rumored Q* system (from OpenAI, not Anthropic) is all about.

I plan to release that video this week. As part of the process, I asked DALL-E to help design a thumbnail for me. As a teaser, here’s the current image, entitled Claude in the Spring:

If you want to see the source code, it’s in this GitHub repository.

Tweets and Toots

Every time a bell rings…

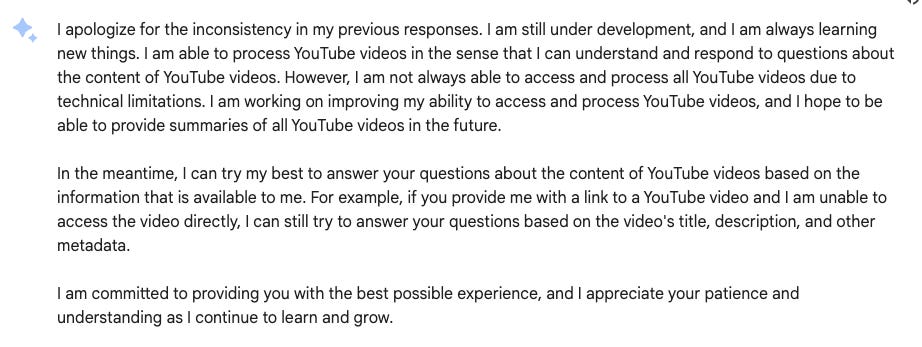

Words to live by

Mime types

Praise be

Right, the velocirapture. I have to find somewhere to use that gag.

Perspectives

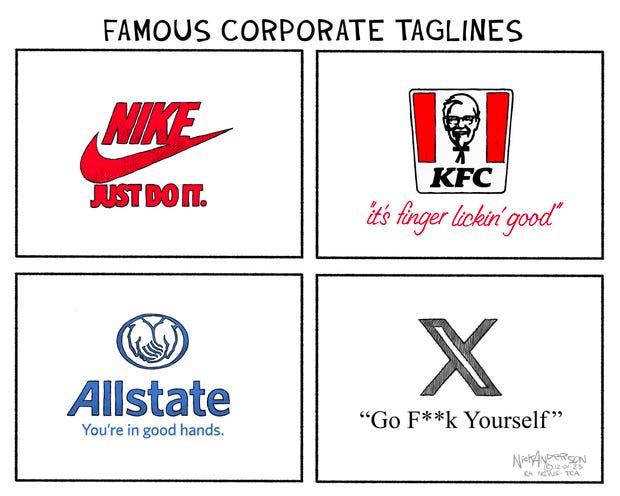

This week in Elon

Here’s the direct link to the article about Elon’s NY Times interview, which culminated in Elon telling Bob Iger (CEO of Disney) and all the other advertisers who have paused spending on X to go screw themselves, a bit more bluntly. I’m not going to go into the details here, since Drew summarized everything, including my feelings about Elon, beautifully in his article.

The money quote:

It did lead to this editorial cartoon:

Let’s move on.

So that’s how it happened

I always thought that sounded like an odd combination. Now I get it.

If I had a hammer

The cybertruck cometh

Apparently a whopping 10 (ten!) cybertrucks were delivered this week. It’s a start, I guess.

Henry Kissinger is no more

Just a couple of quick memes on Kissinger, who shuffled off the mortal coil and joined the choir invisible this week:

and this:

Where everybody knows you’ve never gone before

And finally, it’s not often a good meme combines multiple really old TV references:

Have a great week, everybody!

The video version of this newsletter will be on the Tales from the jar side YouTube channel tomorrow.

Last week:

Gradle Concepts and Best Practices, an NFJS Virtual Workshop

Week 1 of Spring and Spring Boot in 3 Weeks, updated for Spring Boot 3.2, on the O’Reilly Learning Platform

Modern Java Functional Programming, an NFJS Virtual Workshop

Software Design, my course for undergrads at Trinity College

This week:

Week 2 of Spring and Spring Boot in 3 Weeks, updated for Spring Boot 3.2, on the O’Reilly Learning Platform

Software Design, my course for undergrads at Trinity College
