Tales from the jar side: Counting tokens, Vision models, Magnus is amazing, Stack Overflow sells out its users, and the usual tweets and toots

My wife asked me to clear the kitchen table. I needed to get a running start, but I made it. (rimshot)

Welcome, fellow jarheads, to Tales from the jar side, the Kousen IT newsletter, for the week of May 5 - 12, 2024.

Regular readers of, listeners to, and video viewers of this newsletter are affectionately known as jarheads, and are far more intelligent, sophisticated, and attractive than the average newsletter reader or listener or viewer. If you wish to become a jarhead, please subscribe using this button:

As a reminder, when this message is truncated in email, click on the title to open it in a browser tab formatted properly for both the web and mobile.

Before we get to the main content, I want to give a shout out to jarhead John Sheehy, a jarhead I met at the NFJS event last week in Boston. Hi John!

Counting Tokens

A topic I’ve revisited a couple of times in this newsletter is how the context window in the various AI models keeps growing rapidly. The context window is how much memory the model has. If you want a model to remember the answers to previous questions (in other words, to hold a conversation), you have to append to your current question the previous questions and answers. The size of the context window is usually measured in terms of tokens, where 1000 tokens represent about 750 words.

In the early days of LLMs (say, a whole year ago), the context window was 4K or 8K in size, so that was about 3 to 6 thousand words of text. Then Claude 2 jumped to 100K tokens, and GPT-4 leapt to 128K. Then Claude 3 bumped that up to 200K tokens.
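As a rough illustration of that arithmetic, here is a minimal sketch that converts context window sizes into approximate word counts. The 750-words-per-1000-tokens ratio is the rule of thumb quoted above, the window sizes are the round numbers from the text, and the function name is just something made up for this example.

```python
# Rule of thumb from the text: 1000 tokens is about 750 words.
WORDS_PER_1000_TOKENS = 750


def tokens_to_words(tokens: int) -> int:
    """Approximate number of English words that fit in a given token budget."""
    return tokens * WORDS_PER_1000_TOKENS // 1000


# Round-number context window sizes mentioned in the text.
windows = {
    "early LLMs (4K)": 4_000,
    "early LLMs (8K)": 8_000,
    "Claude 2": 100_000,
    "GPT-4": 128_000,
    "Claude 3": 200_000,
}

for name, tokens in windows.items():
    print(f"{name}: {tokens:,} tokens is roughly {tokens_to_words(tokens):,} words")
```

The ratio is only an estimate. Real counts depend on each model's tokenizer and on the text itself.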

Along came Google, however, and its Gemini 1.5 Pro model has a whopping 1.5 million tokens in the context window.

All AI developers talk about RAG, or Retrieval Augmented Generation, as a way to put only the most relevant parts of your documents in the context window, which was necessary when the context window was small. With 1.5 million tokens available, however, you can add a lot of information.

Recently I talked about taking my book Help Your Boss Help You and uploading the entire book into the context window and asking the AI to give me a summary. Gemini did a decent job of that. The number of tokens required was only about 80,000, so it would fit in several models.

This week I went to the Banned Books page at Project Gutenberg, where they make available lots of public domain books. I downloaded several in text form and sent them to Gemini’s “count tokens” endpoint. Here are the resulting numbers of tokens, keeping in mind that Project Gutenberg appends their own license to them at the end:

The Communist Manifesto (Marx and Engels): 21,231

The Clouds (Aristophanes): 31,719

The Adventures of Sherlock Holmes (Conan Doyle): 156,979

On the Origin of Species (Darwin): 223,747

Les Misérables (Hugo): 873,435

The Decline and Fall of the Roman Empire (Gibbon): 2,706,385
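To put those counts in perspective, here is a small sketch that checks which of the context windows mentioned earlier could hold each book. The token counts are the ones listed above and the window sizes are the figures quoted in this newsletter. The comparison ignores the few extra tokens a prompt adds and any room needed for the response, so treat it as a back-of-the-envelope check only.

```python
# Token counts reported by Gemini's "count tokens" endpoint (from the list above).
BOOK_TOKENS = {
    "The Communist Manifesto": 21_231,
    "The Clouds": 31_719,
    "The Adventures of Sherlock Holmes": 156_979,
    "On the Origin of Species": 223_747,
    "Les Misérables": 873_435,
    "The Decline and Fall of the Roman Empire": 2_706_385,
}

# Context window sizes as quoted in this newsletter.
CONTEXT_WINDOWS = {
    "Claude 2": 100_000,
    "GPT-4": 128_000,
    "Claude 3": 200_000,
    "Gemini 1.5 Pro": 1_500_000,
}


def models_that_fit(tokens: int) -> list[str]:
    """Names of the models whose context window is at least `tokens` long."""
    return [name for name, limit in CONTEXT_WINDOWS.items() if tokens <= limit]


for title, tokens in BOOK_TOKENS.items():
    fits = models_that_fit(tokens) or ["none of them"]
    print(f"{title} ({tokens:,} tokens): {', '.join(fits)}")
```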

For all but the last one, I was able to stuff the entire book into this prompt:

Summarize the plot of the book:

%s

Explain why it might wind up on a

Banned Books list.

The AI did a pretty good job. The one I was curious about was why Sherlock Holmes would be on the banned list, and Gemini had no idea:

“This book is simply a collection of short stories featuring the detective Sherlock Holmes and would be highly unlikely to end up on a Banned Books list. The stories contain no material that would normally be considered controversial or objectionable. They are generally considered appropriate for most ages.

Here's a brief summary of the plots for each of the stories in "The Adventures of Sherlock Holmes" (etc)”

There followed brief summaries of each of the individual stories, which were well done.

RAG still has its place, of course, but summarization works better when the AI has the entire text to work with. It looks like Gemini 1.5 Pro can handle all but the longest books.
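For anyone who wants to try the same experiment, here is a minimal sketch of filling in the prompt template from this section. The %s placeholder works with Python's printf-style string formatting, so the sketch uses that. The function name and the sample text are hypothetical, and the call that actually sends the prompt to a model is left out because it depends on which client library you use.

```python
# The prompt template from this section, with %s standing in for the book text.
PROMPT_TEMPLATE = (
    "Summarize the plot of the book:\n"
    "%s\n"
    "Explain why it might wind up on a\n"
    "Banned Books list."
)


def build_prompt(book_text: str) -> str:
    """Insert the full text of a book into the prompt template."""
    # Wrapping the argument in a tuple keeps the % operator unambiguous.
    return PROMPT_TEMPLATE % (book_text,)


# Hypothetical stand-in for a real Project Gutenberg download.
sample = "It was the best of times, it was the worst of times..."
print(build_prompt(sample))
```

In practice you would read the downloaded text file into a string, pass it to build_prompt, and count the tokens in the result before sending it, since the whole prompt has to fit in the context window.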

Vision Models

Here’s a generated image of cats playing cards:

There are a few open source vision models available, which means you upload an image to them and they tell you what it contains. One of them is called llava, whose description reads:

Another is called (warning: bad pun alert) bakllava:

Two new variations on llava appeared this week:

llava-llama3, a LLaVa model fine-tuned from Llama 3 Instruct with better scores in several benchmarks, and

llava-phi3, a new small LLaVa model fine-tuned from Phi 3 Mini.

I thought I’d try out all four on the above image. Here’s what I got:

llava: “The image shows three cats sitting around a table. They appear to be engaged in playing cards, with the cats holding cards and the human elements being coffee cups and a cup of milk. This creates a whimsical and anthropomorphic scene, as if the cats are humans enjoying a casual card game. The setting is indoors, likely a home, with wooden flooring visible.”

llava-llama3: “The image captures a moment of tranquility, featuring four cats in varying shades of gray and white, each holding a cup of coffee. They are arranged on a wooden floor, their bodies relaxed as they gaze directly at the camera.

In front of them, there's an array of playing cards and mugs of coffee. The cards are spread out in a fan-like arrangement, creating an interesting pattern on the wood. The mugs, filled to the brim with what appears to be hot coffee, are placed near the cats' paws.

The scene is one of companionship and warmth, as if the cats are sharing a quiet moment over their coffee and cards. It's a charming image that brings together the whimsy of cats with a human-like activity like playing cards and coffee-drinking.”

llava-phi3: “The image features a playful scene of four cats and a few items spread out on a wooden floor. Here's a detailed description:

Four cats are present in the image, each with its own unique color pattern. From left to right, they are:

A gray and white cat sitting upright, looking alert.

A calico cat lying down comfortably on its side.

An orange tabby cat lying down on its stomach.

A brown and black cat laying on its back, paws in the air as if it's playing or relaxed.

There are also two mugs visible in the image, one white with a blue handle and another brown with a gold handle. They seem to be part of their playthings or perhaps used for their water bowls.

A pile of playing cards is spread out on the floor as well, including at least two red aces among other cards. This could suggest that they are in the middle of a game or just found them interesting.

Lastly, there's a spoon lying next to one of the cats. It might have been used earlier for feeding or playtime.

The image captures a moment of feline relaxation and playfulness, with each cat engaged in its own activity or resting peacefully. The wooden floor provides a warm and homely backdrop to this charming scene.”

bakllava: “4 cats”

So none of the models can count and their responses vary wildly. I uploaded the same image to ChatGPT-4 and got:

“The image depicts five cats seated around a wooden table, engaged in what appears to be a card game. Each cat has a distinct look, possibly representing different breeds. There are several playing cards spread out and neatly arranged in front of the cats. Each cat has a small mug, possibly filled with milk, placed near them. The setting conveys a humorous and whimsical take on a typical card game among friends, reimagined with cats as the players.”

That’s much closer, and at least it counted the number of cats correctly. I’m not sure what to make of all this, other than vision models still have a ways to go.

Magnus the Magnificent

Some of you may be aware that Magnus Carlsen is the former World Chess Champion. He got tired of the two-year cycle of preparing and playing championship matches, especially when he much prefers the faster time controls of rapid (30 minute games) or blitz (5 minutes or less) games. After several winning defenses, he declined to participate in 2023, “suffering from a lack of motivation to play classical chess, because of the dominance of opening preparation,” leaving the title to Ding Liren.

Magnus is still Magnus, however. An entire tour of rapid and blitz tournaments sprang up partly due to his interest, and he plays in most of them.

The tour season began this week in Warsaw, Poland, with the 2024 Superbet Rapid & Blitz Poland. Ten players competed in a single rapid round-robin, followed by a double round-robin of blitz. Here was the headline heading into today’s last round:

Wei Yi Powers To 2.5-Point Lead Over Carlsen

As the article says, “‘I just sucked, honestly,’ said GM Magnus Carlsen about his play after losing his first game to GM Praggnanandhaa. He blundered a queen and lost the next game as well, but his 6/9 blitz score was more than respectable. The problem? GM Wei Yi caught fire after losing the first game to score 7.5/8 and go into the final nine rounds of blitz with a huge advantage.”

Here’s the headline on today’s article, rushed out right after the tournament ended:

Carlsen Wins Superbet Rapid & Blitz Poland With 10-Game Winning Streak

You read that correctly. Magnus started 2.5 points behind Yi, but “posted an incredible 10-game winning streak” to win the tournament.

Yi also had an excellent day, and scored six full points ahead of the third-place finisher, but when Magnus does that, there’s nothing you can do. Even Magnus acknowledged that Yi’s score would have won almost any other tournament.

This reminds me of an old story about Isaac Newton, which is recounted in the Later Life of Isaac Newton Wikipedia page. In 1697, Newton was 55 years old and working as Warden of the Mint. After a long day, he returned home to find a letter from Johann Bernoulli, which proposed a couple of mathematical problems “for the finest mathematicians of Europe” to solve.

Newton stayed up half the night, solved both problems, and sent his solution anonymously to a contact at the Royal Society for publication. When Bernoulli saw it, he immediately knew what had happened, famously saying, “one recognizes the lion by his claw.”

This is all a reminder that although Ding Liren and Gukesh Dommaraju will play the official World Championship match later this year, everybody knows who the lion is, and he’s still got his claws.

Stack Overflow Sells Out Its Users

Stack Overflow (SO) is a website for getting answers to (primarily) technical questions. It game-ified the process, meaning that you earn points both for asking questions (if they become popular) and answering questions. You can earn badges, gain privileges, and more. You can even offer a financial reward for answers to some questions, though that’s pretty rare.

The site quickly became the default location for getting answers to your questions for most developers. My reputation there never made it much past 3000, which is pretty low, but the easy questions get answered very quickly and I always felt the hard ones weren’t worth the time and effort it would take to write a decent response. Many developers felt differently, and some made their entire professional reputations that way. For example, Mark Murphy, known as CommonsWare on SO, has a “reputation” of just under 1 million, almost entirely from answering Android questions.

The rise of AI looked set to destroy SO, and the owners appear determined to wipe out what’s left. As you might imagine, once ChatGPT appeared, developers tried to earn points on SO by asking it questions and posting the answers as their own. Everybody hated that, so when it was detected those users were banned, but it’s not so easy to distinguish between AI and human-generated answers. It’s also much easier to ask ChatGPT a question and even have a conversation with it than to ask on SO and hope somebody answers. Usage and participation numbers on SO dropped precipitously as a result.

This week, however, the company now in charge of SO decided they had found a ready source of income: they sold the entire collection of questions and answers to OpenAI to use in training the next generation of AI models.

Users have been posting questions and answers there for years. Does that give SO the right to re-sell all that data to a third party without permission?

Of course, the answer is almost certainly yes, or at least it’s legal, but they can’t have expected the contributors to be happy about it. Several users tried to delete their previous posts and answers, only to find themselves banned. See Stack Overflow users sabotage their posts after OpenAI deal for details. The quote in the article is:

Stack Overflow announced that they are partnering with OpenAI, so I tried to delete my highest-rated answers.

Stack Overflow does not let you delete questions that have accepted answers and many upvotes because it would remove knowledge from the community.

So instead I changed my highest-rated answers to a protest message.

Within an hour mods had changed the questions back and suspended my account for 7 days.

I don’t think I saw that exact post, but I saw many like it. I believe we’re watching the death rattle of one of the foremost developer sites on the web.

There’s an old saying that if a site offers a product to you for free, then you are the real product. You can use the site, but they’re going to sell your time, posts, and information to the highest bidder. The problem now is that AI has given these sites a new market of customers, and the users are reduced to providing training data for them. A lot of people aren’t happy about it, but there’s not much they can do.

Casey Newton has a great article discussing how AI changed the bargain between platforms and users. I suppose the whole situation justifies my laziness and/or procrastination around earning points on SO.

Tweets and Toots

Apple, the iPad, and Misreading the Room

This week, Apple posted a new ad that resulted in so much backlash it wound up apologizing and canceling an entire campaign. The so-called Crush ad for the new iPad Pro showed a giant hydraulic press squishing objects ranging from paint cans to a piano until a single iPad remained. What they missed was that many people saw that as a metaphor for how Big Tech is cashing in on creative works, destroying the creators in the process.

As this (gift) article from the New York Times put it:

Apple doesn’t make mistakes often and seldom apologizes, but on Thursday, its head of advertising said the company had erred in making a new iPad commercial that showed an industrial compressor flattening tools for art, music and creativity.

Here is the tweet that started it all:

Yeah, I can see how that might be a problem.

Woke Sky

The aurora borealis reached deep into the south this week. Here’s a photo from my town of Marlborough, CT (courtesy of Joanna Voskanov on Facebook):

Pretty, but I liked this meme:

Well, maybe that wasn’t the reason. I also liked this take:

An eclipse, an earthquake (in the Northeast), and the aurora all in the same year? Has to mean something, right? No, but if it motivates you to get out and vote in November, it’s worth it.

Fourth Law?

I don’t think AI tools can guarantee that, but I wouldn’t be surprised if that’s a goal.

This week in Elon

In case you missed it, Neuralink, the company owned by Elon that wants to implant chips in people’s brains, ran into a problem this week. The company implanted a chip a few weeks ago in a quadriplegic, enabling him to move a mouse with his mind. According to this article, the test subject this week developed a problem as a few of the chip’s connective threads detached from his brain (whatever that means, but it can’t be anything good), though they found a workaround.

Ever the marketeer, Musk claimed the company’s first product will be called Telepathy. Yeah, good luck with that. I did like the post, however:

Because he thought he could make a quick buck, that’s why.

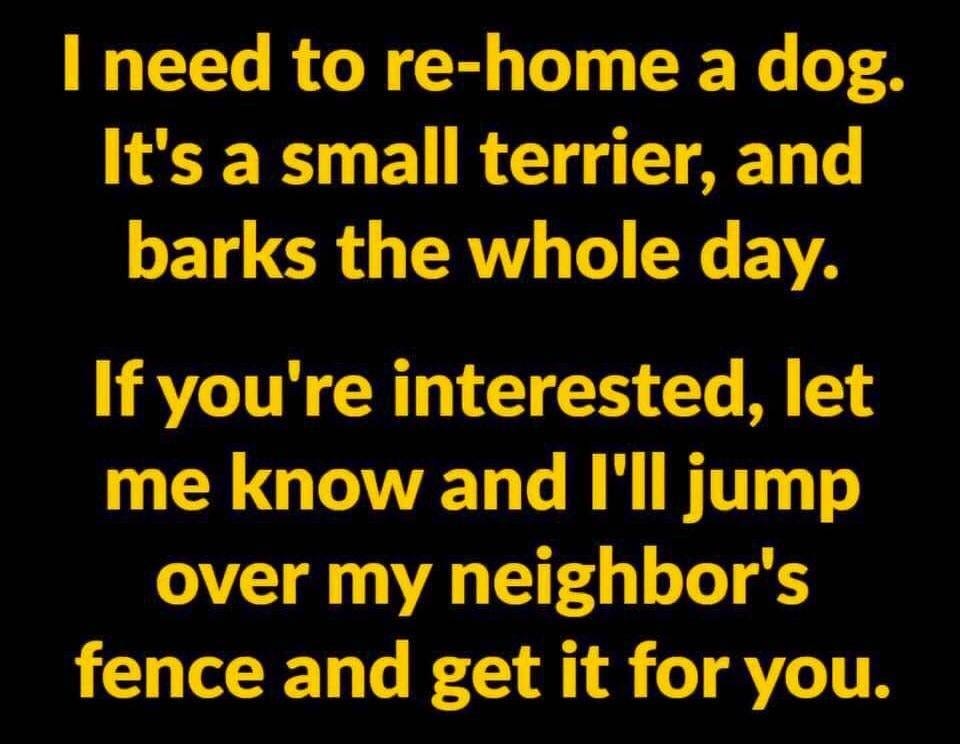

To a good home

We live near a dog like that.

Smooth

Here’s the direct link. The dance is so pure.

Not happening any time soon

And finally, speaking of awesome marketing:

Just kidding

Have a great week, everybody!

The video version of this newsletter will be on the Tales from the jar side YouTube channel tomorrow.

Last week:

Final exam in my Trinity course, which actually means student team presentations. They were excellent, fortunately.

This week:

NFJS event in Madison, WI.