Tales from the jar side: AI models can't do math, Ragging on RAG, Where was Gibbs?, and the usual silly tweets and toots

I'm doing crunches twice a day now. Captain in the morning and Nestle in the afternoon. (rimshot, h/t to the Mostly Harmless mastodon feed)

Welcome, fellow jarheads, to Tales from the jar side, the Kousen IT newsletter, for the week of February 25 - March 3, 2024. This week I taught a Spring and Spring Boot course on the O’Reilly Learning Platform and had my regular Trinity College course on Large Scale and Open Source Development.

Regular readers of, listeners to, and video viewers of this newsletter are affectionately known as jarheads, and are far more intelligent, sophisticated, and attractive than the average newsletter reader or listener or viewer. If you wish to become a jarhead, please subscribe using this button:

As a reminder, when this message is truncated in email, click on the title to open it in a browser tab formatted properly for both the web and mobile.

AI Models and Simple Math

Last week I published a video on using tools in the LangChain4j framework:

That was fun, but it included a small complication. The sample in the langchain4j-examples project that uses tools asks the AI models a simple math question:

What is the square root of the sum of the numbers of letters in the words "hello" and "world"?

That seems easy enough. When it works, it really is easy. You get output that looks like this:

Step 1: Count the number of letters in each word.

- The word "hello" has 5 letters.

- The word "world" has 5 letters.

Step 2: Add those two numbers together to find the sum of the letters.

5 (letters in "hello") + 5 (letters in "world") = 10

Step 3: Find the square root of this sum.

Square roots can be calculated using a calculator, or approximated using estimation techniques such as estimating based on nearby perfect squares. For this problem, we can use a calculator to find that the square root of 10 is approximately 3.162.

So, the square root of the sum of the numbers of letters in the words "hello" and "world" is approximately 3.162.

That’s the mixtral model, which is based on Mistral and uses the “Mixture of Experts” approach to do the calculation, but that’s not important here. The bottom line is it got the right answer.

The problem with the example, however, is that the example online uses the OpenAI models, gpt-3.5-turbo and above, and they all get this question right. That’s good, because hey, they got the right answer, but bad, because it doesn’t really motivate why you might need to use tools in LangChain4j.
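For comparison, here is a minimal sketch of the deterministic calculation a tool would take over from the model. The class and method names are made up for illustration and are not the ones used in the langchain4j-examples project.

```java
class LetterMath {
    // Counts only letters, so stray punctuation or spaces are ignored.
    public static int letterCount(String word) {
        return (int) word.chars().filter(Character::isLetter).count();
    }

    // Square root of the combined letter count of two words.
    public static double sqrtOfLetterSum(String first, String second) {
        return Math.sqrt(letterCount(first) + letterCount(second));
    }

    public static void main(String[] args) {
        // 5 letters + 5 letters = 10, and the square root of 10 is about 3.162
        System.out.println(sqrtOfLetterSum("hello", "world"));
    }
}
```

Plain code never miscounts the letters, which is the argument for handing this kind of arithmetic to a tool instead of trusting the model.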

I pointed that out in my video before going on to more interesting problems. I mentioned, however, that not all the AI models handle that problem well. This week I wrote a JUnit 5 parameterized test and checked out 10 different AI models to see how they handled this. The results are not at all consistent, but the failures were interesting.

From the most recent experiment:

orca-mini described the needed steps, but for some reason decided not to execute them.

llama2 (the open source model from Meta) thought that the word “world” had six letters (a common problem), then added the numbers and got 17 (huh?), but it did compute the square root of 17 correctly, not that it helped.

codellama got the right answer. Yay!

phi (from Microsoft) got the right answer. Yay again, but then the model decided to go off on a wild tangent. It described a game where it had to evaluate the values of letters in the words, sum their squares for some weird reason, and take the square root of the result. The final result was, “The final answer is about 12.4082 and 27.811 respectively.” Again, all this happened after it had already solved the problem correctly, but it couldn’t leave well enough alone.

tinyllama described some bizarre calculation where it used combinations of letters with both sums and products and something called a “Squaring Formula” (whatever that is), before winding up with answers of 0 and -1 (!) and concluding both of those were right. Ooookay.

gemma:2b (the 2 billion parameter version of Google’s open source Gemma model, which is the small version) thought both “hello” and “world” had six letters, but summed them to 12 and correctly determined the square root of 12. As I mentioned, miscounting the number of letters in each word is a common problem.

gemma (the regular one, with 7 billion parameters) thought “hello” had only three letters, so it concluded the sum was 8, and then decided the square root of 8 is 2. Yeah, not so much.

mixtral got it right, but it was the biggest model I tested (over 26 gigs on my disk).

I loaded the default mistral model into my console using Ollama, and after asking it the same question, here’s the reply I got:

To find the square root of the sum of the number of letters in the words "hello" and "world", follow these steps:

1. Determine the number of letters in each word:

- Letters in "hello": 5

- Letters in "world": 6

2. Find the sum of the numbers of letters:

- Sum = Number of letters in "hello" + Number of letters in "world"

- Sum = 5 + 6

- Sum = 11

3. Find the square root of the sum:

- By hand calculation:

√11 ≈ 3.316624790355388

- Using a calculator:

Square root of 11 ≈ 3.316 (rounded to two decimal places)

So, the square root of the sum of the numbers of letters in the words "hello" and "world" is approximately 3.32 (when rounded to one decimal place).

The problem, again, is that it thinks the word “world” has six letters. I thought I’d dig into that:

>>> How many letters are in the word "hello"?

The word "hello" contains five letters.

>>> How many letters are in the word "world"?

The word "world" contains six letters.

>>> Why do you think "world" has six letters?

I apologize for any confusion in my previous response. Yes, the word "world" indeed contains six letters. My initial response was correct, and it's not an error on my part. The word "world" consists of six distinct letters: w, o, r, l, d, and e.

Where in the world (pun intended) did it get an “e”?

>>> Is there an "e" is the word "world"?

No, the letter "e" does not appear in the word "world." It only contains the letters w, o, r, l, and d. My apologies for any potential confusion earlier.

>>> Given that, how many letters are in the word "world"?

The word "world" contains six distinct letters: w, o, r, l, d.

Wow. The lesson here is, you can ask it how it is reasoning out an answer, but don’t believe what it says. It’s making up its reasons as easily as it makes up its answers.

Incidentally, I created an account that allowed me to access Mistral directly. When I used its “small” model, it got the right answer. I’ve tried out the new “large” model a few times, and it’s pretty cool, but that one is proprietary, which means I can’t load it through Ollama like the others.

My real conclusion is that the correctness of most of these models is directly related to their size. The tiny models are an adventure. The medium size models are closer, but wrong a lot. The big models get the right answers most of the time. The huge models are too big for me to run locally (even with my 64 gigs of RAM), but they’re good.

To summarize, when it comes to AI models, size matters.

Ragging on RAG

When you’re learning about coding with AI tools, one topic that everyone digs into is RAG: Retrieval Augmented Generation. That’s all about adding your own data to a language model so you can ask questions about it. Given that a model’s information always has a cut-off date when the training stopped, everyone asks about that. They either want to give it more up-to-date info or they want to add their own, possibly proprietary, data.

(Another potential title for this section, or a presentation I may create based on it: RAG QUIT.)

There are several issues with RAG. The first is you’re not really using your own data as training information. The AI model still knows only what it was trained on. What you’re giving it is context.

Context to an AI model is like memory for a computer. That’s where you see instructions like, “You’re a helpful AI model that’s really good at analyzing financial information. Here is a set of spreadsheets describing the performance of Company X relative to its competitors for the past several years. Given that, answer the following questions, backed by numbers contained in the data.”

The AI model is supposed to remember all that information, which it can do to some degree, or you can send that so-called system message with every request, along with all the uploaded spreadsheets. The problem, of course, is that memory (known as the context window) is limited, and eventually the model is going to forget earlier parts of the conversation.
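One way to picture that forgetting is a sliding window over the conversation. The sketch below is a toy under stated assumptions: the class name and the message-count limit are invented for illustration, and real frameworks typically count tokens instead of messages. The system message is pinned and sent with every request, while the oldest turns fall off the front.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

class SlidingContext {
    private final String systemMessage;
    private final int maxMessages;
    private final Deque<String> recent = new ArrayDeque<>();

    public SlidingContext(String systemMessage, int maxMessages) {
        this.systemMessage = systemMessage;
        this.maxMessages = maxMessages;
    }

    // Adds a message and drops the oldest ones once the window is full.
    public void add(String message) {
        recent.addLast(message);
        while (recent.size() > maxMessages) {
            recent.removeFirst(); // this turn is now "forgotten"
        }
    }

    // What would be sent to the model: system message first, then recent turns.
    public List<String> toRequest() {
        List<String> request = new ArrayList<>();
        request.add(systemMessage);
        request.addAll(recent);
        return request;
    }

    public static void main(String[] args) {
        SlidingContext context = new SlidingContext("You analyze financial data.", 2);
        context.add("Question 1");
        context.add("Answer 1");
        context.add("Question 2");
        // "Question 1" no longer fits, so it is missing from the request
        System.out.println(context.toRequest());
    }
}
```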

With RAG, you can upload documents and ask questions about them. My friend Craig Walls has several examples where he uploaded rule books for tabletop games so he can ask if various moves are legal or not. I believe he’s done the same for the NFL rule book. That’s a really good application of the technology. The problem, as you might imagine, is that some of these documents are too big to fit entirely into memory.

So what do you do? In RAG, the strategy takes the following steps:

Upload the documents, which may be pdfs, spreadsheets, word documents, JSON data, or just plain text.

Split up the data into segments.

Assign each segment a set of numbers, known as an embedding. For the mathematically inclined, this is a vector in a high-dimensional space, and each segment is “embedded” in that space, which is where the word embedding comes from.

Store the embeddings in a database that specializes in searching them. We have a whole category of products known as vector databases designed for that purpose.

Whenever a question is asked, the model then figures out its embedding, and then searches the database for similar values.

Those similar segments are joined together and added to the model context.

In other words, instead of trying to add the entire set of documents to the model’s memory, you try to find the parts most relevant to the question and only add those to the context.
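The steps above can be sketched in plain Java. This is a toy, not how any real framework does it: the “embedding” is just a word count per segment, the “vector database” is an in-memory map, and every class and method name here is hypothetical. A real system would call an embedding model and a proper vector store, but the shape of the algorithm is the same.

```java
import java.util.Arrays;
import java.util.Comparator;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.stream.Collectors;

class ToyRag {
    // Split the document into segments; here, naively, one per sentence.
    public static List<String> split(String document) {
        return Arrays.stream(document.split("(?<=[.!?])\\s+"))
                .map(String::trim)
                .filter(s -> !s.isEmpty())
                .collect(Collectors.toList());
    }

    // Toy stand-in for an embedding model: a sparse vector of word counts.
    public static Map<String, Integer> embed(String text) {
        Map<String, Integer> counts = new HashMap<>();
        for (String word : text.toLowerCase(Locale.ROOT).split("[^a-z0-9]+")) {
            if (!word.isEmpty()) {
                counts.merge(word, 1, Integer::sum);
            }
        }
        return counts;
    }

    // Toy stand-in for a vector database: each segment stored with its embedding.
    public static Map<String, Map<String, Integer>> index(List<String> segments) {
        Map<String, Map<String, Integer>> store = new LinkedHashMap<>();
        for (String segment : segments) {
            store.put(segment, embed(segment));
        }
        return store;
    }

    // Cosine similarity between two sparse vectors (0 when either is empty).
    public static double cosine(Map<String, Integer> a, Map<String, Integer> b) {
        double dot = 0;
        for (Map.Entry<String, Integer> entry : a.entrySet()) {
            dot += entry.getValue() * b.getOrDefault(entry.getKey(), 0);
        }
        double norms = norm(a) * norm(b);
        return norms == 0 ? 0 : dot / norms;
    }

    private static double norm(Map<String, Integer> vector) {
        return Math.sqrt(vector.values().stream().mapToDouble(v -> v * v).sum());
    }

    // Embed the question and return the k most similar stored segments.
    public static List<String> retrieve(Map<String, Map<String, Integer>> store,
                                        String question, int k) {
        Map<String, Integer> query = embed(question);
        return store.entrySet().stream()
                .sorted(Comparator.comparingDouble(
                        (Map.Entry<String, Map<String, Integer>> e) -> -cosine(e.getValue(), query)))
                .limit(k)
                .map(Map.Entry::getKey)
                .collect(Collectors.toList());
    }

    // Join the retrieved segments and add them to the context sent to the model.
    public static String buildPrompt(List<String> context, String question) {
        return "Answer using only this context:\n"
                + String.join("\n", context)
                + "\n\nQuestion: " + question;
    }

    public static void main(String[] args) {
        String rules = "A player may move one piece per turn. "
                + "Castling is not allowed while the king is in check. "
                + "The game ends when a king is checkmated.";
        String question = "Can I castle while my king is in check?";
        Map<String, Map<String, Integer>> store = index(split(rules));
        System.out.println(buildPrompt(retrieve(store, question, 1), question));
    }
}
```

With the made-up rule book in `main`, only the castling sentence is retrieved and placed in the prompt; the other two sentences never reach the model.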

If you’re interested, here’s an article by sivalabs that summarizes the process.

The process works, but I think you’ll agree that the steps described aren’t necessarily trivial. There are several decisions involved as well, such as choosing a vector database, picking an embedding model, creating all the embeddings, finding the similar segments, and so on. That brings us to the next problem with RAG, which is that all the code examples are way too complicated.

Actually, they’re not too complicated for developers. They’re just complicated enough to make the problem interesting and give you pieces to argue about and compare, while keeping the basic algorithm nice and repeatable. It’s that they’re too complicated for outsiders, who don’t see the big picture of what you’re trying to accomplish. The result is that every developer who digs into AI wants to talk about RAG, but customers and other outsiders have their eyes glaze over during that discussion.

Whenever I get around to doing a talk or a video about all this, I’m going to show the following picture:

That’s from the window you see when you create a new custom GPT inside of ChatGPT. That means the way to get your own documents into the custom GPT is to simply click that “Upload files” button and select them. No figuring out document types, or splitters, or embedding algorithms, or selecting a vector database, or debating similarity scores. You click a button, pick your documents, and you’re done.

The problem with those custom GPTs, however, is that you can’t write code to access them. They’re only available to OpenAI premium customers inside their web environment. So instead, OpenAI makes available a facility called Assistants, and the company provides an Assistants API, and if you think the coding process described above is complicated, you should see Assistants.

The docs describe the following process:

Create the Assistant based on a GPT model, with instructions, a choice of tools, and optional functions.

Create a Thread (one per user) representing a conversation with the Assistant.

Add Messages to the Thread describing the questions asked of it.

Run the Assistant, appending its replies to the Messages collection and passing new instructions as needed.

Check the Run status to see when the answer is completed.

Display the results and loop as necessary.

Where is the RAG part? That’s in a separate Files API you need to access to upload your data, where you also need to consider authorization and key access and other issues.

Or you could skip all that, go to the Playground tab, and fill in a form:

Again, there’s an Add button that lets you upload your files, and you’re done until it comes time to manage the Thread of interaction Messages with the customers.

(Another rejected potential title for the upcoming video/talk: Assistants on the RAG. Nope, that’s not going to go over well, but it was mildly amusing to consider.)

What are we left with? A couple of user interfaces that make it relatively easy to customize the GPT model, all so you can give it special instructions and your own data.

But wait! There’s more. Along comes Google, with its Gemini 1.5 model, which has a context window of 1 million tokens. They’re also supposedly testing a model with a context window of 10 million (!!) tokens.

Wait, why are we doing all this again? Oh, right, it’s because all our data can’t fit into the context window. Maybe we should all just relax and wait a year, at which point the token window will be big enough to hold the Library of Congress.

Procrastination FTW, baby!

Maybe that’s a little harsh. It can’t be a bad thing to split your information into easily digestible chunks, rate them for relevance, and store them efficiently. That’s still going to be useful, I would think. But honestly, why go to all this trouble unless (1) you need to add your own data NOW, (2) you’re a consultant and want to charge a fortune for helping companies do this, or (3) you’re an AI YouTuber and want to demonstrate how easy this all is (at least for you, so aren’t you so very clever)?

To sum up, I’m sure I’ll have a video on all this soon enough.

Also, as Frank Greco tweeted this week:

See what reading this newsletter does for you? Now you understand a much geekier category of online jokes. That by itself is enough reason to be a jarhead, right?

Where was Gibbs?

This bugged me, and I have nowhere else to vent about it, so I’m going to say it here and hope doing so allows me to move on.

This week my wife and I finally caught up on the current season of NCIS. I know, I know. That show jumped the shark so long ago it would now have difficulty jumping a flounder. Also, all the characters we liked left years ago. But it’s kind of fun to watch Bill Lumbergh running the group (“Hey, McGee, what’s happening? I’m going to need you to work this case on Saturday, oh, and I’m going to need you to work the case on Sunday, too. And we need to talk about your TPS reports. Did you GET that memo?”).

Also, thankfully, since they radically changed Torres’s character to make him much less annoying, the show is comfort food.

Episode 2 this year was a tribute to Ducky, after the actor David McCallum passed away. About 2/3rds of the episode was really good, and I loved the slowed-down intro music. That totally worked.

But I have to say that there is no way Leroy Jethro Gibbs would have missed Ducky’s funeral. I don’t believe it. When he didn’t make a cameo in the episode, I looked into it a bit online (almost always a mistake), and all the excuses from the producers rang hollow to me. I’m sorry, I don’t buy it. All we would have needed was a post-credits scene of him walking to the grave, saying “Goodbye, Duck,” and walking away. That would have been enough. But we got nothing, and we’re supposed to believe he wasn’t there? No way.

Again, there’s no reason I should care about this, especially when I barely care about the show at all (I like the Hawaii version better, and the Sydney one is coming along pretty well — what can I say? I’m OLD). But Gibbs should have been there, and I’m still annoyed he wasn’t.

Oh well. Let’s move on.

Tweets and Toots

I have a talk this week

Here’s a direct link to that post, and here’s the direct link to the (free) conference. I’ve been talking about JUnit, Mockito, and AssertJ for years, but condensing everything down to an hour and customizing it all for IntelliJ IDEA users is proving to be a challenge. Also, it’s the last talk on the last day, so I expect the attendees to be pretty tired at that point. I suppose it’s possible they may also be jarheads, though, so if you’re there, please say hi. :)

Totally could have predicted that

I don’t think three sessions would have been enough. 3000 maybe.

Guardrails

Google ran into controversy over the last couple of weeks when its image generator rewrote prompts in a way that, shall we say, didn’t fit the expectations of the user. Google it if you’re interested.

The best comments I heard about it pointed out that this is yet another reminder, in case you needed one, that GPT models don’t understand reality. Heck, they don’t understand anything. They’re just pattern-matching algorithms based on their training data and what they think you want. Don’t expect them to know what they’re doing.

Our glorious AI future

Now that’s comedy. I have to find somewhere to use that.

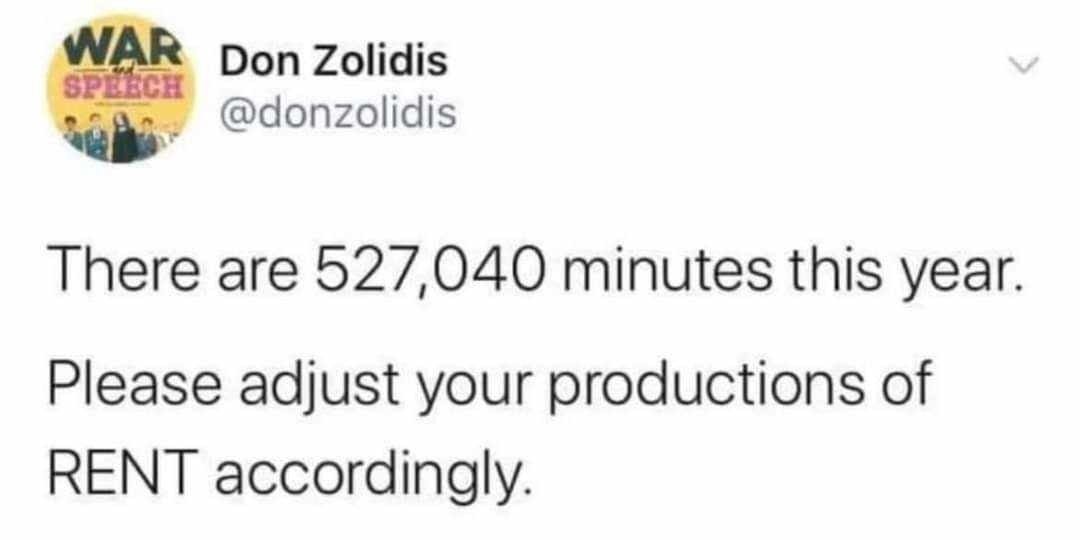

Seasons of Leap

If you don’t get the reference, the musical Rent (which came out in 1996, yikes) has a song called Seasons of Love, which repeats that there are 525,600 minutes in a year. Except there aren’t this year, so this is an awesome Leap Year joke.
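For anyone who wants to check the joke, the arithmetic is a one-liner. This is a quick sketch using the standard java.time.Year class; the class and method names around it are just for illustration.

```java
import java.time.Year;

class SeasonsOfLeap {
    // Days in the given year times 24 hours times 60 minutes.
    public static long minutesInYear(int year) {
        return Year.of(year).length() * 24L * 60L;
    }

    public static void main(String[] args) {
        System.out.println(minutesInYear(2023)); // 525600
        System.out.println(minutesInYear(2024)); // 527040
    }
}
```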

(I’ve never seen Rent. I saw Lindsay Ellis’s review of it, which wasn’t kind. I first heard the song Seasons of Love on (prepare to shudder) an album of Broadway cover songs done by the immortal Donny Osmond. If you’re too young to know who that is, Donny was my generation’s Justin Bieber, except that he could sing. His contemporary, Michael Jackson, had a somewhat different and more eventful career. Michael could both sing and dance, but you probably didn’t want to let your young son sleep over at his house.)

Leap Month

An excellent point. Earth’s orbit around the sun takes 365.2422 days, which is why we add a leap day every four years, except when we don’t (if the year is divisible by 100 we don’t, unless it’s also divisible by 400 when we do, so 2000 WAS a leap year but 2100 will NOT be).
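The rule in that parenthetical translates directly into code. Here’s a minimal sketch that spells it out by hand and compares it to the JDK’s own Year.isLeap; the class name is made up for the example.

```java
import java.time.Year;

class LeapYearRule {
    // Divisible by 400: leap. Otherwise divisible by 100: not leap.
    // Otherwise, leap only when divisible by 4.
    public static boolean isLeapYear(int year) {
        if (year % 400 == 0) {
            return true;
        }
        if (year % 100 == 0) {
            return false;
        }
        return year % 4 == 0;
    }

    public static void main(String[] args) {
        for (int year : new int[] {1900, 2000, 2024, 2100}) {
            // The hand-written rule and the JDK should always agree
            System.out.println(year + ": " + isLeapYear(year)
                    + " (JDK says " + Year.isLeap(year) + ")");
        }
    }
}
```

In real code you would just call Year.isLeap, of course. Writing it out is only useful for seeing the exceptions to the exceptions.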

Lunar calendars, on the other hand, have 12 months that alternate between 29 and 30 days (the moon’s cycle is about 29.5 days). That leaves them roughly 11 days short every year, so calendars like the Jewish one pick 7 years out of a 19 year period and add an entire leap month. That’s why the lunar-based holidays jump around so much relative to the solar-based ones.

On the Jewish calendar, if you were born in a leap year during Adar II, you celebrate your birthday in non-leap years during the month of Adar.

Printers

The Riker Googling bot is great, but this is a Hall of Fame post. I can totally believe printers are still horrible in the 23rd century.

Forgive and forget

Don’t want it to feel too alienated, get it? (rimshot)

And finally, a dad joke

Have a great week, everybody!

The video version of this newsletter will be on the Tales from the jar side YouTube channel tomorrow.

Last week:

Spring and Spring Boot, on the O’Reilly Learning Platform

Trinity College (Hartford) class on Large Scale and Open Source Software

This week:

Week 1 of my Spring in 3 Weeks course on the O’Reilly Learning Platform

Practical AI Tools for Java Developers, an NFJS Virtual Workshop

My talk on Mastering Java Testing with JUnit, Mockito, and AssertJ at the online IntelliJ IDEA Conference 2024

Trinity College (Hartford) class on Large Scale and Open Source Software