Tales from the jar side: Flying across the world, Llama3, Stable Diffusion 3, Translating audio, and the usual tweets and toots

For Valentine's Day I got my wife an abacus. It's the little things that count. (rimshot, and no I didn't. That would be a rookie mistake, and I don't make rookie mistakes.)

Welcome, fellow jarheads, to Tales from the jar side, the Kousen IT newsletter, for the week of April 14 - 21, 2024. This week I taught my Upgrade to Modern Java course as an NFJS Virtual Workshop and gave my regular Large-Scale and Open Source Computing lecture remotely.

Regular readers of, listeners to, and video viewers of this newsletter are affectionately known as jarheads, and are far more intelligent, sophisticated, and attractive than the average newsletter reader or listener or viewer. If you wish to become a jarhead, please subscribe using this button:

As a reminder, when this message is truncated in email, click on the title to open it in a browser tab formatted properly for both the web and mobile.

Traveling to GIDS

This coming week is the Great International Developer Summit in Bangalore, India. I’m currently on board a flight to Doha that left Saturday evening, and after a short layover will eventually arrive in Bengaluru Monday morning at about 4am. I’m currently over Iceland, in case you’re curious, about 4 hours into a 12-hour flight. As frequent readers know, this newsletter normally goes out on Sundays, but I’m going to be in the air all of Sunday. I have somewhat intermittent internet access during that time, and you never know how long that will last. I should probably try to sleep rather than work on the plane, but despite the advances in AI, Tftjs won’t write and send itself. If you’re wondering why this newsletter is coming at an unusual time, that’s why.

I’m giving seven (!) talks at GIDS:

JUnit 5’s Best Features

Custom GPTs for Fun, Profit, and Potential Liability

Managing Your AI-Driven Manager (Wednesday morning keynote)

Modern Java 21+: The Next-Level Upgrade

Calling AI Tools from Java

Spring with AI without Spring AI

Mockito Features and Best Practices

As far as anyone at the conference knows, they’re all up to date and ready and I’m eager to give them all. For the jarheads among you, let me confess that I’m scrambling on a couple of them. But hey, if it was easy, anybody could do it. I’m sure Venkat Subramaniam is laughing at my light schedule.

I just checked, and it turns out Venkat is giving — wait for it — twelve talks (including the opening day keynote), because of course he is. Sigh. I keep reminding myself that I don’t want to work as hard as he does, and I certainly don’t want to travel as much as he does (for example, he gave a talk at the Java Users Group in Chennai last night and he’ll be at a similar group in Delhi — I think (I lose track after a while) — after the conference is over), but geez, dude, take a break every once in a while.

If you’re curious, the GIDS web site is here.

AI Updates

Llama3 joins the herd

This turned out to be a big week for AI releases. Meta finally released an update to their llama2 open source AI engine. The new one is called, naturally enough, llama3. You can try it out on https://meta.ai, but be prepared to log into Facebook when you do. In principle that’s not necessary, but you can’t do anything interesting without it.

I asked it to generate an image of a stochastic parrot for me:

That image, along with a few others, appeared very quickly. I then asked it to put the parrot in a baseball uniform:

I thought initially the P stood for Phillies, but of course it probably stands for Parrot. Maybe that’s Polly the Power Hitter. Big hitter, Polly.

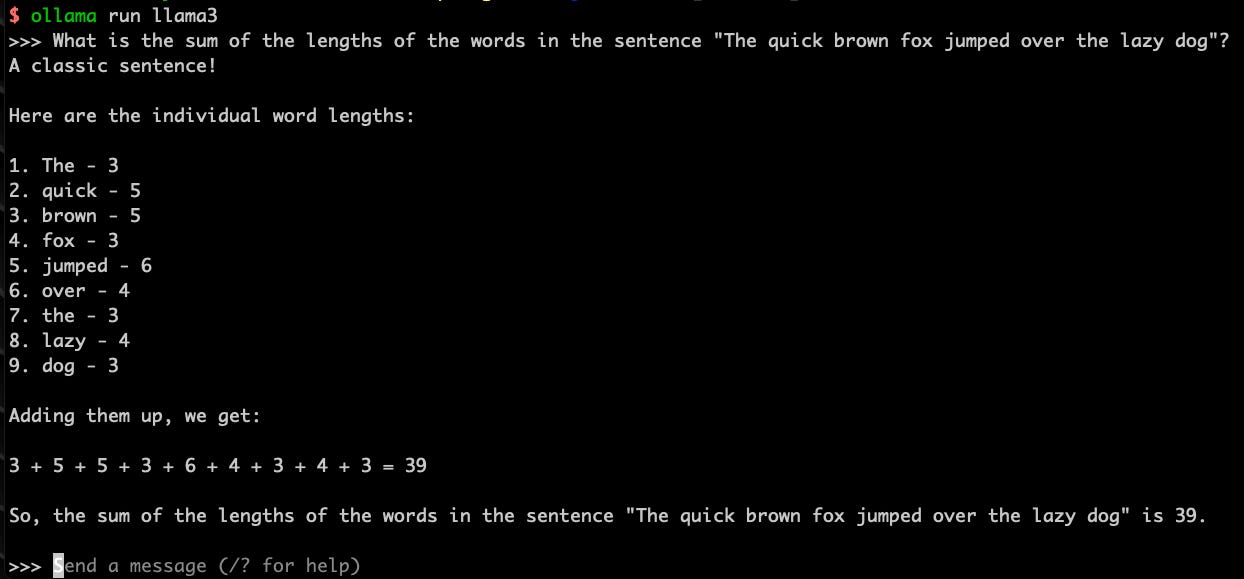

Programmatically, llama3 is now available through the Ollama system, so I immediately downloaded it and accessed it through there. I tried my regular math question to see what it would do:

It got all the word lengths right, which is pretty unusual for the smaller models, but then somehow added the resulting numbers incorrectly (the actual total is 36). I told it that, and it did it over and by golly it actually got it right the second time.
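Getting at llama3 programmatically can be sketched in plain Java. This is a minimal illustration, not the code used for the experiment above: it assumes Ollama is running locally on its default port (11434) with the llama3 model already pulled, and the class and method names are made up for the example.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Hypothetical helper class; the names are for illustration only.
class OllamaClient {
    // Assumption: a local Ollama server on its default port.
    private static final String GENERATE_URL = "http://localhost:11434/api/generate";

    // Escapes the characters most likely to break a hand-built JSON string.
    static String escapeJson(String text) {
        return text.replace("\\", "\\\\")
                .replace("\"", "\\\"")
                .replace("\n", "\\n")
                .replace("\r", "\\r")
                .replace("\t", "\\t");
    }

    // Builds the JSON body; "stream": false asks for one complete reply
    // instead of a stream of partial ones.
    static String buildRequestBody(String model, String prompt) {
        return "{\"model\":\"" + escapeJson(model)
                + "\",\"prompt\":\"" + escapeJson(prompt)
                + "\",\"stream\":false}";
    }

    // Sends the prompt and returns the raw JSON reply; the generated
    // text is in its "response" field.
    static String generate(String model, String prompt)
            throws IOException, InterruptedException {
        HttpRequest request = HttpRequest.newBuilder()
                .uri(URI.create(GENERATE_URL))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(buildRequestBody(model, prompt)))
                .build();
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        return response.body();
    }
}
```

Calling `OllamaClient.generate("llama3", "your question here")` returns the JSON as a string, which you would then hand to whatever JSON parser you prefer.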

As I say, that was the smaller version. According to its page at Ollama it has 8 billion parameters. The bigger one available for download has 70 billion parameters, which is quite a jump. That takes about 40 gigs on your hard drive, though, and that has to (mostly) fit into memory when you run it, so I won’t be using it often. I don’t know what they have powering their own meta.ai site. Supposedly they have a much, much bigger one coming soon (about 400 billion parameters), but all AI companies promise huge leaps so we’ll have to wait and see.

Stable Diffusion 3

Another AI tool I played with this week was the Stable Diffusion image generator. Their new system is called Stable Image Core and I accessed it through its REST API. Here’s an example that I generated from the prompt “cats playing gin rummy”:

That’ll do. I realized while working with the API that they also have a way to upload an image and animate it, which they call image-to-video. Unfortunately, the images produced by the regular text-to-image generator are 1024x1024, but that’s not one of the resolutions accepted by the image-to-video system. As it happens, it’s not hard to resize images in Java, using the javax.imageio.ImageIO class:

I know that same operation is basically a one-liner using a tool like ImageMagick (see below), but I wanted to do it programmatically. It’s Java, so it’s verbose, but you just read in one image, resize it, and write it back out. GPT-4 generated that code for me, and it worked the first time.
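For reference, a resize of that kind can be sketched as follows. This is an illustrative version, not the GPT-4 output mentioned above; the class name is hypothetical, and the 768x768 target in the usage note is an assumption, so check the image-to-video documentation for the sizes it currently accepts.

```java
import javax.imageio.ImageIO;
import java.awt.Graphics2D;
import java.awt.RenderingHints;
import java.awt.image.BufferedImage;
import java.io.File;
import java.io.IOException;

// Hypothetical helper class; the names are for illustration only.
class ImageResizer {

    // Draws the source image scaled into a new image of the requested size.
    static BufferedImage resize(BufferedImage source, int width, int height) {
        BufferedImage resized = new BufferedImage(width, height, BufferedImage.TYPE_INT_RGB);
        Graphics2D graphics = resized.createGraphics();
        try {
            // Bilinear interpolation gives smoother results than the default.
            graphics.setRenderingHint(RenderingHints.KEY_INTERPOLATION,
                    RenderingHints.VALUE_INTERPOLATION_BILINEAR);
            graphics.drawImage(source, 0, 0, width, height, null);
        } finally {
            graphics.dispose();
        }
        return resized;
    }

    // Reads one image file, resizes it, and writes the result as a PNG.
    static void resizeFile(File input, File output, int width, int height) throws IOException {
        BufferedImage source = ImageIO.read(input);
        if (source == null) {
            throw new IOException("Not a readable image: " + input);
        }
        if (!ImageIO.write(resize(source, width, height), "png", output)) {
            throw new IOException("No PNG writer available for: " + output);
        }
    }
}
```

A call such as `ImageResizer.resizeFile(new File("cats.png"), new File("cats_768.png"), 768, 768)` would do the whole read, resize, and write cycle (the file names are placeholders).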

What’s different about the video generation is that it takes time. You send a generation request and get back an id, then you poll a result endpoint with that id every ten seconds to find out if the generation is still in progress or actually finished. Since the responses for completed, in progress, and error are all different, I tried using sealed interfaces and records to implement them all. I spent hours on it, with mixed results. I still don’t have it quite working, but I’ll get there. I was using a Spring app to do the polling, which gave me a chance to use their included schedulers, too, so that was fun.

For the Java people, what makes the problem challenging is that while I’ve used sealed interfaces and records on input data before (like combining both the chat and vision models for Claude), this combines output results that have to be deserialized from JSON. In order for the parser to figure that out, it needs a custom deserializer. I swear, I’ve spent more time on JSON parsing and generation issues in the past six months playing with AI tools than in my entire career before that.
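To make the idea concrete, here is a minimal sketch of the three response shapes as a sealed interface with records, plus a simple polling loop. It is an illustration under assumptions, not the Spring code described above: every type, field, and method name is hypothetical, it needs Java 21 for the pattern-matching switch, and the step that turns an HTTP response into one of these records (the part that needs the custom deserializer) is deliberately left out.

```java
import java.time.Duration;
import java.util.function.Supplier;

// Hypothetical types; names and fields are assumptions for illustration.
class VideoPolling {

    // The three possible outcomes of asking for a generation result.
    sealed interface GenerationResult permits Completed, InProgress, Failed {}
    record Completed(String id, byte[] video) implements GenerationResult {}
    record InProgress(String id) implements GenerationResult {}
    record Failed(String id, String message) implements GenerationResult {}

    // Calls fetch repeatedly, waiting between attempts, until the result
    // is no longer InProgress or the attempts run out.
    static GenerationResult pollUntilDone(Supplier<GenerationResult> fetch,
                                          Duration interval, int maxAttempts) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            GenerationResult result = fetch.get();
            if (!(result instanceof InProgress)) {
                return result;
            }
            try {
                Thread.sleep(interval.toMillis());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return new Failed("unknown", "interrupted while waiting");
            }
        }
        return new Failed("unknown", "gave up after " + maxAttempts + " attempts");
    }

    // The sealed hierarchy lets the compiler check that every case is covered.
    static String describe(GenerationResult result) {
        return switch (result) {
            case Completed c -> "done: " + c.video().length + " bytes";
            case InProgress p -> "still working on " + p.id();
            case Failed f -> "failed: " + f.message();
        };
    }
}
```

In a real client, the `fetch` supplier would wrap the GET to the result endpoint, and the interval would be `Duration.ofSeconds(10)`. The payoff of the sealed interface shows up in `describe`: add a fourth record and the switch stops compiling until you handle it.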

You should be glad, however, that I’m not showing you the first video response I received. I took an image of two cats around a poker table (similar to the one above, but different), resized it, submitted it for video, and downloaded the resulting mp4 file. The resulting video was only a few seconds long, but horrifying nevertheless. You see one of the cats strangely morph into some weird wolf-like thing before vanishing. Yikes. No thank you. Still, it was an interesting exercise and I’ll write about it soon.

Translating Audio

This week I also wrote about the code used to transcribe audio files into text using OpenAI, based on their transcription documentation, when I noticed that the translation process (translating audio from other languages into English) makes use of the exact same system only with a different endpoint. I therefore collected a few audio files in other languages and ran them through the translator, with widely varying results.

I asked my Trinity students, several of whom are non-native English speakers, for brief audio files of two minutes or less to try out. One (Hi Jeff!) gave me a file in Chinese, while another (Hi Myri!) gave me one in Spanish. As you might expect, the system handled Spanish better than Chinese, though apparently the Chinese translations weren’t bad. I also tried files I found in French, Italian, Swedish, and Bulgarian, and the results got progressively worse, in that order. That’s okay, though, because I’m much more interested in the process than the results.
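The "same system, different endpoint" point can be sketched in a few lines of Java. This is a rough illustration rather than the code from the write-up: the class and method names are hypothetical, the `whisper-1` model name is an assumption based on the OpenAI documentation at the time, and the multipart body is built by hand to avoid extra libraries.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical helper class; the names are for illustration only.
class AudioRequests {

    // The two tasks differ only in the last segment of the URL.
    enum AudioTask {
        TRANSCRIBE("transcriptions"),
        TRANSLATE("translations");

        private final String path;

        AudioTask(String path) {
            this.path = path;
        }

        URI uri() {
            return URI.create("https://api.openai.com/v1/audio/" + path);
        }
    }

    // Builds a multipart/form-data body with a "model" field and a "file" field.
    static byte[] multipartBody(String boundary, String model, String fileName, byte[] audio) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        String modelPart = "--" + boundary + "\r\n"
                + "Content-Disposition: form-data; name=\"model\"\r\n\r\n"
                + model + "\r\n";
        String fileHeader = "--" + boundary + "\r\n"
                + "Content-Disposition: form-data; name=\"file\"; filename=\"" + fileName + "\"\r\n"
                + "Content-Type: application/octet-stream\r\n\r\n";
        out.writeBytes(modelPart.getBytes(StandardCharsets.UTF_8));
        out.writeBytes(fileHeader.getBytes(StandardCharsets.UTF_8));
        out.writeBytes(audio);
        out.writeBytes(("\r\n--" + boundary + "--\r\n").getBytes(StandardCharsets.UTF_8));
        return out.toByteArray();
    }

    // Same request either way; only task.uri() changes.
    static HttpRequest request(AudioTask task, String apiKey, Path audioFile) throws IOException {
        String boundary = "----tftjs" + System.nanoTime();
        byte[] body = multipartBody(boundary, "whisper-1",
                audioFile.getFileName().toString(), Files.readAllBytes(audioFile));
        return HttpRequest.newBuilder()
                .uri(task.uri())
                .header("Authorization", "Bearer " + apiKey)
                .header("Content-Type", "multipart/form-data; boundary=" + boundary)
                .POST(HttpRequest.BodyPublishers.ofByteArray(body))
                .build();
    }
}
```

Switching from transcription to translation is then just a matter of passing `AudioTask.TRANSLATE` instead of `AudioTask.TRANSCRIBE` and sending the request with `HttpClient`.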

Custom GPTs

Finally, as preparation for my Custom GPT talk at GIDS, I tried making a GIDS bot that had access to their web pages and answered questions about the conference. What I intend to demo during the talk is the OpenAI “no code” interactive system for creating one. It works well enough, but is limited in what it can do. Plus, the resulting GPT can only be used by developers with a subscription to the OpenAI system.

One of the interesting additions for custom GPTs is that you can give them actions to invoke, which are external web services they can call on the user’s behalf. I wrote a quick Spring Boot app that accesses the Open Weather Map service to download weather reports, customized it to work with Bangalore using my own API key, and even compiled it down to a native image to make it start up really fast. That’s when I hit my stumbling block. First I tried deploying it to Heroku, which was a nightmare of internet issues and operating system incompatibilities. Then I tried to run it locally and access it via ngrok, but that kept giving me a warning page I had no easy way to click through for the GPT. Maybe I’ll be able to resolve that before the talk. It should still make a nice demo, though.

My original plan was to talk about how to make an AI Assistant using the OpenAI API, but the more I look at that the more complicated it gets. I suspect that my actual recommendation in that area will be to use one of the major frameworks, like LangChain4j or Spring AI, both of which allow you to incorporate AI functionality programmatically, which is what most people want to do anyway. We’ll see.

All of this meant I still didn’t get to create the video for the YouTube channel I was planning for this week. Oh well. After I get back from GIDS, I only have a couple of NFJS events in May (in Boston, MA and Madison, WI), and they mostly involve topics I’ve already presented before. My Trinity semester ends May 8 as well. My hope is that by mid-May my schedule will calm down considerably and I can catch up on the Tales from the jar side YouTube channel. In the meantime, I’m still finding a way to create the weekly Tftjs newsletter video every Monday, though this week is going to be a challenge, as noted above.

It seems I’m always waiting for my schedule to calm down a bit. Funny how that keeps happening.

Thoughtworks Radar

While I was at Devnexus last week the Thoughtworks Radar came out. It represents their “twice-yearly snapshot of tools, techniques, platforms, languages and frameworks” according to the experience of their global teams. I usually have mixed feelings about it, but it’s always interesting to see what they think of many of the upcoming tools and technologies.

Among the AI topics, they put Retrieval Augmented Generation (RAG) right up in the Adopt category, which was about the only tool to make it to that level. I’ve talked about that before, and expect I’ll be doing so again, and again, and again. We’ll see how that plays out in the next few months.

They also noted another topic I’ve mentioned in this newsletter, which is how certain open source platforms have been adjusting their licenses, both to prevent commercial behemoths from taking advantage of them and potentially to make money themselves. I don’t know how much of a thing that is, but it’s certainly notable.

What’s worth reading is the article on Macro trends in the tech industry, written up as a blog post by Rebecca Parsons. She did an excellent job of summarizing the developments. As it happens, she’s also doing the day 3 keynote at GIDS, so I’ll get a chance to tell her how much I liked her write up.

Tweets and Toots

Scaling images

Like I said above, the right tools make resizing images trivial. Funny he should post that the same week I did it programmatically in Java, but so be it.

Mooving on

Both work for me.

AI-driven managers

Yup, that’s definitely going in my Managing Your AI-Driven Manager talk at GIDS.

One point I’m planning to make is that the decision to lay off staff doesn’t come from your direct manager, but from someone higher up. Your manager probably gets to choose who to let go, but it’s some executive that decides to cut a percentage of the workforce.

If that happens in your organization, you’re probably doomed, but, then again, so is your boss. When technical staff are let go, the workload on the remaining people goes up. The workload on the manager, however, goes down, because they have fewer people to manage, and that is NOT a good look when the company is looking for people to drop. It probably doesn’t take long for a low-level manager (the kind most tech people report to) to realize that, too, so they should be your natural ally in all this.

Anyway, it’s just a thought. I’ll have to follow that a bit and see where it leads.

Naptime rulez

Welcome to my world.

Old gag

Reminds me of the old joke that if I’m ever on a respirator, pull the plug. Then plug me back in. See if that works.

English is weird

It’s true if you think about it, but don’t think about it too much.

On the plane, I finally got to see 80 for Brady, and it was an excellent counter to the tough The Dynasty documentary on Apple TV+. Definitely fun if you’re a Pats fan, and a great reminder of just how wonderful Sally Field has always been. Sure, Lily Tomlin, Jane Fonda, and Rita Moreno are all excellent, but how can you not love Sally Field?

Have a great week, everybody!

The video version of this newsletter will be on the Tales from the jar side YouTube channel tomorrow, I hope.

Last week:

Upgrade to Modern Java, an NFJS Virtual Workshop

My regular course on Large-Scale and Open Source Computing at Trinity College (Hartford)

Prepare for all the talks I’m giving at GIDS in Bangalore

This week:

Way too many talks at GIDS, but hey, if it was easy, anybody could do it.

My regular course on Large-Scale and Open Source Computing at Trinity College (Hartford), online from Bangalore at 4am (again, if it was easy…)