Tales from the jar side: "Star Trek: The MCP Server", two new blog posts, a new video, a Junie live stream, and the usual toots and skeets

How many engagement baiters does it take to change a lightbulb? Answer in the comments. (rimshot)

Welcome, fellow jarheads, to Tales from the jar side, the Kousen IT newsletter, for the week of May 25 - June 1, 2025. This week I didn’t have any training classes or academic classes (welcome to the Summer break), but I published a video and a couple of blog posts.

Sure, I’ll sign your ebook

Let me start with the fun one, though I’ve already written about this app. I finally documented my Certificate Service in a blog post, which you can find here. As a reminder, my certificate service is all about generating a digitally signed PDF “Certificate of Ownership” for one of my books, which includes my own scanned signature. In other words, it’s all about generating a self-signed certificate signed by my own self-signed certificate. (rimshot)

To try it out, go to this link where it’s currently deployed, fill in your name, pick one of the books, and generate the PDF.

The only downside (for me, at least) is that now I’m going to have that song from Hamilton going through my head all afternoon.

The blog post summarizes a lot of the details, such as using Claude Code to help automate the annoying parts, like using the low-level Java signing libraries and the PDF generation. It includes the links to the service and the GitHub repository where all the code lives. Share and enjoy. :)

Waiting for Streaming Responses

The other blog post was about a geekier technical issue I ran into: when I tried to demonstrate asynchronous (streaming) responses coming back from AI tools, I needed a way to make a JUnit test wait for them. Otherwise the test exits before I get a response back. In this post, I wrote about three ways to wait:

Use a CountDownLatch from the Java library

Invoke the doOn… methods in the returned Flux

Delegate to the StepVerifier from Project Reactor

The post has all the details, in the wildly unlikely event that you need them.
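For a taste of the first option, here is a minimal sketch of the latch idea using only the JDK. It is not the code from the blog post: streamAsync is a hypothetical stand-in for a real streaming AI call, and the class and method names are made up for illustration. The point is just that the test thread blocks on the latch until the background stream says it's done.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

class LatchWaitSketch {

    // Hypothetical stand-in for a streaming AI call: emits tokens on a
    // background thread, then signals completion.
    static void streamAsync(List<String> tokens, Consumer<String> onNext, Runnable onDone) {
        Thread worker = new Thread(() -> {
            try {
                tokens.forEach(onNext);
            } finally {
                onDone.run(); // always signal, even if a consumer throws
            }
        });
        worker.setDaemon(true);
        worker.start();
    }

    // What a test body might do: start the stream, then block until it ends.
    static List<String> collectWithLatch(List<String> tokens) {
        CountDownLatch latch = new CountDownLatch(1);
        List<String> received = new CopyOnWriteArrayList<>();

        streamAsync(tokens, received::add, latch::countDown);

        try {
            // Bounded wait, so a hung stream fails instead of blocking forever
            if (!latch.await(5, TimeUnit.SECONDS)) {
                throw new IllegalStateException("Stream did not complete in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while waiting", e);
        }
        return received;
    }

    public static void main(String[] args) {
        System.out.println(collectWithLatch(List.of("Make", "it", "so")));
    }
}
```

The timeout on await is a design choice: without it, a stream that never completes would hang the test run rather than fail it.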

Later this week I ran into a similar problem, however, and none of these worked. All three of those examples were built assuming you were using the newly released Spring AI. When you call the “stream()” method on the ChatClient, you get back a Flux and you can go from there. This week, however, I’ve been working on my training course for LangChain4j, instead.

It turns out that LangChain4j uses a StreamingChatModel for async responses, and when you invoke the chat method on it, the second argument is a StreamingChatResponseHandler:

public interface StreamingChatResponseHandler {
    void onPartialResponse(String partialResponse);
    void onCompleteResponse(ChatResponse completeResponse);
    void onError(Throwable error);
}

That’s fine, and you can use the CountDownLatch approach with it by instantiating one beforehand and calling countDown in both the onError and the onCompleteResponse methods. The thing is, though, at the bottom of the documentation page describing this, they mention a potential simplification:

A more compact way to stream the response is to use the LambdaStreamingResponseHandler class. This utility class provides static methods to create a StreamingChatResponseHandler using lambda expressions. The way to use lambdas to stream the response is quite simple. You just call the onPartialResponse() static method with a lambda expression that defines what to do with the partial response

That’s actually a problem. The LambdaStreamingResponseHandler class has two (static) methods in it: onPartialResponse and onPartialResponseAndError. The first takes a Consumer<String>, which you can use to, say, print each token that comes by. The second method takes a Consumer<String> and a Consumer<Throwable>, with the intent being to supply one consumer for the streaming tokens and one for when there’s an error. The implementation of both methods is to create an anonymous inner class implementing StreamingChatResponseHandler and pass the arguments to the corresponding methods. (It makes more sense when you see the code, but I’m not going to clutter things here with that.)

The problem is, the implementation of the third method, onCompleteResponse, is to do nothing. That’s a problem, because if I don’t call countDown() on my latch in there, the whole system doesn’t work. Worse, there’s no obvious way to change that behavior, and since they aren’t returning a Flux (like the Spring AI people do), I can’t use doOn methods or a StepVerifier instead. In other words, I’ve got no way to wait for the response to be done other than just sleeping on the main thread for a while, and that’s really not a good practice. That means this nice, shiny, new lambda version of the class is useless to me.

It’s possible I’m missing something, and if you know what I didn’t, please let me know. If not, I might wind up raising an issue and offering to write a version with a CountDownLatch built into it that calls the countDown method (and the await method) at the right places. That would be a nice commit to the framework, but I don’t want to go to all that trouble unless I’m right about this. I’ll report on what happens, assuming anything does.
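To make that idea concrete, here is a rough sketch of what a latch-backed handler could look like. Everything in it is hypothetical: AwaitableHandler is not a LangChain4j class, and SketchStreamingHandler is a simplified stand-in for StreamingChatResponseHandler (it takes a String where the real onCompleteResponse takes a ChatResponse) so the example compiles with nothing but the JDK. The two factory methods mirror the names described above, and the latch is counted down on both completion and error.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

// Simplified stand-in for LangChain4j's StreamingChatResponseHandler
// (an assumption for this sketch, not the real interface).
interface SketchStreamingHandler {
    void onPartialResponse(String partialResponse);
    void onCompleteResponse(String completeResponse);
    void onError(Throwable error);
}

// Hypothetical helper: a lambda-style handler with a built-in latch.
final class AwaitableHandler implements SketchStreamingHandler {
    private final CountDownLatch latch = new CountDownLatch(1);
    private final Consumer<String> onPartial;
    private final Consumer<Throwable> onFailure;

    private AwaitableHandler(Consumer<String> onPartial, Consumer<Throwable> onFailure) {
        this.onPartial = onPartial;
        this.onFailure = onFailure;
    }

    static AwaitableHandler onPartialResponse(Consumer<String> onPartial) {
        return new AwaitableHandler(onPartial, Throwable::printStackTrace);
    }

    static AwaitableHandler onPartialResponseAndError(Consumer<String> onPartial,
                                                      Consumer<Throwable> onFailure) {
        return new AwaitableHandler(onPartial, onFailure);
    }

    @Override
    public void onPartialResponse(String partialResponse) {
        onPartial.accept(partialResponse);
    }

    @Override
    public void onCompleteResponse(String completeResponse) {
        latch.countDown(); // normal completion releases the waiting thread
    }

    @Override
    public void onError(Throwable error) {
        try {
            onFailure.accept(error);
        } finally {
            latch.countDown(); // errors must release the waiting thread too
        }
    }

    // Returns true if the stream finished (or failed) within the timeout.
    boolean await(long timeout, TimeUnit unit) {
        try {
            return latch.await(timeout, unit);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        AwaitableHandler handler = AwaitableHandler.onPartialResponse(System.out::println);
        // Simulate a model streaming from another thread
        new Thread(() -> {
            handler.onPartialResponse("Engage");
            handler.onCompleteResponse("Engage");
        }).start();
        System.out.println("completed: " + handler.await(5, TimeUnit.SECONDS));
    }
}
```

A test would pass the handler to the streaming call and then call await, which replaces sleeping on the main thread with a bounded wait.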

AI Integration in Java

I finally managed to publish a new video this week:

Yeah, the title is a bit more clickbaity than I like, but so be it. It’s a relatively high-level overview of the three major approaches to adding AI tools to Java. Two of them are frameworks, and the third treats AI tools like just another REST-based web service:

Spring AI

LangChain4j

Direct REST access

I also threw in a section at the end on the rise of AI agents, but I didn’t say a lot about them. That leads me to my next section.

I join the MCP stampede

MCP stands for Model Context Protocol, and is all about getting AI tools to talk to each other. There are tons of services out there that AI tools could use if they need them, so Anthropic (the makers of the Claude AI tool) came up with a common protocol for everybody to talk to each other. Both OpenAI and Google have agreed to support it, which means pretty much everybody is going to support it.

In the Java world, I knew that both the major frameworks were planning to support it, so I was waiting for the 1.0 release of both. Lo and behold, they’re both available now. I’m planning to add a few demos to both my Spring AI and my LangChain4j training courses to use them.

As it happens, Spring AI is way, way ahead of LangChain4j on this. Both frameworks have client support, so they help you access an existing MCP server wherever it may be. Only Spring AI also lets you develop your own server. Also, Spring AI’s approach is simpler for both clients and servers.

The question then becomes, what existing servers to add, and (if I use Spring AI), what servers to create? In the Claude Desktop app, you can add any given MCP server by stuffing a block of JSON data inside its claude_desktop_config.json file and restarting the app:

"mcpServers": {

"sqlite": {

"command": "uvx",

"args": ["mcp-server-sqlite", "--db-path", "/path/test.db"]

},

"filesystem": {

"command": "npx",

"args": ["-y", "@modelcontextprotocol/server-filesystem", "/somefolder"]

},

"tavily-mcp": {

"command": "npx",

"args": ["-y", "tavily-mcp@0.1.2"],

"env": {

"TAVILY_API_KEY": "tvly-dev-..."

}

}

}

The first two of those (sqlite and filesystem) are part of the standard servers provided by Anthropic when introducing MCP. The one from Tavily is a search engine that lets Claude search the web and/or extract web data from a specific site if it needs to. That one requires a key, and it turns out there’s no way to avoid having to hard code your own key in there.

What you include in that file is an instruction for the client to use to start up the server and then use it as a tool if it needs it. There are a lot of tools available (see a big list here), but if you’re willing to write your own server, all you need to do is tell the client how to start it, and you’re good.
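To make the "instruction to start up the server" idea concrete, here is a minimal Java sketch of how a client might turn one of those config entries into a process launch. The McpServerEntry record and its toProcessBuilder method are hypothetical names invented for illustration, not part of any MCP SDK, and the sketch only builds the ProcessBuilder rather than starting anything (records need Java 16 or later). A real client would go on to start the process and talk to it, typically over its standard input and output.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical record mirroring one entry under "mcpServers":
// a command, its arguments, and optional environment variables.
record McpServerEntry(String command, List<String> args, Map<String, String> env) {

    // Builds (but does not start) the process a client would launch.
    ProcessBuilder toProcessBuilder() {
        List<String> commandLine = new ArrayList<>();
        commandLine.add(command);
        commandLine.addAll(args);

        ProcessBuilder builder = new ProcessBuilder(commandLine);
        builder.environment().putAll(env); // e.g. an API key for the server
        return builder;
    }
}

class McpLaunchSketch {
    public static void main(String[] args) {
        McpServerEntry filesystem = new McpServerEntry(
                "npx",
                List.of("-y", "@modelcontextprotocol/server-filesystem", "/somefolder"),
                Map.of());

        // Prints the command line the client would run
        System.out.println(filesystem.toProcessBuilder().command());
    }
}
```

Seen this way, adding your own server to the config really is just a matter of giving the client a command line it can run.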

A Star Trek MCP server

That leads me to this awesome blog post, by Daniela Petruzalek, a developer relations engineer at Google:

The tl;dr of her post (and I do recommend reading the whole thing) is that you can install a command line tool on a Mac called osquery, which has a simple command-line interface to your system properties, and then you can ask it questions using an AI agent.

Like most AI people, Daniela used Python for her implementation, and like most Google people, she used a Google library to make it all work.

The key part is here:

She created an agent by calling the LangChainAgent constructor, and supplied her access to osquery through that tools list. The agent class took care of the rest. She was then able to say, “Computer, run a Level 1 diagnostic” and got back all the system information from osquery.

Obviously, I had to try to replicate that in Java. I used Spring AI, because it was really easy to create a service that wrapped osquery.

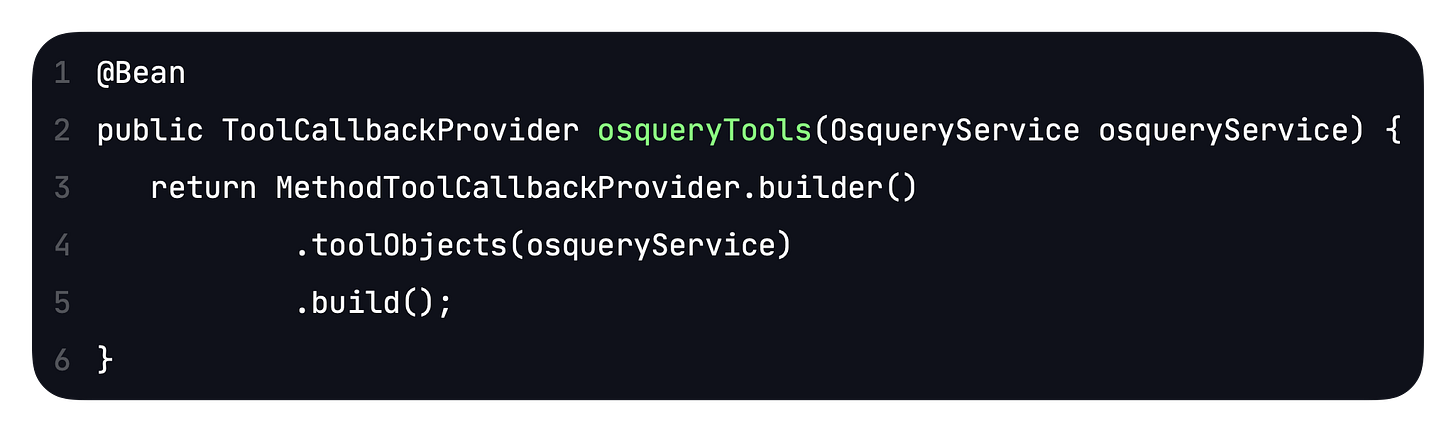

I needed to expose a bean for this:

Then I added some configuration properties:

And that’s basically the entire service. I added some JSON to my claude_desktop_config.json file (with arguments like “java”, “-jar”, and the path to my executable jar file), and suddenly Claude Desktop could access info about my system.
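If you want to try the osquery part on its own, without Spring, here is a minimal sketch of the wrapping idea using only the JDK. This is not the code from the service above: the OsqueryRunner class and its buildCommand and run methods are made up for illustration, and it assumes the osqueryi shell is installed and on your PATH. It passes a SQL query to osqueryi with the --json flag and returns whatever comes back.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.List;

// Hypothetical wrapper around the osqueryi command-line shell.
class OsqueryRunner {

    // Command line for a one-shot query with JSON output.
    static List<String> buildCommand(String sql) {
        return List.of("osqueryi", "--json", sql);
    }

    // Runs the query and returns the raw output as a string.
    static String run(String sql) {
        try {
            Process process = new ProcessBuilder(buildCommand(sql))
                    .redirectErrorStream(true) // fold stderr into the output
                    .start();
            String output = new String(
                    process.getInputStream().readAllBytes(), StandardCharsets.UTF_8);
            int exitCode = process.waitFor();
            if (exitCode != 0) {
                throw new IllegalStateException(
                        "osqueryi exited with " + exitCode + ": " + output);
            }
            return output;
        } catch (IOException e) {
            throw new IllegalStateException(
                    "Could not run osqueryi; is it installed and on the PATH?", e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while waiting for osqueryi", e);
        }
    }

    public static void main(String[] args) {
        // system_info is one of osquery's built-in tables
        System.out.println(run("SELECT hostname, cpu_brand, physical_memory FROM system_info"));
    }
}
```

A method like run is the kind of thing a framework can then expose to an AI client as a tool.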

I couldn’t leave that alone, however. With some AI help, I eventually produced this little nugget:

If you click and hold the button labeled COMM, you can speak your command into your microphone. Spring AI then uses the whisper-1 model to transcribe the audio into text, which it shows right below the button. It then submits that to the MCP service, which asks osquery for the data, and puts the results inside the text area at the bottom. Nice.

I haven’t yet added the capability for the system to read back the final response to me directly, which shouldn’t be too hard. I’m not sure I want something like that on a response this large, but I’ll think about it.

This client app uses MCP and Spring AI with a JavaFX front end. That combination is always a challenge (at least for me) to get right, but you can probably expect a video on all this.

Junie Live Stream Tomorrow

A few weeks ago, Anton Arhipov from JetBrains posted on LinkedIn about the Junie AI agent. Junie is part of their overall AI tools subscription and integrated directly into IntelliJ IDEA, among other products. I couldn’t resist saying that I found it slow and not terribly helpful, at which point Anton invited me to appear tomorrow on their monthly Live Stream:

He found an old picture of me, but hey, I’ll never have that much hair again anyway. :)

Please drop by if you’re available. It starts at 1 pm EDT, and I would give you that in UTC or other time zones but I’m likely to get that wrong, so just ask Google. Actually, the Bluesky post has CET:

My actual account on Bluesky is @kousenit.com, btw. I probably should have said something earlier. Oh well.

Here are my biases going in:

I’m a huge fan of JetBrains as a company, and really like all the people I’ve met who work there.

I use IntelliJ IDEA, WebStorm, and PyCharm as my primary IDEs and I am very happy with all of them.

For a few years I was one of their Training Partners for Kotlin.

These days I practically live on Claude Code, and while I’ve played with Junie, I find it rather underwhelming. I’ve tried OpenAI’s Codex CLI, which is okay, and Google’s new Jules product. For that one, see the next section.

I’m happy to represent Claude Code (though I’ll emphasize I don’t work for or with Anthropic — I just use the tool so much I had to upgrade my subscription) and while I’m not thrilled with Junie, I’m willing to be persuaded.

I should also mention that the IntelliJ IDEA conference is being held this week on Tuesday and Wednesday. I hope to attend several talks, even though they’re starting at 5 am Eastern time. Yawn.

Jules, from Google

I mentioned in my video that the AI agents I used were Claude Code, OpenAI’s Codex CLI, and Junie. Significant by its absence was anything from Google.

Wait, there is one now. It’s called Jules, from Google Labs:

Isn’t it just like Google to use as their avatar an image strongly resembling Cthulhu? Maybe it’s just supposed to be a squid. I don’t know. Their blog post doesn’t say. But seriously, if you’re marketing a new agent to developers, do you want to be associated with a Lovecraftian horror? That reminds me of when Windows 95 was released (yeah, I remember that, I’m old) and they used Start Me Up from the Rolling Stones in all of their commercials, seemingly unaware that the chorus of that song was, “You make a grown man cry”. Incredibly clueless. If you go to the Jules site, the squid bounces up and down, too.

I tried it out, and … ugh. The less said at this point, the better. They’re no threat to anybody right now.

That concert I was in a couple weeks ago

Remember that concert I mentioned, where my wife and I were both performing? That concert we spent hours and hours preparing for, all for a single show? Well, the YouTube video is out now:

I was planning to go through and create time markers for all the individual songs, but I ran out of time this week. I figured I’d share it with you anyway.

A good time was had by all. 🎶

Toots and Skeets

Not Cthulhu, but evil nonetheless

Maybe I’ll have that song going through my head now, instead of Hamilton.

Second law of thermodynamics, indeed

I mean, it’s not like they have much choice. It’s the law.

It was glorious

Wait, a chicken reference? Are we going there?

No, not really. This was clever, though:

And unrelated, but I did enjoy this:

As I always suspected

The Onion had a good week. I should text them that, but maybe I’ll pull over first. Nah.

Also not Cthulhu, but okay

Why not have fun with the other monsters?

At least they’re not fighting

And, finally:

Graduation

Have a great week, everybody. :)

Last week:

New YouTube video

Two new blog posts

This week:

LangChain4j, on the O’Reilly Learning Platform

Junie Live Stream, with Anton Arhipov